Synthesis: Designing Smarter Groups

We've covered a lot of ground: the wisdom of crowds, prediction markets, superforecasting, groupthink, swarm intelligence, epistemic democracy. Now let's pull the threads together into practical principles for making groups smarter.

The core insight is simple: collective intelligence is engineerable. Groups can be smarter than individuals, or dumber. The difference isn't mainly about who's in the group. It's about how the group is structured.

This isn't abstract theory. Organizations make collective decisions every day: hiring committees, strategic planning sessions, risk assessments, product launches. Most of these processes are terrible. They're designed for accountability or politics or tradition—not for actually producing good decisions. The science of collective intelligence tells us how to do better.

The Four Conditions, Revisited

Throughout this series, we've seen the same conditions appear in different contexts:

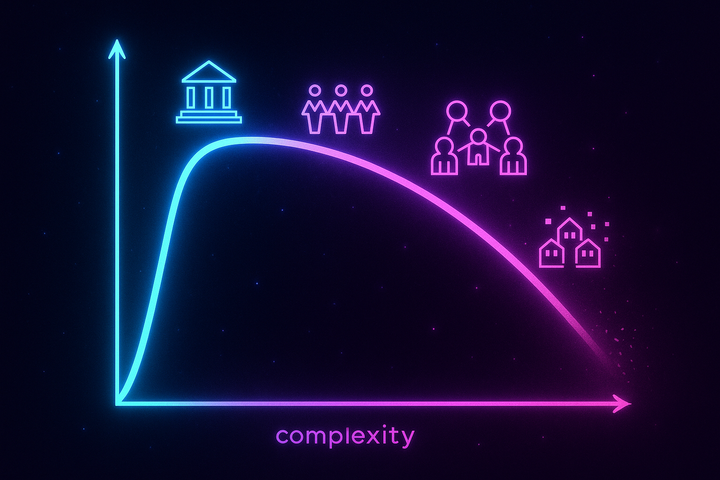

Diversity. Different people with different information, perspectives, and ways of thinking. Not demographic diversity as an end in itself, but cognitive diversity—different errors that cancel rather than compound.

Independence. People forming their own judgments rather than copying each other. The moment everyone watches what everyone else is doing, the crowd becomes a herd.

Decentralization. Distributed knowledge being used locally rather than centralized and homogenized. The person on the ground knows things the headquarters can't.

Aggregation. A mechanism for combining individual judgments into collective output. Averaging, voting, markets, deliberation—the method matters, and different methods work for different problems.

These conditions are the recipe. When they hold, collective intelligence emerges. When they fail, groups become mobs, committees become groupthink factories, and markets become bubbles.

The engineering challenge is creating and maintaining these conditions in real-world settings where social dynamics, institutional pressures, and human nature constantly push against them.

Design Principle 1: Collect Judgments Before Discussion

The simplest, highest-impact intervention is to collect individual estimates before any group discussion.

When discussion comes first, early speakers anchor everyone else. Social pressure pushes toward consensus. Independence dies within minutes. The "group decision" is really just the opinion of whoever spoke first, with everyone else's private information suppressed.

Instead: Have each person write down their estimate, prediction, or recommendation privately. Collect these before anyone speaks. Then share them all at once, anonymized if necessary.

This preserves independence for the initial estimates. Discussion can still follow—to share information and arguments—but the discussion starts with visible diversity rather than premature convergence.

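Prediction markets guard independence in their own way: traders see the current price but not who holds which view or why, and each trade is a private decision with money at stake. Superforecasting tournaments collect individual forecasts deliberately. The Delphi method institutionalizes the idea with multiple rounds of anonymous, independent estimation, with summary feedback between rounds.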
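The feedback step is what separates Delphi from a one-shot survey. The following is a minimal Python illustration, not a standard implementation. It assumes the facilitator reports the median and interquartile range between rounds (a common convention), and it stands in for human judgment with a hypothetical `revise` rule in which each panelist moves a fixed fraction of the way toward the median. All function names are illustrative.

```python
import statistics


def delphi_feedback(estimates):
    """Summarize one anonymous round as the median and interquartile range."""
    q1, _, q3 = statistics.quantiles(estimates, n=4)
    return {"median": statistics.median(estimates), "q1": q1, "q3": q3}


def revise(own, feedback, pull=0.3):
    """Hypothetical revision rule: move a fraction `pull` of the way toward the median.

    Real panelists revise by judgment and may hold their ground with reasons;
    a fixed pull just makes the sketch deterministic.
    """
    return own + pull * (feedback["median"] - own)


def run_delphi(initial_estimates, rounds=3, pull=0.3):
    """Alternate private estimation with anonymous statistical feedback."""
    estimates = list(initial_estimates)
    history = []
    for _ in range(rounds):
        feedback = delphi_feedback(estimates)
        history.append(feedback)
        # Each panelist sees only the summary, never who said what.
        estimates = [revise(e, feedback, pull) for e in estimates]
    return estimates, history


final, history = run_delphi([10, 12, 15, 20, 40])
for i, fb in enumerate(history, start=1):
    print(f"round {i}: median={fb['median']:.1f}, IQR={fb['q3'] - fb['q1']:.1f}")
```

With this sample panel the median stays at 15 while the interquartile range shrinks by 30% each round (19, then 13.3, then about 9.3). That is convergence, not accuracy: Delphi's value comes from anonymity keeping the early rounds independent, and in real studies a panelist can keep an outlier position and explain it, which a fixed pull cannot capture.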

Implementation: In your next meeting where a decision or estimate is needed, have everyone write their view on paper (or in a private chat) before any discussion begins. Share all views simultaneously. Then discuss. Compare the quality of decisions made this way versus your normal process.
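If the private step happens in software rather than on paper, the property that matters is that nothing is visible until every estimate is in. Here is a minimal Python sketch of that rule. The `SealedRound` class and its methods are hypothetical, and shuffling the revealed values is just one simple way to anonymize them.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SealedRound:
    """Collect private estimates; reveal nothing until everyone has submitted."""

    participants: set[str]
    _submissions: dict[str, float] = field(default_factory=dict, init=False, repr=False)

    def submit(self, person: str, estimate: float) -> None:
        if person not in self.participants:
            raise ValueError(f"{person!r} is not part of this round")
        if person in self._submissions:
            # One shot per person: no revising after glimpsing the room.
            raise ValueError(f"{person!r} has already submitted")
        self._submissions[person] = estimate

    def is_complete(self) -> bool:
        return set(self._submissions) == self.participants

    def reveal(self, anonymize: bool = True, seed: Optional[int] = None) -> list:
        """Show all estimates at once; shuffle and drop names if anonymizing."""
        if not self.is_complete():
            missing = sorted(self.participants - set(self._submissions))
            raise RuntimeError(f"still waiting on: {missing}")
        if not anonymize:
            return sorted(self._submissions.items())
        values = list(self._submissions.values())
        random.Random(seed).shuffle(values)  # order no longer hints at who said what
        return values


round_ = SealedRound(participants={"ana", "ben", "chen"})
for person, estimate in [("ana", 120), ("ben", 95), ("chen", 150)]:
    round_.submit(person, estimate)
print(round_.reveal(seed=7))  # the three numbers in shuffled order, without names
```

Refusing a second submission stops people from adjusting after they see others' numbers. `reveal(anonymize=False)` is meant for the later discussion step, when attributing views may help.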

Design Principle 2: Assign Devil's Advocates

Make dissent legitimate by assigning it as a role.

In cohesive groups, disagreeing feels socially costly. People self-censor to preserve harmony. Groupthink emerges not because anyone wants bad decisions, but because no one wants to be the troublemaker.

Assigning a devil's advocate changes the dynamics. Now disagreement isn't betrayal—it's doing your job. The assigned dissenter can raise concerns without being seen as disloyal because raising concerns is their explicit role.

But there's a catch: pro forma devil's advocacy doesn't work. If everyone knows the devil's advocate doesn't really believe their objections, the objections get dismissed. Effective devil's advocacy requires:

- Genuine effort to find the best counterarguments
- Explicit norm that the advocate will be taken seriously
- Sometimes rotating the role so it's not always the same person
- Actually changing course when the advocate's objections are compelling

Kennedy learned this after the Bay of Pigs. During the Cuban Missile Crisis, his brother Robert played devil's advocate, and ExComm members were encouraged to argue different sides across meetings. Largely the same advisers who produced the Bay of Pigs fiasco produced the successful resolution of the Missile Crisis, working under a different process.

Implementation: In your next high-stakes decision, explicitly assign someone to argue against the emerging consensus. Give them preparation time. Take their arguments seriously enough to change your mind if they're good.

Design Principle 3: Use Appropriate Aggregation Mechanisms

Different problems need different aggregation methods.

If you're trying to estimate a quantity (How many customers will we have next quarter? What's the probability this project will be late?), averaging works well. Measured by squared error, the average of a set of estimates is never less accurate than the individual estimates are on average, and when the estimates are independent it often beats most of them.
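The averaging claim follows from an identity Scott Page calls the diversity prediction theorem: the crowd's squared error equals the members' average squared error minus the diversity of their estimates (their variance around the crowd's mean). Because diversity can't be negative, the average can never do worse than its members do on average. A quick Python check, using invented numbers:

```python
def diversity_prediction(estimates, truth):
    """Return (crowd error, average individual error, diversity), all as squared errors.

    Page's identity: crowd error == average individual error - diversity.
    """
    n = len(estimates)
    crowd = sum(estimates) / n
    crowd_error = (crowd - truth) ** 2
    avg_individual_error = sum((s - truth) ** 2 for s in estimates) / n
    diversity = sum((s - crowd) ** 2 for s in estimates) / n  # spread around the crowd's mean
    return crowd_error, avg_individual_error, diversity


# Invented numbers: five independent guesses vs. five guesses anchored on an early speaker.
independent = [900, 1100, 1300, 1000, 1500]
anchored = [1400, 1420, 1390, 1410, 1380]
for label, guesses in [("independent", independent), ("anchored", anchored)]:
    crowd, avg_ind, div = diversity_prediction(guesses, truth=1200)
    print(f"{label}: crowd={crowd:.0f} avg_individual={avg_ind:.0f} diversity={div:.0f}")
```

The independent guesses give a crowd error of 1,600 against an average individual error of 48,000. The anchored group's members are individually a little closer on average (40,200), yet their average lands far off (40,000) because diversity has collapsed to 200. That is the arithmetic behind protecting independence.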

If you're choosing among discrete options (Should we pursue Strategy A or Strategy B?), voting or ranking methods work better. Consider intensity-weighted voting if some people are much more confident than others, but weight by track record where you have one: confidence is not the same as accuracy.
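For ranking among options, one common method is the Borda count: with k options, each ballot awards k-1 points to its first choice, k-2 to its second, and so on down to 0 for its last. It is one ranking method among several (instant-runoff and Condorcet methods are others), and the ballots below are invented.

```python
def borda(ballots):
    """Rank options by Borda score: k-1 points for a first choice, k-2 for second, ... 0 for last."""
    options = set(ballots[0])
    for ballot in ballots:
        if len(ballot) != len(options) or set(ballot) != options:
            raise ValueError("every ballot must rank every option exactly once")
    k = len(options)
    scores = {option: 0 for option in options}
    for ballot in ballots:
        for position, option in enumerate(ballot):
            scores[option] += k - 1 - position
    # Highest score first; ties are broken alphabetically, which is an arbitrary choice.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


ballots = [
    ["Strategy A", "Strategy B", "Strategy C"],
    ["Strategy B", "Strategy C", "Strategy A"],
    ["Strategy B", "Strategy A", "Strategy C"],
    ["Strategy A", "Strategy C", "Strategy B"],
    ["Strategy C", "Strategy B", "Strategy A"],
]
print(borda(ballots))
```

Counting only first choices, Strategy A and Strategy B tie at two votes each. Using the full rankings, B comes out ahead (6 points to A's 5) because it is rarely anyone's last choice.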

If you need continuous tracking of probability estimates, markets or betting pools create ongoing accountability and enable rapid updating.
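Internal prediction markets often have too few traders to match buyers with sellers directly; a common remedy is an automated market maker. One well-known design is Robin Hanson's logarithmic market scoring rule (LMSR). The sketch below assumes LMSR; the outcome names and the liquidity parameter `b` are illustrative, and a real deployment would also need accounts, settlement, and trading limits.

```python
import math


class LMSRMarket:
    """Automated market maker using Hanson's logarithmic market scoring rule.

    Each share pays 1 unit if its outcome occurs. Cost function:
    C(q) = b * ln(sum_i exp(q_i / b)); a trade costs C(after) - C(before).
    """

    def __init__(self, outcomes, b=100.0):
        self.b = b  # liquidity: larger b means prices move less per share traded
        self.shares = {outcome: 0.0 for outcome in outcomes}

    def _cost(self, shares):
        # Log-sum-exp shifted by the max for numerical stability.
        top = max(shares.values())
        return top + self.b * math.log(
            sum(math.exp((q - top) / self.b) for q in shares.values())
        )

    def price(self, outcome):
        """Current price of one share, readable as the market's probability."""
        top = max(self.shares.values())
        weights = {o: math.exp((q - top) / self.b) for o, q in self.shares.items()}
        return weights[outcome] / sum(weights.values())

    def buy(self, outcome, amount):
        """Buy `amount` shares of `outcome`; returns what the trader pays."""
        after = dict(self.shares)
        after[outcome] += amount
        cost = self._cost(after) - self._cost(self.shares)
        self.shares = after
        return cost


market = LMSRMarket(["on time", "late"])
print(f"P(late) before: {market.price('late'):.2f}")
paid = market.buy("late", 50)  # a trader who thinks the project will slip
print(f"paid {paid:.2f}; P(late) after: {market.price('late'):.2f}")
```

Because each share pays 1 if its outcome occurs, the price doubles as the market's current probability. The buyer here pays about 28 for 50 shares and moves P(late) from 0.50 to roughly 0.62. The market maker's worst-case loss is b * ln(n) for n outcomes, which lets an organization budget in advance for running the market.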

If you need to share information and generate new options, structured deliberation is necessary—but deliberation should happen after independent estimates, not before.

Never use deliberation alone for decisions that have correct answers. Discussion is good for generating options and sharing information. It's bad for converging on truth because social dynamics contaminate the aggregation.

Implementation: Match your mechanism to your task. For estimates: average independent inputs. For choices: vote after information sharing. For ongoing forecasts: create internal prediction markets. For brainstorming: deliberate. For final decisions: aggregate, don't discuss.

Design Principle 4: Preserve Cognitive Diversity

Diverse perspectives are the raw material of collective intelligence. Protect them.

Hiring for "culture fit" often means hiring people who think like the existing team. This feels comfortable but destroys cognitive diversity. You end up with a room full of smart people who all have the same blind spots.

Cognitive diversity means:

- Different disciplinary backgrounds
- Different life experiences
- Different problem-solving styles
- Different network connections
- People who will disagree with each other

This is uncomfortable. Diverse teams have more conflict, more friction, more difficulty reaching consensus. But that difficulty is the feature, not the bug. Easy consensus often means everyone's making the same error. Hard-won consensus means the group has actually grappled with different perspectives.

A recurring finding in research on group problem solving is that homogeneous teams feel better but perform worse on complex problems, while diverse teams feel more difficult but solve those problems better. Our intuitions about what makes a good team are often wrong. Trust the data, not the vibe.

Implementation: Actively recruit people who think differently. When you notice everyone agreeing easily, ask: "Are we all making the same mistake?" Reward constructive disagreement. Measure not just whether people get along but whether they challenge each other.

Design Principle 5: Create Feedback Loops

You can't improve what you don't measure.

Most organizations make predictions and decisions and then... never check. The forecasts disappear into the void. The decisions are made and rationalized regardless of outcome. No one tracks accuracy; no one learns.

Superforecasters get good by getting feedback. They make specific, measurable predictions. They track whether those predictions came true. They study their hits and misses. They learn what they're good at and what they're not.

Organizations could do this too. Track your forecasts. Score your predictions. Identify who in your organization actually predicts well and who just sounds confident. Promote the former; train or demote the latter.

Implementation: Start logging predictions. For any significant forecast or decision rationale, write down what you expect to happen and when. Revisit periodically. Calculate accuracy. Learn from the gaps.
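A prediction log needs only a statement, a probability, a check date, and eventually an outcome. To score it, the Good Judgment Project behind superforecasting used Brier scores. The sketch below uses the simple binary form, (probability - outcome)^2, which runs from 0 (perfect) to 1, whereas Tetlock reports a two-outcome version that runs from 0 to 2. The record layout, names, and data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Forecast:
    forecaster: str
    question: str
    probability: float  # stated probability that the event happens, 0 to 1
    due: date  # when the question should be checked
    outcome: Optional[bool] = None  # filled in once the question resolves


def brier(forecast: Forecast) -> float:
    """Squared gap between the stated probability and what happened (0 is perfect, 1 is worst)."""
    happened = 1.0 if forecast.outcome else 0.0
    return (forecast.probability - happened) ** 2


def due_for_review(log, today):
    """Forecasts whose check date has passed but have no recorded outcome yet."""
    return [f for f in log if f.outcome is None and f.due <= today]


def mean_brier_by_forecaster(log):
    """Average Brier score per person over resolved forecasts only; lower is better."""
    scores = defaultdict(list)
    for f in log:
        if f.outcome is not None:
            scores[f.forecaster].append(brier(f))
    return {name: sum(s) / len(s) for name, s in scores.items()}


log = [
    Forecast("ana", "Launch ships by March 31", 0.3, date(2024, 3, 31), False),
    Forecast("ana", "Churn exceeds 5% in Q1", 0.6, date(2024, 4, 15), True),
    Forecast("ben", "Launch ships by March 31", 0.95, date(2024, 3, 31), False),
    Forecast("ben", "Churn exceeds 5% in Q1", 0.9, date(2024, 4, 15), True),
    Forecast("ben", "Competitor cuts prices", 0.7, date(2024, 6, 30)),
]
print(mean_brier_by_forecaster(log))
print([f.question for f in due_for_review(log, date(2024, 7, 1))])
```

Here `ben` makes the more confident forecasts but scores far worse (about 0.46 against 0.125), which is exactly the gap between predicting well and sounding confident. Two questions are far too few to judge anyone; scores become informative only over many resolved forecasts.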

Alongside collecting judgments before discussion, this is one of the simplest high-value interventions for individual and organizational improvement: keep score. It's remarkable how rare it is. Most organizations make thousands of implicit predictions and check almost none of them. They're flying blind by choice.

Design Principle 6: Reduce Social Stakes

The more a judgment feels like a social loyalty test, the less accurate it becomes.

When expressing an opinion signals tribal membership, opinions stop being judgments and start being badges. Political polarization destroys epistemic quality because every question becomes a question about identity. "Is climate change real?" becomes "Which team are you on?"

In organizational contexts, similar dynamics apply. If disagreeing with the boss is career-limiting, no one will disagree with the boss—even when the boss is wrong. If challenging the company strategy signals disloyalty, the strategy will never be challenged—even when it should be.

Implementation: Anonymize input where possible. Separate idea evaluation from idea authorship. Explicitly protect and reward constructive disagreement. Make it safe to be wrong. Model changing your mind in response to evidence.

The Meta-Principle

All these specific principles serve a single meta-principle: Treat collective decision-making as a design problem, not a social ritual.

Most meetings, committees, and planning processes exist for reasons that have nothing to do with decision quality. They're about inclusion, accountability, socialization, politics. These functions matter—but they're not the same as making good decisions.

If you want good collective decisions, you need to design for them. That means asking: Are we preserving diversity? Are we protecting independence? Are we using appropriate aggregation? Are we getting feedback?

The science of collective intelligence has answers. The question is whether we'll use them.

What's at Stake

The challenges we face (climate change, pandemic response, AI governance, geopolitical instability) require good collective decisions at scale. Individual humans can't solve these problems alone. But groups of humans often make worse decisions than their members would on their own.

We know how to do better. Prediction markets, structured estimation, deliberative polling, superforecasting techniques—these work. They're not perfect, but they're vastly better than the status quo of committees, punditry, and meetings without structure.

The obstacle isn't knowledge. It's implementation. Organizations don't want to admit that their processes are broken. Leaders don't want to be told that markets know more than they do. Experts don't want to compete with amateurs.

But the decisions we make collectively will determine our collective future. We can continue making them badly, deferring to confidence over calibration, rewarding style over substance, and pretending that conventional processes produce wisdom.

Or we can engineer for collective intelligence. Create diversity. Protect independence. Aggregate properly. Learn from feedback.

The groups that do this will outperform the groups that don't. That's not theory—it's the empirical record. The question is whether we care enough about getting things right to change how we operate.

The Takeaway

Collective intelligence is designable. Groups can be smarter than individuals—or dumber—depending on their structure and processes.

The key principles: collect judgments before discussion, assign devil's advocates, use appropriate aggregation mechanisms, preserve cognitive diversity, create feedback loops, and reduce social stakes.

These aren't complicated. They're just not how most organizations operate. Implementing them requires admitting that current practices are broken—and that good decisions matter more than comfortable processes.

The science exists. The tools exist. What remains is the will to use them.

Further Reading

- Surowiecki, J. (2004). The Wisdom of Crowds. Doubleday.
- Tetlock, P. E., & Gardner, D. (2015). Superforecasting. Crown.
- Sunstein, C. R., & Hastie, R. (2015). Wiser. Harvard Business Review Press.
- Page, S. E. (2007). The Difference. Princeton University Press.

This concludes the Collective Intelligence series. For more on how groups and societies make decisions, see the Anthropology of Institutions series.
