The Machines Are Talking

In 2016, researchers studying the Brexit referendum found that fewer than 1 percent of the accounts in their sample generated almost a third of the Twitter traffic on the topic. Much of that volume was not humans expressing opinions but software executing influence operations at scale.

Computational propaganda is the use of algorithms, automation, and big data to shape public opinion. It's influence operations industrialized—the same manipulation techniques humans have used for centuries, now executed by machines at speeds and scales impossible for humans alone.

This changes the game. You're not just competing with other humans for attention. You're competing with software designed specifically to capture and redirect it.

The Bot Ecosystem

Not all automated accounts are the same. The ecosystem has evolved sophisticated specialization.

Spam bots are the primitive ancestors—accounts that blast out content indiscriminately. They're easy to detect: high volume, no engagement, obvious automation markers. Platforms catch most of them.

Amplification bots don't create content; they boost it. They retweet, like, and share content from human accounts, creating artificial popularity signals. This triggers algorithmic amplification—the platform's own systems then promote the content further. The bot creates the spark; the algorithm fans the flames.

Sockpuppets are fake accounts operated by humans, often in coordinated networks. Each account has a persona—a profile picture, a posting history, a personality. They're labor-intensive but more convincing than automated accounts.

Cyborgs are the hybrid: human-operated accounts using automation for some tasks. A human writes the tweets; software handles the scheduling, the following, the engagement farming. The human provides authenticity; the machine provides scale.

Astroturf networks coordinate all of the above. Dozens or hundreds of accounts, some automated and some human-operated, working together to simulate grassroots movements. The name comes from AstroTurf—artificial grass designed to look real.

The sophistication keeps increasing. Early bots were obvious. Current operations are designed specifically to evade detection, mimicking human behavior patterns closely enough to fool both platforms and researchers.

The Influence Operation Playbook

Computational propaganda operations follow recognizable patterns.

Phase 1: Network building. Before an operation, accounts need to exist and look legitimate. This means creating accounts months or years in advance, building follower networks, establishing posting histories. The accounts lie dormant or post innocuous content until activated.

Phase 2: Narrative seeding. When the operation begins, coordinated accounts introduce a narrative—often through apparently independent "discoveries" of the same information. Multiple accounts "find" the same story; it appears to spread organically.

Phase 3: Amplification. Once seeded, the narrative gets boosted. Amplification bots drive engagement numbers up. Coordinated accounts all share and comment. This triggers algorithmic promotion, extending reach far beyond the original network.

Phase 4: Mainstream crossover. The goal is often to get the narrative picked up by legitimate media or real influencers. Once a journalist or politician tweets about it, the operation has succeeded—it's now part of genuine discourse.

Phase 5: Persistence. After initial spread, continued low-level activity keeps the narrative alive. Whenever someone searches the topic, they find years of apparent discussion.

This playbook has been documented in operations from Russia, China, Iran, Saudi Arabia, and numerous other state and non-state actors. The techniques are converging toward a common standard because they work.

Scale Changes Everything

Human propaganda has always existed. What's new is the scale.

A single human can operate dozens of sockpuppet accounts. Software can operate thousands. A state-sponsored operation can deploy millions.

In the 2016 U.S. election, Twitter identified approximately 50,000 Russian-linked automated accounts that generated about 2.1 million tweets. The human staff numbered in the hundreds. By Facebook's estimate, the content reached 126 million Americans on that platform alone.

Chinese operations have deployed millions of accounts. Researchers estimated that the "50 Cent Army" alone fabricates about 448 million social media posts a year, and those come from human commenters, not automated accounts.

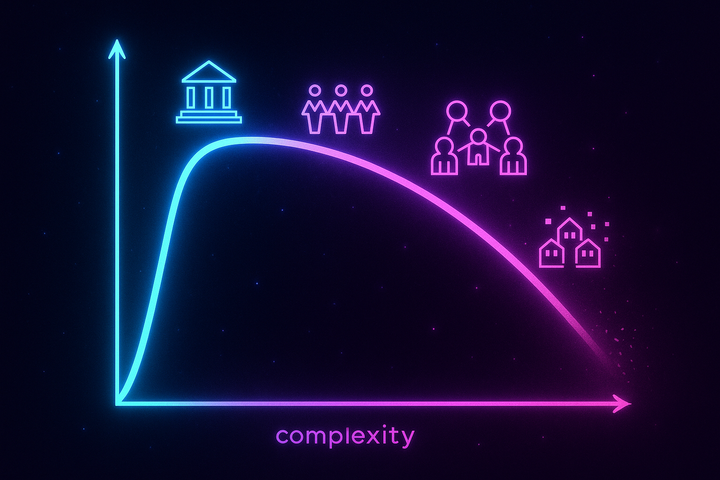

Scale creates qualitative changes. When you see the same opinion from a thousand accounts, it feels like consensus. When a hashtag trends, it feels organic. When your feed fills with a narrative, it feels like the world believes it.

Humans evolved to read social proof. A million years of evolution trained us to trust what the tribe thinks. Computational propaganda hacks that instinct by simulating tribes that don't exist.

The Attention Economy Vulnerability

Computational propaganda exploits specific features of social media architecture.

Engagement metrics are gameable. Platforms use likes, shares, and comments as signals of content quality. But these metrics measure engagement, not accuracy or importance. Bots can generate engagement at will.

Algorithmic amplification is predictable. Researchers have reverse-engineered what triggers platform algorithms. Once you know the rules, you can game them. Post at optimal times. Generate early engagement. Use triggering keywords. The algorithm responds the same way to authentic popularity and manufactured popularity.
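A toy ranking function makes the point concrete. The formula and weights below are invented for illustration and are not any real platform's algorithm. What matters is that nothing in the inputs records who generated the engagement.

```python
def rank_score(likes, shares, comments, age_hours):
    """Toy engagement score with time decay (hypothetical, for illustration only)."""
    engagement = likes + 2 * shares + 3 * comments
    return engagement / (age_hours + 2) ** 1.5

# The same one-hour-old post, with and without bot-generated likes and shares.
organic = rank_score(likes=40, shares=5, comments=4, age_hours=1)
boosted = rank_score(likes=40 + 500, shares=5 + 100, comments=4, age_hours=1)

print(f"organic score: {organic:.1f}")
print(f"boosted score: {boosted:.1f}")
```

The function only sees counts. Any scoring rule built purely on counts will rank the boosted post higher, whether the extra engagement came from people or from software.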

Verification is asymmetric. Creating a fake account takes seconds. Proving an account is fake takes investigation. Creating a false claim takes seconds. Debunking it takes research. The offense is cheaper than the defense.

Human attention is limited. Even if only 5% of the accounts in a conversation are fake, they can dominate it. They're always online. They never get tired. They respond to every post. Real humans can't match the persistence.
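A back-of-the-envelope calculation shows how a small minority of accounts can dominate by volume. The posting rates here are illustrative assumptions, not measured figures.

```python
def fake_share_of_posts(fake_fraction, fake_posts_per_day, real_posts_per_day):
    """Fraction of all posts in a conversation that come from fake accounts."""
    fake_volume = fake_fraction * fake_posts_per_day
    real_volume = (1 - fake_fraction) * real_posts_per_day
    return fake_volume / (fake_volume + real_volume)

# Assumed rates: a real user posts twice a day on the topic,
# an automated account posts 100 times a day.
share = fake_share_of_posts(
    fake_fraction=0.05, fake_posts_per_day=100, real_posts_per_day=2
)
print(f"{share:.0%} of posts come from 5% of accounts")
```

Under these assumed rates, the 5% of accounts that are fake produce about 72% of what everyone else sees.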

The platforms aren't neutral battlefields. Their architecture advantages the attacker.

Detection and Its Limits

Platforms and researchers have developed detection methods.

Behavioral analysis looks for non-human patterns: posting at inhuman speeds, posting in coordinated bursts, following the same accounts as other suspected bots, identical phrasing across accounts.
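Two of these checks can be sketched in a few lines. This is a minimal illustration, not a production detector: the function names, the window sizes, and the three-account threshold are all assumptions chosen for the example.

```python
from collections import defaultdict

def peak_posts_in_window(timestamps, window_seconds=60):
    """Largest number of posts one account made within any sliding time window."""
    ts = sorted(timestamps)
    peak, start = 0, 0
    for end in range(len(ts)):
        # Shrink the window from the left until it spans less than window_seconds.
        while ts[end] - ts[start] >= window_seconds:
            start += 1
        peak = max(peak, end - start + 1)
    return peak

def coordinated_bursts(posts, window_seconds=10, min_accounts=3):
    """Find time buckets in which many distinct accounts posted together.

    posts: iterable of (account_id, unix_timestamp) pairs.
    Returns a dict mapping bucket index -> set of account ids.
    """
    buckets = defaultdict(set)
    for account, ts in posts:
        buckets[int(ts // window_seconds)].add(account)
    return {b: accts for b, accts in buckets.items() if len(accts) >= min_accounts}

# Hypothetical data: three accounts post within the same 10 seconds, one much later.
sample_posts = [("a", 100), ("b", 103), ("c", 108), ("d", 500)]
bursts = coordinated_bursts(sample_posts)
```

Fixed buckets miss bursts that straddle a bucket boundary, so a real system would use overlapping windows. Humans also post in bursts during live events, which is why signals like these are treated as evidence rather than proof.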

Network analysis maps relationships between accounts. Coordinated operations often show distinctive network structures—accounts that all follow each other, accounts that all share from the same sources, clusters that activate simultaneously.
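One way to look for accounts that all share from the same sources is to link accounts whose source sets overlap heavily, then pull out the connected groups. The sketch below assumes a hypothetical mapping from account IDs to the set of domains each has shared; the 0.8 overlap threshold is arbitrary.

```python
from itertools import combinations

def jaccard(a, b):
    """Overlap between two sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def co_sharing_clusters(shared_sources, min_overlap=0.8):
    """Group accounts whose sets of shared sources overlap heavily.

    shared_sources: dict mapping account_id -> set of domains shared.
    Returns a list of clusters (sets of account ids) with more than one member.
    """
    # Build an undirected graph: link two accounts when their source sets overlap.
    neighbors = {acct: set() for acct in shared_sources}
    for a, b in combinations(shared_sources, 2):
        if jaccard(shared_sources[a], shared_sources[b]) >= min_overlap:
            neighbors[a].add(b)
            neighbors[b].add(a)

    # Collect connected components with a depth-first search.
    seen, clusters = set(), []
    for acct in neighbors:
        if acct in seen:
            continue
        stack, component = [acct], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(neighbors[node] - seen)
        if len(component) > 1:
            clusters.append(component)
    return clusters

# Hypothetical data: "a" and "b" share identical sources, "c" mostly overlaps.
sample = {
    "a": {"x.example", "y.example", "z.example"},
    "b": {"x.example", "y.example", "z.example"},
    "c": {"x.example", "y.example", "z.example", "w.example"},
    "d": {"q.example"},
}
tight = co_sharing_clusters(sample)
loose = co_sharing_clusters(sample, min_overlap=0.7)
```

The pairwise comparison is quadratic in the number of accounts, which is fine for a sketch but would need indexing or sampling at platform scale.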

Content analysis examines what's being said. Propaganda operations often use recycled content, identical talking points, or machine-generated text with telltale patterns.
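Recycled content and identical talking points can be caught with a simple near-duplicate check. The sketch below compares posts by their overlapping three-word sequences ("shingles"). It is one common approach, shown here as an assumption about how such a check might look, not a description of any platform's method.

```python
import re

def shingles(text, k=3):
    """Set of k-word sequences after lowercasing and stripping punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def similarity(text_a, text_b, k=3):
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(text_a, k), shingles(text_b, k)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# Hypothetical posts: same talking point with cosmetic changes, then an unrelated one.
same = similarity(
    "The election was rigged, share this now!",
    "the election was rigged share this NOW",
)
different = similarity("cats are great pets", "the stock market fell today")
```

Cosmetic edits to case and punctuation do not change the score, so the first pair comes out identical. Paraphrased or machine-rewritten text defeats this kind of check, which is part of why content analysis alone is not enough.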

Machine learning classifiers combine these signals. Platforms claim to remove millions of fake accounts monthly.
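As a rough picture of how behavioral, network, and content signals get combined, the sketch below feeds three hypothetical features into a logistic function. The feature names, weights, and bias are made up for illustration; a real classifier would learn them from labeled accounts and use many more features.

```python
import math

# Hypothetical weights; in practice these would be learned, not hand-set.
WEIGHTS = {
    "peak_posts_per_minute": 0.4,   # behavioral signal
    "cluster_size": 0.15,           # network signal
    "duplicate_ratio": 3.0,         # content signal
}
BIAS = -4.0

def bot_probability(features):
    """Combine feature values into a score between 0 and 1 with a logistic function."""
    z = BIAS + sum(WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS)
    return 1 / (1 + math.exp(-z))

quiet_account = {"peak_posts_per_minute": 1, "cluster_size": 0, "duplicate_ratio": 0.0}
noisy_account = {"peak_posts_per_minute": 10, "cluster_size": 20, "duplicate_ratio": 0.9}

quiet_score = bot_probability(quiet_account)
noisy_score = bot_probability(noisy_account)
```

No single feature decides the outcome. An account that posts fast but shares original content in no particular cluster scores lower than one that trips all three signals.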

But detection faces fundamental limits.

Adversarial evolution. When platforms publish detection methods, operators adapt. Each improvement triggers counter-improvement. The arms race never ends.

False positives. Aggressive bot removal catches real accounts. Real humans sometimes post rapidly, sometimes use similar language, sometimes share the same content. Every false positive erodes user trust.
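The false-positive problem is largely a base-rate problem, and a short calculation shows why. All three rates below are assumptions picked for the example.

```python
def flagged_precision(bot_rate, true_positive_rate, false_positive_rate):
    """Of all accounts the detector flags, the fraction that really are bots."""
    flagged_bots = bot_rate * true_positive_rate
    flagged_humans = (1 - bot_rate) * false_positive_rate
    return flagged_bots / (flagged_bots + flagged_humans)

# Assumed: 5% of accounts are bots, the detector catches 95% of them,
# and it wrongly flags 2% of real humans.
p = flagged_precision(bot_rate=0.05, true_positive_rate=0.95, false_positive_rate=0.02)

total_accounts = 100_000_000
humans_flagged = total_accounts * (1 - 0.05) * 0.02

print(f"{p:.0%} of flagged accounts are bots")
print(f"{humans_flagged:,.0f} real users wrongly flagged")
```

Under these assumptions, a detector that sounds accurate still flags real people about 29% of the time, which on a platform of 100 million accounts means 1.9 million humans caught by mistake.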

Resource asymmetry. Platforms have large but finite moderation resources. Attackers can create accounts faster than platforms can remove them. The economics favor the attacker.

The cyborg problem. Hybrid accounts with real humans in the loop are genuinely difficult to distinguish from authentic accounts. How do you tell the difference between someone who really believes something and someone paid to pretend they believe it?

Detection is necessary but insufficient. We can't moderate our way out of computational propaganda.

State-Sponsored Operations

The most sophisticated operations come from nation-states with intelligence resources.

Russia's Internet Research Agency pioneered large-scale social media manipulation. Its operations targeted U.S. elections, European politics, and numerous other objectives. It operated sockpuppet accounts posing as Americans across the political spectrum, not just supporting one side but inflaming both to increase polarization.

China's operations focus on different objectives: suppressing discussion of Taiwan, Tibet, and Xinjiang; promoting positive narratives about China; and harassing critics. Their scale is massive but their techniques are often less subtle.

Iran's operations target Middle Eastern politics and U.S. policy toward Iran. They've been caught posing as American progressives critical of U.S. foreign policy.

Saudi Arabia's operations have targeted dissidents, journalists, and regional rivals. Reporting around the murder of Jamal Khashoggi drew attention to the extensive use of Twitter manipulation against critics.

These aren't isolated incidents. Every major power now has computational propaganda capabilities. It's become as standard as having a foreign ministry or an intelligence agency.

The goal isn't always to convince. Sometimes it's to confuse—to fill the information environment with so much noise that citizens can't figure out what's true. Sometimes it's to exhaust—to make people give up on understanding public affairs. Sometimes it's to polarize—to make domestic enemies hate each other more than they distrust foreign interference.

Domestic Operations

Foreign operations get the headlines, but domestic computational propaganda may be more consequential.

Political campaigns use targeting and automation. The line between legitimate digital campaigning and manipulation is contested. Campaign operatives use A/B testing at scale, micro-targeted ads, and coordinated messaging that blurs into manipulation. When does "getting out the message" become "manufacturing consent"?

Corporate interests run influence operations. Campaigns about climate, pharmaceuticals, and regulation often involve coordinated inauthentic behavior. Companies hire reputation management firms that deploy the same techniques as state actors—fake reviews, astroturfed grassroots movements, coordinated attacks on critics.

Domestic activists sometimes use bot networks to amplify their causes. The same techniques used by foreign adversaries are available to domestic actors. The tools don't discriminate between causes.

Commercial operations sell bot services to anyone who pays. Want 10,000 followers? $50. Want a trending hashtag? A few hundred dollars. Want a full influence operation? Prices vary. The marketplace is open and competitive.

The same tools that enable foreign interference enable domestic manipulation. The infrastructure doesn't care about the user's nationality or intentions. This means the threat isn't just foreign adversaries—it's anyone with modest resources and motivation to shape opinion.

The AI Escalation

Large language models change the game again.

Previous computational propaganda required either crude automation or expensive human labor. LLMs offer a middle path: content that sounds human, generated at machine speeds, at minimal cost.

Synthetic personas are now possible—AI-generated profile pictures, AI-written bios, AI-produced posts and comments. Not just bot networks but entire fake humans, complete and consistent. Each persona can have a backstory, a posting history, opinions that evolve over time—all generated automatically.

Conversational engagement at scale becomes feasible. Previously, bots could only broadcast; they couldn't really engage in back-and-forth. AI enables responsive interaction with targets. An LLM can argue, respond to objections, adjust its tactics based on the conversation—tasks that previously required human operators.

Content volume increases by an order of magnitude or more. An operation that might have produced hundreds of posts daily can now produce thousands, each one unique, each one tailored. The marginal cost of each additional piece of content approaches zero.

Personalized targeting becomes practical. AI can analyze a target's posting history and generate content specifically designed to appeal to that individual. Mass manipulation becomes individually customized.

We're only beginning to see AI-powered influence operations. The detection challenges are immense. If generated text is indistinguishable from human writing, content analysis stops working, and behavioral and network analysis become the only remaining detection methods.

The arms race is asymmetric. Defenders must catch every attack; attackers only need to succeed occasionally. Every improvement in AI capabilities advantages the attacker more than the defender. We're entering an era where you may never be certain whether you're talking to a human or an influence operation.

The Takeaway

Computational propaganda is propaganda industrialized—influence operations executed by software at scales and speeds impossible for humans alone.

The techniques are well-documented: bot networks, amplification, astroturfing, coordinated inauthentic behavior. The operations follow predictable playbooks. State and non-state actors deploy them routinely.

You're not just competing with other humans for your beliefs. You're competing with software designed to shape them.

Detection helps but can't solve the problem. The architecture of social media advantages attackers. The economics favor manipulation over verification.

Understanding this is necessary for navigating the information environment. When you see apparent consensus, ask whether it's real. When narratives spread rapidly, consider who benefits. When engagement seems organic, remember it might be engineered.

The machines are talking. Learning to recognize their voice is a survival skill.

Further Reading

- Woolley, S. C., & Howard, P. N. (2018). Computational Propaganda: Political Parties, Politicians, and Political Manipulation on Social Media. Oxford University Press.
- DiResta, R. (2018). "Computational Propaganda." Yale Review.
- Bradshaw, S., & Howard, P. N. (2019). "The Global Disinformation Order." Oxford Internet Institute.

This is Part 7 of the Propaganda and Persuasion Science series. Next: "Propaganda Defense"
