From o1 to o3: How OpenAI Discovered Inference Scaling

Series: Test-Time Compute Scaling | Part: 2 of 9

The story of how OpenAI discovered test-time compute scaling isn't a story of planned research proceeding according to hypothesis. It's a story of surprising results, paradigm violations, and researchers noticing something that shouldn't have worked—but did.

By late 2023, OpenAI had a problem. GPT-4 was powerful but plateauing on certain tasks. Competitive programming. Advanced mathematics. Multi-step scientific reasoning. No matter how much the model was fine-tuned, performance on these complex reasoning tasks seemed to hit a ceiling.

The standard playbook said: train a bigger model. But what if there was another way?

This is the origin story of o1, o3, and the discovery that inference scales like training. It's the story of how thinking longer became as important as thinking bigger.

The Pre-History: Chain-of-Thought and Process Supervision

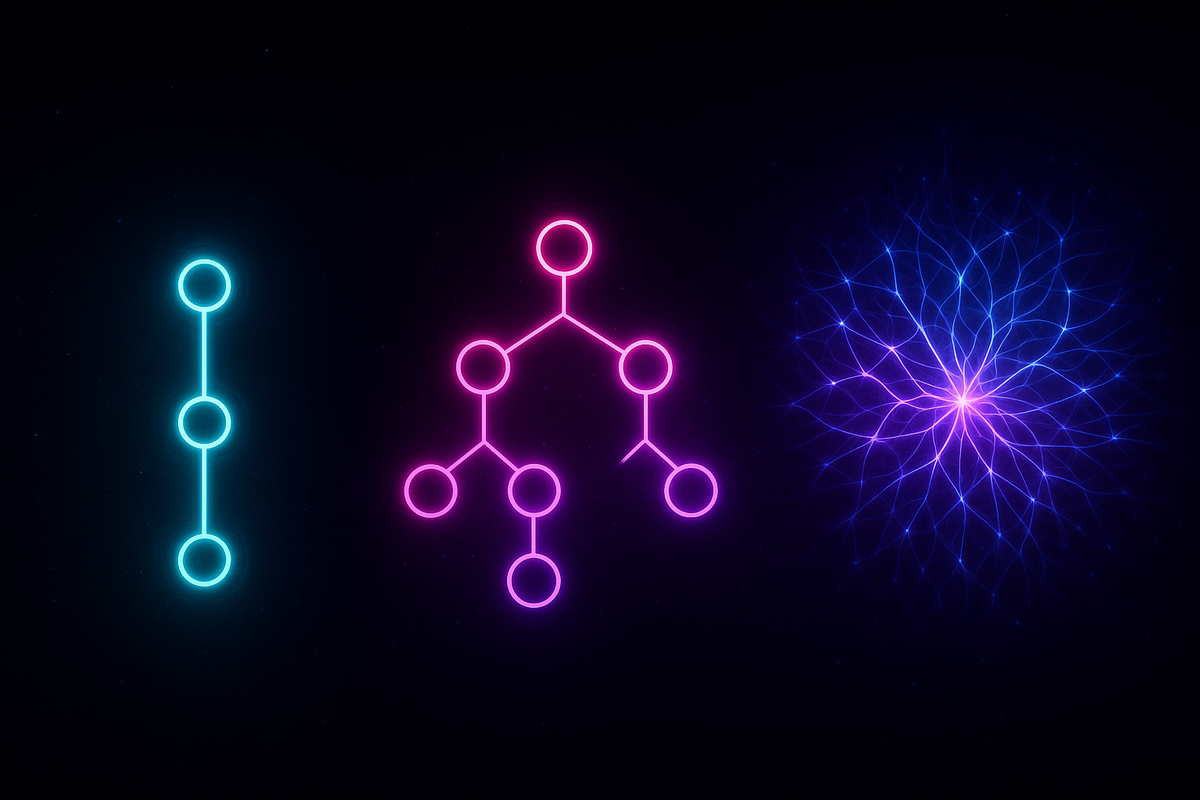

To understand o1, you need to understand what came before it. Two key developments laid the groundwork:

Chain-of-Thought Prompting (2022)

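In 2022, researchers at Google showed something simple but powerful: if a language model writes out intermediate reasoning steps before answering, performance on reasoning tasks jumps dramatically. The original work (Wei et al., 2022) elicited those steps by including worked examples in the prompt; a follow-up the same year (Kojima et al., 2022) found that simply appending "Let's think step by step" often has a similar effect.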

This was chain-of-thought (CoT) prompting. Instead of:

- Input: "What's 37 × 24?"

- Output: "888"

You do:

- Input: "What's 37 × 24? Let's think step by step."

- Output: "First, 37 × 20 = 740. Then 37 × 4 = 148. Adding those: 740 + 148 = 888."

The intermediate reasoning made the model more accurate. But why?

The prevailing explanation was that CoT helped models access knowledge they already had. By generating intermediate steps, the model made connections it would otherwise miss. Thinking step by step wasn't creating new capability—it was surfacing latent capability.

But this framing missed something crucial: CoT was actually computation at inference time. Each reasoning step was work being done. And more work meant better results.
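To make "more work" concrete: a common rule of thumb is that a dense transformer with N parameters spends roughly 2N floating-point operations per generated token in its forward pass (ignoring the attention cost that grows with context length). The minimal sketch below applies that approximation to the 37 × 24 example. The model size and token counts are hypothetical, chosen only for illustration.

```python
def inference_flops(num_params: float, generated_tokens: int) -> float:
    """Approximate forward-pass FLOPs to generate tokens with a dense transformer.

    Uses the ~2 * N FLOPs-per-token rule of thumb and ignores attention cost
    that grows with context length, so treat the result as a rough estimate.
    """
    return 2.0 * num_params * generated_tokens


# Hypothetical model size and token counts, for illustration only.
PARAMS = 70e9               # assumed 70B-parameter dense model
direct_answer_tokens = 2    # roughly "888"
cot_answer_tokens = 30      # roughly "First, 37 x 20 = 740. Then 37 x 4 = 148. ..."

direct = inference_flops(PARAMS, direct_answer_tokens)
cot = inference_flops(PARAMS, cot_answer_tokens)
print(f"direct: {direct:.2e} FLOPs, chain of thought: {cot:.2e} FLOPs")
print(f"chain of thought spends {cot / direct:.0f}x more compute on the same question")
```

Under this approximation, compute scales linearly with the number of tokens generated, so every reasoning step the model writes out is compute it spends.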

Process Reward Models (2023)

The second development was process supervision. Instead of rewarding a model only for correct final answers (outcome supervision), researchers gave feedback on each intermediate reasoning step (process supervision) and used that feedback to train a reward model that scores every step.

This required training data in which each step of a solution was labeled as correct or incorrect. A reward model trained this way learns not just whether the final answer is right, but what good reasoning looks like, and it can then be used to guide or rerank a generator's solutions.

OpenAI's 2023 math work (Lightman et al., "Let's Verify Step by Step") showed that process supervision significantly outperformed outcome supervision on the MATH benchmark. But it required something new: the model had to show its work.

This meant reasoning couldn't be implicit—it had to be explicit, step by step, checkable. And that meant spending more tokens (and therefore more compute) on the reasoning process itself.
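The difference between the two kinds of supervision shows up in how candidate solutions get scored. Here is a minimal sketch, not OpenAI's implementation. An outcome reward model (ORM) gives each full solution one score. A process reward model (PRM) gives each step a probability of being correct, and here those are combined as a product of step probabilities, which is one common choice; taking the minimum step score is another. All scores are made-up numbers standing in for a trained model's outputs.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Candidate:
    final_answer: int
    outcome_score: float      # made-up ORM score for the whole solution
    step_scores: list[float]  # made-up PRM probability that each step is correct


def orm_score(c: Candidate) -> float:
    """Outcome supervision: one score for the finished solution."""
    return c.outcome_score


def prm_score(c: Candidate) -> float:
    """Process supervision: probability that every step is correct,
    treating step judgments as independent (product aggregation)."""
    return prod(c.step_scores)


def best_of_n(candidates: list[Candidate], score) -> Candidate:
    """Rerank N sampled solutions and keep the highest-scoring one."""
    return max(candidates, key=score)


# Two sampled solutions to "What's 37 x 24?" (scores are illustrative only).
sound = Candidate(888, outcome_score=0.80, step_scores=[0.98, 0.97, 0.99])
# This one writes 37 x 4 = 158, then adds 740 + 158 = 898.
flawed = Candidate(898, outcome_score=0.85, step_scores=[0.98, 0.20, 0.95])

pool = [sound, flawed]
print("ORM picks:", best_of_n(pool, orm_score).final_answer)  # prints "ORM picks: 898"
print("PRM picks:", best_of_n(pool, prm_score).final_answer)  # prints "PRM picks: 888"
```

The point is not that outcome scores always fail. A per-step score can flag the one bad step that an end-to-end score glosses over, and that only works if the reasoning is written out.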

The o1 Experiments: What Happened When They Kept Scaling Inference

Somewhere in late 2023 or early 2024, OpenAI researchers started pushing on a simple question: what if we let models think for much longer?

Not just "think step by step" in a prompt, but a model trained to spend significant compute on reasoning before producing answers.

The hypothesis was incremental: maybe longer reasoning would produce marginal improvements. What they found was transformative: reasoning scaled like training.

The Basic Architecture

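OpenAI has said that o1 is trained with large-scale reinforcement learning to reason in a long chain of thought, but it hasn't published full technical details. Based on its public descriptions, the rough process appears to work like this: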

1. Problem ingestion: The model receives a problem or question.

2. Extended reasoning phase: Instead of immediately generating an answer, the model enters a search process where it:

   - Generates multiple possible reasoning paths

   - Evaluates which paths seem promising

   - Backtracks and explores alternatives

   - Checks reasoning steps for consistency

3. Answer synthesis: After extended deliberation, the model produces a final answer based on the most coherent reasoning path.

4. Verification (optional): The model can verify its own answer through alternative approaches.

The key innovation: time spent in step 2, the extended reasoning phase, is tunable. You can allocate more or less compute to reasoning depending on task difficulty.
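OpenAI has not disclosed how o1 searches, so the following is only an analogy. It runs a depth-first search with backtracking on a toy problem: combine four numbers with +, -, ×, and ÷ to reach 24. Each partial combination plays the role of a reasoning step, a final check plays the role of verification, and a `budget` parameter caps how many steps the search may take. The function `solve_with_budget` and its state-counting scheme are hypothetical. The point is that the same procedure succeeds or fails depending only on how much compute it is allowed.

```python
from fractions import Fraction
from itertools import combinations


def solve_with_budget(numbers, target=24, budget=1000):
    """Depth-first search with backtracking, capped at `budget` expanded states.

    Returns (expression or None, states_used). Each expanded state is one
    "reasoning step": pick two numbers, combine them, recurse on the rest.
    """
    used = 0

    def search(items):
        # items: list of (exact value, expression string) pairs
        nonlocal used
        if used >= budget:
            return None  # out of compute: abandon this branch
        used += 1
        if len(items) == 1:
            value, expr = items[0]
            return expr if value == target else None  # verify the final answer
        for i, j in combinations(range(len(items)), 2):
            (a, ea), (b, eb) = items[i], items[j]
            rest = [items[k] for k in range(len(items)) if k not in (i, j)]
            options = [(a + b, f"({ea}+{eb})"), (a * b, f"({ea}*{eb})"),
                       (a - b, f"({ea}-{eb})"), (b - a, f"({eb}-{ea})")]
            if b != 0:
                options.append((a / b, f"({ea}/{eb})"))
            if a != 0:
                options.append((b / a, f"({eb}/{ea})"))
            for value, expr in options:
                found = search(rest + [(value, expr)])
                if found:
                    return found
            # no option for this pair worked: backtrack and try the next pair
        return None

    start = [(Fraction(n), str(n)) for n in numbers]
    return search(start), used


for budget in (10, 100, 10_000):
    expr, used = solve_with_budget([3, 3, 8, 8], budget=budget)
    print(f"budget={budget:>6}: states used={used:>5}, answer={expr}")
```

The puzzle [3, 3, 8, 8] has essentially one solution, 8 / (3 - 8/3). A small budget gives up before reaching it, and a large enough budget is guaranteed to find it. Exact `Fraction` arithmetic keeps the final check from being fooled by floating-point rounding.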

The Scaling Discovery

What shocked researchers was the scaling curve. Performance didn't plateau as reasoning time increased. It kept improving roughly linearly with the logarithm of compute, so each doubling bought a similar gain.

On competitive programming tasks (Codeforces), o1 showed:

- 3 seconds of reasoning: ~60th percentile performance

- 30 seconds of reasoning: ~80th percentile

- 5 minutes of reasoning: ~90th percentile

On mathematics (AIME 2024):

- GPT-4o (no extended reasoning): ~12% of problems solved

- o1-preview: ~45% of problems solved

- o1: ~74% with a single sample per problem, rising to ~83% with consensus over 64 samples

This wasn't a plateau. It was a new scaling dimension. Just as accuracy rises roughly linearly with the logarithm of training compute, it rises roughly linearly with the logarithm of inference compute.

And crucially: inference compute is more flexible than training compute. Instead of paying up front for a bigger model that every query must use, you can allocate it per query based on need.
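Scaling of this kind is usually plotted as accuracy against the logarithm of compute. If accuracy is roughly a + b·log2(C) for compute C, then every doubling adds about b points, whether you start from 1 unit or 1,000. The minimal sketch below fits that line by ordinary least squares. The data points are invented to show the shape and are not OpenAI's measurements.

```python
from math import log2


def fit_log_linear(compute, accuracy):
    """Least-squares fit of accuracy = a + b * log2(compute).

    Returns (a, b); b is the expected gain per doubling of compute.
    """
    xs = [log2(c) for c in compute]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accuracy) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracy))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Invented (relative compute, accuracy %) pairs with a log-linear shape.
compute = [1, 4, 16, 64, 256]
accuracy = [20.0, 30.0, 40.0, 50.0, 60.0]

a, b = fit_log_linear(compute, accuracy)
print(f"gain per doubling: {b:.1f} points")              # prints "gain per doubling: 5.0 points"
print(f"predicted at 1024x: {a + b * log2(1024):.1f}%")  # prints "predicted at 1024x: 70.0%"
```

The same shape carries a cost. The gain per doubling is constant, so each additional point costs exponentially more compute. And extrapolating a fitted line beyond the measured range is exactly where such curves can break.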

From o1-preview to o1 to o3: The Progression

OpenAI's rollout of reasoning models happened in stages, each revealing more about the scaling properties:

o1-preview (September 2024)

The first public demonstration. o1-preview was:

- Significantly slower than GPT-4o

- Dramatically better at complex reasoning

- Capable of showing only a summary of its chain of thought, with the raw reasoning hidden

- Limited in certain ways (no web browsing, no file or image uploads)

The message was clear: this was a different kind of model. Trading speed for capability through extended thinking.

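Performance highlights from the September 2024 launch post (several figures describe the full o1 model, which was announced alongside o1-preview but released later):

- Codeforces competitive programming: 62nd percentile for o1-preview and 89th for o1, vs GPT-4o's 11th percentile

- GPQA (physics, biology, and chemistry questions): accuracy exceeding that of human PhD-level experts

- American Invitational Mathematics Examination (AIME): o1 scored among the top 500 US students when re-ranking 1,000 samples with a learned scoring function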

This wasn't marginal improvement. It was a capability jump of the kind that would previously have been expected to require a much larger model.

o1 (December 2024)

The full release. o1 improved on o1-preview by:

- Faster inference (optimized reasoning overhead)

- Better calibration of when to think long vs short

- Broader capability across domains

- More refined chain-of-thought presentation

Importantly, o1 introduced reasoning effort controls in the API: a reasoning_effort setting of low, medium, or high lets you specify how much thinking you want the model to do. Low effort for simple queries, high effort for complex problems.

This made the economic trade-off explicit: think faster and cheaper, or think longer and better. Your choice.
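OpenAI's API exposes this control as a `reasoning_effort` request field for its o-series reasoning models, with the values "low", "medium", and "high". The sketch below only builds a Chat Completions request body and makes no network call. It picks the effort level from a difficulty score. The `estimate_difficulty` heuristic, its keyword list, and its thresholds are hypothetical stand-ins for whatever difficulty signal an application actually has.

```python
import json

# Hypothetical signals of a hard problem; a real system would use a learned estimator.
HARD_HINTS = ("prove", "optimize", "algorithm", "derive", "how many ways")


def estimate_difficulty(prompt: str) -> float:
    """Toy difficulty score in [0, 1] from prompt length and keyword hints."""
    text = prompt.lower()
    length_signal = min(len(text) / 2000, 1.0)
    hint_signal = min(sum(h in text for h in HARD_HINTS) / 2, 1.0)
    return 0.5 * length_signal + 0.5 * hint_signal


def choose_effort(difficulty: float) -> str:
    """Map a difficulty score to an effort level (thresholds are arbitrary)."""
    if difficulty < 0.2:
        return "low"
    if difficulty < 0.5:
        return "medium"
    return "high"


def build_request(prompt: str, model: str = "o1") -> dict:
    """Build a Chat Completions request body with a reasoning_effort setting."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": choose_effort(estimate_difficulty(prompt)),
    }


print(json.dumps(build_request("What's 37 x 24?"), indent=2))
print(build_request("Prove that this algorithm terminates and derive its running time.")["reasoning_effort"])
```

Reasoning tokens are billed as output tokens even though the API does not return them. In effect, the effort level is also a price setting.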

o3 (announced December 2024)

The progression continued with o3, which pushed test-time compute scaling further:

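- On ARC-AGI (a benchmark designed to resist memorization): o3 scored 75.7% on the semi-private evaluation set in a lower-compute configuration and 87.5% in a high-compute configuration using roughly 172x more compute, far beyond previous AI results

- On math: 96.7% on AIME 2024, and 25.2% on the research-level FrontierMath benchmark

- On coding: a reported Codeforces Elo rating of 2727, well into the top tier of human competitive programmers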

What made o3 notable wasn't just raw performance. It was the explicit demonstration that the longer you let it think, the better it gets, with no ceiling yet visible on the reported benchmarks.

Some problems saw improvement when given:

- 10x more compute (significant gains)

- 100x more compute (continued gains)

- 1000x more compute (still improving)

This suggested that test-time compute scaling might be practically unlimited—constrained only by economics, not by architectural limitations.

What OpenAI Learned: Key Insights

The progression from o1 to o3 revealed several deep insights about intelligence and computation:

1. Reasoning Is Compute, Not Just Retrieval

The old model: intelligence lives in the weights. Inference is just reading out what was learned during training.

The new model: intelligence emerges from search processes during inference. The weights provide a base capability, but the real work happens when the model explores reasoning space.

This is a paradigm shift. It means models can be "smarter at inference time" than their training would suggest, if you give them enough compute to search thoroughly.

2. There's a Minimum Capability Threshold

Not all models benefit equally from test-time compute scaling. You need a base model that's already competent at multi-step reasoning. Below that threshold, extended thinking doesn't help—the model just generates longer nonsense.

But above the threshold, scaling is robust. This suggests:

- For weaker models: invest in more training

- For strong models: invest in more inference compute

The transition point seems to be somewhere around GPT-3.5/GPT-4 level capability. Below that, scale training. Above that, you have a choice.

3. Search Requires Verifiers

Extended reasoning only works if the model can evaluate its own reasoning steps. This requires:

- Self-verification capability (checking if reasoning makes sense)

- Backtracking when reasoning leads to contradictions

- Alternative path generation when initial approaches fail

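These are learned behaviors. OpenAI says o1 learns through reinforcement learning to recognize and correct its mistakes and to try a different approach when one isn't working; process supervision may also play a role. Once present, these behaviors enable effective search through reasoning space.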
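A back-of-the-envelope calculation shows why the verifier matters as much as the generator. Suppose each independent attempt is correct with probability p. Then the chance that at least one of N attempts is correct is 1 − (1 − p)^N, but that coverage is only useful if something can tell which attempt is right. The sketch models "sample until the verifier accepts" with an external checker standing in for the model's self-verification. It compares a perfect verifier with a noisy one that sometimes rejects correct attempts and sometimes accepts wrong ones. The values of p, N, and the error rates are illustrative assumptions. The calculation also treats attempts as independent, which real samples are not.

```python
def success_rate(p: float, n: int, tpr: float = 1.0, fpr: float = 0.0) -> float:
    """Probability that "sample until the verifier accepts" returns a correct answer.

    p:   chance a single attempt is correct (attempts assumed independent)
    n:   maximum number of attempts (the inference budget)
    tpr: chance the verifier accepts a correct attempt
    fpr: chance the verifier accepts a wrong attempt
    """
    accept_right = p * tpr
    accept_wrong = (1 - p) * fpr
    reject = 1 - accept_right - accept_wrong
    if reject == 0:  # every attempt is accepted, so only the first one matters
        return accept_right
    # Sum of reject**k * accept_right for k = 0 .. n-1 (a geometric series).
    return accept_right * (1 - reject ** n) / (1 - reject)


for n in (1, 10, 100):
    perfect = success_rate(p=0.1, n=n)
    noisy = success_rate(p=0.1, n=n, tpr=0.9, fpr=0.05)
    print(f"N={n:>3}: perfect verifier {perfect:.3f}, noisy verifier {noisy:.3f}")
```

With a perfect verifier, success approaches 1 as N grows. With the noisy one, it levels off near p·tpr / (p·tpr + (1 − p)·fpr), about 0.67 under these assumptions, no matter how large N gets. Past that point, extra search compute is wasted until verification improves.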

4. The Economic Sweet Spot Is Dynamic

Different problems warrant different compute allocation. Simple questions should get minimal thinking time. Hard problems should get extended deliberation.

This means optimal inference systems need:

- Difficulty estimation: predict how hard a problem is

- Dynamic compute allocation: assign reasoning time based on difficulty

- Early stopping: halt reasoning when confidence reaches a threshold

The future isn't "think for 5 minutes on everything"—it's "think for however long this specific problem needs."
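Early stopping can be sketched with self-consistency voting. The system keeps sampling answers and stops as soon as one answer holds a large enough share of the votes, up to a maximum budget. This is a swapped-in, well-known technique used for illustration, not a description of how o1 allocates compute. The samplers `easy` and `hard` are hypothetical stand-ins for one reasoning attempt each, and the thresholds are arbitrary.

```python
import random
from collections import Counter
from typing import Callable


def adaptive_vote(sample_answer: Callable[[], str], min_samples: int = 3,
                  max_samples: int = 40, threshold: float = 0.8):
    """Sample until one answer has at least `threshold` of the votes
    (after `min_samples`), or until `max_samples` is reached.

    Returns (answer, samples_used).
    """
    votes = Counter()
    for used in range(1, max_samples + 1):
        votes[sample_answer()] += 1
        answer, count = votes.most_common(1)[0]
        if used >= min_samples and count / used >= threshold:
            return answer, used  # confident enough: stop early
    return votes.most_common(1)[0][0], max_samples


def easy() -> str:
    return "888"  # hypothetical problem the model always answers the same way


rng = random.Random(0)


def hard() -> str:
    return rng.choice(["17", "17", "17", "19", "23"])  # hypothetical noisy solver


print(adaptive_vote(easy))  # prints ('888', 3)
print(adaptive_vote(hard))
```

Easy queries stop after the minimum three samples, while contested ones can consume the full budget. This is the "think for however long this problem needs" behavior in miniature, with agreement among samples serving as a crude confidence signal.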

Why This Took So Long to Discover

If test-time compute scaling works so well, why didn't it happen earlier? Several factors:

Model Capability Gates

Earlier models (pre-GPT-4) weren't capable enough to benefit. Asking GPT-2 to "think harder" doesn't help because it can't do multi-step reasoning reliably.

There's a minimum capability threshold where search becomes productive. OpenAI only crossed that threshold recently.

Training for Reasoning

Models need to be explicitly trained to reason step-by-step and verify their own steps. This requires:

- Process supervision datasets (expensive to create)

- Reinforcement learning from reasoning traces (technically complex)

- Verification mechanisms (non-trivial to implement)

These techniques matured slowly. Process supervision only became practical around 2023.

Paradigm Lock-In

The field was organized around "scale the model" as the primary lever. Research funding, infrastructure investment, and competitive dynamics all reinforced that paradigm.

Test-time compute scaling required questioning that paradigm. It required saying: "Maybe we've been scaling the wrong thing."

Misaligned Economic Incentives

Under the old model, inference was optimized for cost and speed—not quality. Companies wanted fast, cheap answers. Inference infrastructure was designed accordingly.

The idea that you'd intentionally slow down and make inference more expensive to get better answers violated the economic assumptions.

OpenAI could only pursue this because they had both:

- The technical capability (strong enough base models)

- The economic positioning (premium users willing to pay for quality)

What's Next: Speculative Trajectory

The progression from o1 to o3 suggests several near-future developments:

Hybrid System-1/System-2 Architectures

Models that combine fast, intuitive responses with slow, deliberative reasoning. Simple queries trigger System-1 (fast, cheap). Complex problems trigger System-2 (slow, deep).

This mirrors human cognition: you don't consciously reason about every action, but you can when needed.

Learned Compute Allocation

Instead of users manually setting "high" or "low" reasoning effort, models learn to predict how much thinking a problem needs and allocate accordingly.

This requires meta-learning: learning to estimate problem difficulty and the returns to additional reasoning time.

Inference-Time Learning

If models can reason about problems, they can potentially learn from their own reasoning traces. This opens the door to:

- Few-shot learning during inference: showing the model examples and having it learn a new task on the fly

- Self-improvement loops: the model reasons, checks its reasoning, learns from errors, and reasons better

- Personalization: adapting to individual users through accumulated inference-time experience

Collaborative Reasoning

Multiple models (or multiple instances of one model) working together:

- Generating diverse reasoning paths in parallel

- Cross-checking each other's work

- Debating interpretations until convergence

This is inference-time ensembling, but smarter—not just averaging outputs, but actually having models reason collaboratively.

The Coherence Interpretation

From AToM's perspective, the progression from o1 to o3 demonstrates something fundamental: coherence construction takes time and compute.

When a model engages in extended reasoning, it's not just "thinking harder"—it's searching through coherence space. Each reasoning path is a potential trajectory. Most paths lead to inconsistency (the math doesn't work, the logic contradicts itself, the conclusion doesn't follow). These get pruned.

The paths that survive are coherent: they maintain consistency across steps, integrate all constraints, resolve apparent contradictions.

The longer the search, the more thoroughly the model explores coherence space, and the more likely it is to find high-quality solutions. This is exactly what AToM predicts: meaning emerges from coherence, and coherence emerges from search.

The mechanism behind the scaling law is search: more compute allows more thorough search through reasoning space, which finds more coherent (and therefore more correct) solutions.

This isn't magic. It's what every coherent system does. Your own thinking works the same way—you consider possibilities, check them for consistency, backtrack when they fail, and converge on interpretations that make sense across multiple frames.

Test-time compute scaling is that process formalized in silicon.

This is Part 2 of the Test-Time Compute Scaling series.

Previous: The New Scaling Law: Why Thinking Harder Beats Training Bigger

Next: Chain of Thought on Steroids: The Mechanics of Extended Reasoning

Further Reading

- OpenAI (2024). "Learning to Reason with LLMs." OpenAI Blog.

- Cobbe, K., et al. (2021). "Training Verifiers to Solve Math Word Problems." arXiv preprint.

- Wei, J., et al. (2022). "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." NeurIPS.

- Snell, C., et al. (2024). "Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters." arXiv preprint.
