Hierarchical Active Inference: Scaling to Complex Tasks

Series: Active Inference Applied | Part: 8 of 10

You're making coffee. Your hand reaches for the pot. Your fingers wrap around the handle. Muscles contract in sequence, joints articulate, neurons fire in cascades. But you don't think about any of that. You think "make coffee" and somehow your body translates that high-level intention into thousands of coordinated low-level actions.

This is the fundamental problem any intelligent system faces: how to bridge the gap between abstract goals and concrete actions. In active inference terms, it's the problem of temporal depth—how far into the future should an agent plan, and at what level of granularity?

The answer, it turns out, is to not pick a level at all. Build a hierarchy.

The Temporal Binding Problem

Classical active inference, as we've explored in this series, operates by minimizing expected free energy over some planning horizon. The agent builds a generative model of how the world works, uses it to predict what will happen under different action sequences, and selects actions that minimize surprise while seeking information.

But there's a catch. The deeper you plan (the further into the future you project), the more computationally expensive inference becomes. With |A| actions available at each step, a planning horizon of T timesteps means evaluating |A|^T possible action sequences in the worst case, a count that grows exponentially with the horizon. Even with clever message passing algorithms, this explodes rapidly.
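Counting the policies directly shows how quickly the space grows. This is a minimal sketch that assumes a fixed set of discrete actions available at every step; the action names are hypothetical. Enumerating every sequence with `itertools.product` yields exactly |A|^T policies.

```python
from itertools import product

def enumerate_policies(actions, horizon):
    """Return every action sequence of length `horizon` (the full policy space)."""
    return list(product(actions, repeat=horizon))

def policy_count(num_actions, horizon):
    """Closed-form size of the policy space: |A| ** T."""
    return num_actions ** horizon

actions = ["left", "right", "stay"]  # hypothetical 3-action agent
for T in (1, 2, 4, 8):
    n = len(enumerate_policies(actions, T))
    assert n == policy_count(len(actions), T)  # enumeration matches |A| ** T
    print(f"T={T}: {n} policies")
```

At a horizon of 20 steps the same three-action agent would face 3**20, roughly 3.5 billion, policies, which is why exhaustive evaluation stops being an option long before horizons get interesting.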

More fundamentally, not all decisions operate at the same timescale. When you're navigating a city, you don't plan every muscle contraction required to walk to your destination. You think in terms of waypoints: "head to the coffee shop, then the bookstore, then home." Your navigation system operates at a coarse timescale (minutes to hours), while your motor system handles fine-grained control (milliseconds to seconds).

Call this the temporal binding problem: how to coordinate planning and control across multiple timescales simultaneously. The solution that evolution discovered, and that we're now reverse-engineering into artificial agents, is hierarchy.

Multi-Scale Active Inference Architectures

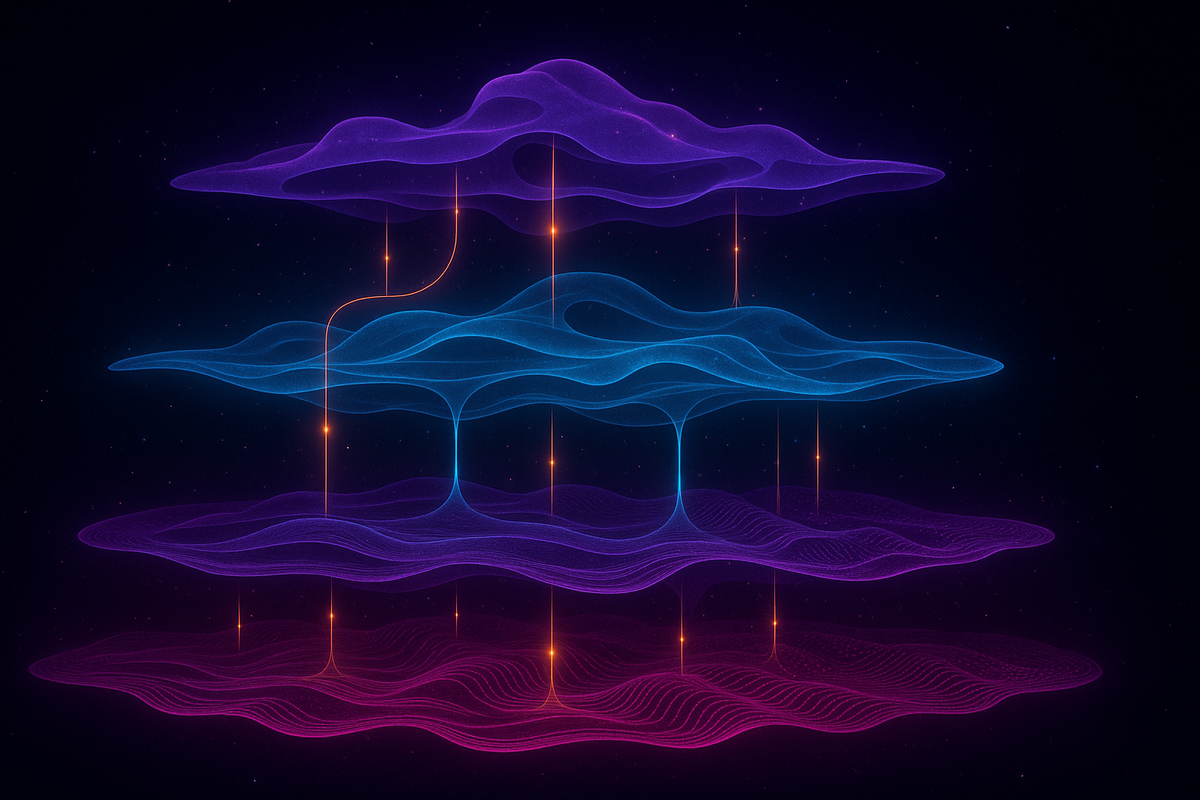

A hierarchical active inference agent isn't one model. It's a stack of models, each operating at a different temporal resolution, each providing context to the level below and abstraction to the level above.

Consider an illustrative three-level architecture, in the spirit of the deep temporal models developed by Friston and colleagues:

Level 1: Abstract, Slow Policy — Plans over long horizons (hours to days) in terms of high-level states. "Today I will: work on the paper, go to the gym, have dinner with friends." The state space is compact—just a few discrete options—so planning is tractable even over extended timescales.

Level 2: Intermediate Plans — Translates abstract intentions into sequences of subgoals. "To work on the paper, I need to: review notes, draft section 3, run the analysis, write the discussion." Operates at the timescale of minutes to hours.

Level 3: Low-Level Control — Executes specific motor commands to achieve immediate subgoals. "To draft section 3, position hands on keyboard, type characters in sequence." Operates at milliseconds to seconds.

The crucial insight is that each level is solving the same problem—minimizing expected free energy—but over different state spaces and different temporal horizons. The higher levels provide prior beliefs to the lower levels, constraining their inference. The lower levels provide prediction errors to the higher levels, signaling when the abstract plan needs revision.
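To make "the same problem at each level" concrete, here is a minimal sketch with NumPy. It assumes discrete states and outcomes, one-step policies, and hand-written matrices; the function name, the home/cafe setup, and the `subgoal_to_prefs` mapping are all hypothetical. Both levels call one expected free energy function (risk plus ambiguity); the high level's choice is handed down as the low level's preferences. Routing the top-down signal through preferences is a simplification; other formulations pass it as priors over the lower level's states or policies.

```python
import numpy as np

def expected_free_energy(qs, A, B, log_C):
    """One-step expected free energy G(a) = risk + ambiguity for each action.

    qs    : belief over hidden states, shape (S,)
    A     : likelihood p(o|s), shape (O, S); each column sums to 1
    B     : transitions p(s'|s, a), shape (num_actions, S, S); columns sum to 1
    log_C : log of a normalized preference distribution over outcomes, shape (O,)
    """
    eps = 1e-16
    ambiguity_per_state = -np.sum(A * np.log(A + eps), axis=0)  # entropy of p(o|s)
    G = np.zeros(B.shape[0])
    for a in range(B.shape[0]):
        qs_next = B[a] @ qs                               # predicted states
        qo = A @ qs_next                                  # predicted outcomes
        risk = np.sum(qo * (np.log(qo + eps) - log_C))    # KL[q(o|a) || C]
        G[a] = risk + ambiguity_per_state @ qs_next
    return G

# High level (slow): states {home, cafe}; actions {stay, go_to_cafe}.
A_hi = np.eye(2)
B_hi = np.stack([np.eye(2), np.array([[0.0, 0.0], [1.0, 1.0]])])
log_C_hi = np.log(np.array([0.1, 0.9]))        # prefers ending up at the cafe
G_hi = expected_free_energy(np.array([1.0, 0.0]), A_hi, B_hi, log_C_hi)
subgoal = int(np.argmin(G_hi))                  # 1 == go_to_cafe

# Low level (fast): positions 0..2 on one street; actions {left, right}.
A_lo = np.eye(3)
left, right = np.zeros((3, 3)), np.zeros((3, 3))
for s in range(3):
    left[max(s - 1, 0), s] = 1.0
    right[min(s + 1, 2), s] = 1.0
B_lo = np.stack([left, right])

# Top-down: the chosen subgoal sets the low level's preferences (hypothetical mapping).
subgoal_to_prefs = {0: np.array([0.8, 0.15, 0.05]),   # stay: remain near position 0
                    1: np.array([0.05, 0.15, 0.8])}   # go_to_cafe: cafe is at position 2
G_lo = expected_free_energy(np.array([1.0, 0.0, 0.0]), A_lo, B_lo,
                            np.log(subgoal_to_prefs[subgoal]))
print(subgoal, int(np.argmin(G_lo)))   # 1 1 -> go to the cafe, then step right
```

Only the matrices and the top-down preference differ between levels. A fuller model would also send the lower level's inferred outcome back up as evidence for the higher level, which this sketch omits.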

This is active inference all the way up and all the way down. But instead of a single monolithic model trying to plan everything at once, you have a cascade of specialized models, each handling the complexity appropriate to its scale.

Precision-Weighted Hierarchical Control

But how exactly do these levels communicate? How does a high-level intention like "work on the paper" get translated into the precise motor commands needed to type?

The answer lies in precision weighting—a mechanism that's become central to understanding hierarchical processing in both biological and artificial systems.

In active inference, precision is the inverse of uncertainty (formally, the inverse variance of a belief or prediction). When a prediction is precise (high precision weight), it's treated as trustworthy. When it's imprecise (low precision weight), it's downweighted during inference. This is how active inference accounts for sensory attention in the brain: you turn up the precision on relevant sensory channels, effectively amplifying their influence on belief updating.
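For Gaussian beliefs, where precision is 1/variance, precision weighting has a closed form: the posterior mean is the prior mean plus the prediction error, scaled by the observation's share of total precision. This minimal sketch uses a hypothetical function name and shows the same prediction error moving belief a lot or a little depending only on sensory precision.

```python
def precision_weighted_update(prior_mean, prior_precision, obs, obs_precision):
    """Fuse a Gaussian prior with a Gaussian observation (precision = 1 / variance).

    The posterior mean equals the prior mean plus the prediction error,
    scaled by the share of total precision that belongs to the observation.
    """
    post_precision = prior_precision + obs_precision
    prediction_error = obs - prior_mean
    post_mean = prior_mean + (obs_precision / post_precision) * prediction_error
    return post_mean, post_precision

# Same prior and same prediction error; only the sensory precision differs.
attended = precision_weighted_update(0.0, 1.0, 10.0, obs_precision=9.0)
ignored = precision_weighted_update(0.0, 1.0, 10.0, obs_precision=0.1)
print(attended)   # (9.0, 10.0): belief moves most of the way to the evidence
print(ignored)    # belief barely moves (about 0.91)
```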

In hierarchical active inference, precision weighting does something even more powerful: it determines the balance of control between levels.

When the high-level model is confident about what should happen next, it provides precise priors to the lower level. The lower level's job becomes constrained: "I don't care how you do it, but you will draft section 3 in the next hour." The low-level controller then fills in the details, executing whatever sequence of actions satisfies that prior.

But when the high-level model is uncertain—when the abstract plan encounters unexpected obstacles—it reduces the precision of its priors. This gives the lower levels more autonomy to explore and adapt. Eventually, the mismatch between what the high-level model predicted and what actually happened (the prediction error) propagates upward, triggering a revision of the higher-level plan.

This is how you can be "on autopilot" during routine activities (high-level priors are precise, low-level systems execute without much interference) but suddenly snap to conscious deliberation when something unexpected happens (precision shifts, higher levels engage).
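The bottom-up half of this story can be sketched as a per-level monitor. In this minimal, hypothetical version, a level estimates the precision of its own prediction errors as the inverse of an exponential moving average of squared errors, and asks the level above to replan when precision-weighted errors stay large for several consecutive steps. The class name and the decay, threshold, and patience values are illustrative; this running-variance heuristic stands in for the full Bayesian precision updates used in active inference models.

```python
class LevelMonitor:
    """Hypothetical prediction-error monitor for one level of a hierarchy."""

    def __init__(self, decay=0.9, threshold=3.0, patience=3, init_var=1.0):
        self.decay = decay          # how quickly the error-variance estimate adapts
        self.threshold = threshold  # precision-weighted error that counts as surprising
        self.patience = patience    # consecutive surprises before escalating
        self.err_var = init_var     # running estimate of squared prediction error
        self.streak = 0

    @property
    def precision(self):
        return 1.0 / self.err_var

    def step(self, predicted, observed):
        """Score one prediction; return True when the level above should replan."""
        error = observed - predicted
        weighted = self.precision * error ** 2   # squared error scaled by precision
        self.streak = self.streak + 1 if weighted > self.threshold else 0
        # Update the variance after scoring, so a sudden surprise is judged
        # against the old (confident) expectation.
        self.err_var = self.decay * self.err_var + (1 - self.decay) * error ** 2
        return self.streak >= self.patience

monitor = LevelMonitor()
routine = [monitor.step(0.0, 0.1) for _ in range(10)]   # autopilot: small errors
surprise = [monitor.step(0.0, 5.0) for _ in range(3)]   # unexpected obstacle
print(any(routine), surprise)   # False [False, False, True]
```

Small, expected errors never escalate, while a persistent surprise does, and the monitor's precision drops as it learns that its predictions have become unreliable.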

Karl Friston describes this as precision engineering—the art of designing systems that dynamically allocate control authority across hierarchical levels based on context and uncertainty.

Why Hierarchy Works: The Curse of Dimensionality Broken

Why does hierarchy help with computational tractability? The answer is in the mathematics of state space compression.

Imagine you're planning a cross-country road trip. If you tried to plan every steering adjustment, every brake tap, every lane change for the entire journey, the state space would be astronomical. Even powerful computers couldn't search it exhaustively.

But if you think hierarchically, the problem decomposes:

At the highest level, you're choosing routes between cities. The state space is just the graph of highways. You can search this efficiently—it's a classic shortest-path problem.

At the next level down, you're navigating within a city: which streets to take, where to turn. The state space is larger, but still tractable because you're only planning a few miles at a time.

At the lowest level, you're controlling the vehicle moment-to-moment. The state space includes steering angle, throttle position, brake pressure—lots of continuous variables. But your planning horizon is short (seconds), so even though the state is high-dimensional, you're not projecting far into the future.

The key is that each level operates in a compressed representation. The high-level model doesn't track steering angles; it only tracks which city you're in. The mid-level model doesn't track throttle or brake pressure; it only tracks streets and turns. This compression is what makes deep planning tractable.
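The road-trip decomposition can be sketched with one generic shortest-path routine run over two different graphs. Dijkstra's algorithm is a standard choice here, not something the text prescribes, and the city names, street names, and edge weights are hypothetical. The point is that each search sees only its own compressed graph.

```python
import heapq

def dijkstra(graph, start, goal):
    """Shortest path on a weighted graph given as {node: {neighbor: cost}}."""
    frontier = [(0.0, start, [start])]
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nbr, w in graph.get(node, {}).items():
            if nbr not in visited:
                heapq.heappush(frontier, (cost + w, nbr, path + [nbr]))
    return float("inf"), []

# High level: only cities and highways (edge weights are hypothetical hours).
highways = {"A": {"B": 4, "C": 3}, "B": {"D": 2}, "C": {"B": 3, "D": 5}, "D": {}}
# Low level: streets inside the destination city only (hypothetical minutes).
streets_in_D = {"exit": {"main_st": 1, "ring_rd": 3},
                "main_st": {"cafe": 1}, "ring_rd": {"cafe": 1}}

route = dijkstra(highways, "A", "D")                 # never sees a single street
last_leg = dijkstra(streets_in_D, "exit", "cafe")    # never sees another city
print(route)      # (6.0, ['A', 'B', 'D'])
print(last_leg)   # (2.0, ['exit', 'main_st', 'cafe'])
```

The street-level search only runs for the leg currently being executed, so its graph stays small no matter how long the overall trip is.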

In information-theoretic terms, each level is performing lossy compression of the level below it, discarding details that don't matter at longer timescales. Your high-level plan doesn't specify how you'll walk to the coffee shop because those details aren't relevant until you're actually executing that subgoal.

The brain appears to be organized along similar lines. The prefrontal cortex maintains abstract representations of task structure, premotor areas translate these into action sequences, and primary motor cortex handles fine-grained control. Each level abstracts away details from the level below, allowing for efficient planning at multiple scales simultaneously.

Learning Across Hierarchies: Where Do Abstractions Come From?

A crucial question remains: how does the agent learn what the right levels of abstraction are?

In early implementations of hierarchical active inference, the hierarchy was hand-designed. A human engineer would decide: "Level 1 represents cities, Level 2 represents streets, Level 3 represents motor commands." This works for simple, well-structured domains. But it doesn't scale to open-ended environments where the relevant abstractions aren't known in advance.

Recent work has focused on learning hierarchical generative models from experience. The idea is to let the agent discover for itself which high-level states are useful for prediction and planning.

One approach uses variational autoencoders (VAEs) at each level of the hierarchy. The high-level VAE learns a compressed latent representation of sensory sequences. This latent space becomes the state space for high-level planning. The mid-level VAE learns to predict transitions in the high-level latent space while maintaining its own finer-grained representation. And so on down the stack.

Another approach borrows from reinforcement learning: options or skills. An option is a temporally extended action—a reusable subroutine that the agent can invoke as a single decision. "Navigate to location X" becomes a single high-level action, even though it involves hundreds of low-level motor commands.
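In the options framework of Sutton, Precup and Singh, an option has three parts: an initiation set (where it can be invoked), an intra-option policy (how it acts), and a termination condition (when it stops). This is a minimal sketch on a grid; `navigate_to`, `run_option`, and the greedy x-then-y movement rule are hypothetical illustrations.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

State = Tuple[int, int]   # grid cell (x, y)
Move = Tuple[int, int]    # primitive action (dx, dy)

@dataclass
class Option:
    """A temporally extended action: where it may start, how it acts, when it stops."""
    name: str
    initiation: Callable[[State], bool]   # can the option be invoked in this state?
    policy: Callable[[State], Move]       # primitive move chosen while the option runs
    termination: Callable[[State], bool]  # has the option finished?

def navigate_to(target: State) -> Option:
    """Hypothetical 'navigate to X' skill using a greedy x-then-y rule."""
    def policy(s: State) -> Move:
        if s[0] != target[0]:
            return (1 if target[0] > s[0] else -1, 0)
        return (0, 1 if target[1] > s[1] else -1)
    return Option(f"navigate_to{target}", lambda s: True, policy, lambda s: s == target)

def run_option(option: Option, state: State, max_steps: int = 100):
    """Run an option to termination; return the final state and primitive steps used."""
    if not option.initiation(state):
        raise ValueError(f"{option.name} cannot start from {state}")
    steps = 0
    while not option.termination(state) and steps < max_steps:
        dx, dy = option.policy(state)
        state = (state[0] + dx, state[1] + dy)
        steps += 1
    return state, steps

# One high-level decision, five low-level moves.
print(run_option(navigate_to((3, 2)), (0, 0)))   # ((3, 2), 5)
```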

The key insight is that useful abstractions are those that chunk together correlated events. If certain low-level state transitions reliably happen together, they should be represented as a single high-level event. This is compression via the discovery of temporal structure.
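A minimal sketch of chunking by temporal structure, assuming a single stream of discrete events: two consecutive events are merged into one chunk when each reliably predicts the other, meaning both P(b|a) and P(a|b) exceed a threshold. The bidirectional test, the threshold, and the coffee-routine events are illustrative choices, not a specific published algorithm.

```python
from collections import Counter

def chunk(sequence, threshold=0.9):
    """Group consecutive events that reliably occur together into chunks."""
    pairs = Counter(zip(sequence, sequence[1:]))
    outgoing = Counter(a for a, _ in pairs.elements())   # transitions out of a
    incoming = Counter(b for _, b in pairs.elements())   # transitions into b

    def bound(a, b):
        n = pairs[(a, b)]
        # b reliably follows a, and a reliably precedes b
        return n / outgoing[a] >= threshold and n / incoming[b] >= threshold

    chunks, current = [], [sequence[0]]
    for a, b in zip(sequence, sequence[1:]):
        if bound(a, b):
            current.append(b)              # same chunk
        else:
            chunks.append(tuple(current))
            current = [b]                  # unpredictable transition: new chunk
    chunks.append(tuple(current))
    return chunks

events = ["grind", "tamp", "brew", "milk", "grind", "tamp", "brew", "sugar",
          "wash", "grind", "tamp", "brew", "milk", "wash", "grind", "tamp",
          "brew", "sugar", "grind", "tamp", "brew"]
print(sorted(set(chunk(events))))
# [('grind', 'tamp', 'brew'), ('milk',), ('sugar',), ('wash',)]
```

The always-together sequence grind, tamp, brew becomes one reusable high-level event, while the variable steps around it stay separate.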

Mathematically, this is related to the idea of information bottlenecks: you want the high-level representation to retain only the information about the past that's relevant for predicting the future, discarding everything else. This naturally leads to hierarchical factorization of the generative model.
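One standard way to write this down is the information bottleneck objective of Tishby, Pereira and Bialek. Here X stands for the past, Y for the future, and Z for the higher-level representation; reading Z as a level of the hierarchy is an interpretation, and β ≥ 0 is a trade-off parameter:

$$
\min_{p(z \mid x)} \; I(X; Z) \;-\; \beta \, I(Z; Y)
$$

The first term penalizes how much of the past Z retains; the second rewards how much Z says about the future. Larger β keeps more predictive detail, while smaller β forces coarser, more abstract states.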

The result is a system that learns to "think" at multiple levels of abstraction, automatically discovering the timescales and granularities that are useful for navigating its environment. This is starting to look like genuine cognitive architecture—not just an engineering trick, but a principled solution to the problem of bounded rationality.

Hierarchical Active Inference in the Wild

Where has this actually been implemented? What can hierarchical active inference agents currently do?

Robotic manipulation — Researchers at UCL and the Max Planck Institute have built hierarchical active inference controllers for robotic arms. The high level plans which object to grasp and where to place it. The mid-level translates this into a trajectory through workspace. The low level controls joint torques to follow the trajectory. The system adapts in real-time to perturbations, replanning at the appropriate level when predictions fail.

Autonomous navigation — Hierarchical active inference has been applied to self-driving scenarios. The top level chooses routes and goals. The middle level handles lane-keeping and obstacle avoidance. The bottom level controls acceleration and steering. Critically, the system doesn't need separate training for each level—it learns the full hierarchy end-to-end by minimizing expected free energy.

Compositional problem-solving — In discrete task domains like grid-world puzzles, hierarchical active inference agents learn to decompose complex goals into sequences of subgoals. This is the kind of compositional planning that classical RL struggles with. The hierarchy naturally discovers reusable task components without explicit reward shaping.

Neural modeling — Beyond engineering applications, hierarchical active inference has become a leading framework for understanding cortical organization. The hypothesis is that the brain's hierarchical structure—from sensory cortex to association areas to prefrontal cortex—implements precisely this kind of multi-scale active inference. Different regions operate at different temporal scales, with higher areas providing contextual priors and lower areas reporting prediction errors.

This isn't just theory. Empirical neuroscience increasingly supports the idea that cortical hierarchies encode precision-weighted predictions across multiple timescales. The canonical microcircuit (the stereotyped pattern of connections repeated across the six layers of the neocortex) may be the brain's implementation of one level in a hierarchical active inference architecture.

The Scalability Thesis

Here's the provocative claim: hierarchical active inference might be the only way to scale active inference to truly complex, open-ended tasks.

Flat active inference—a single model planning over a single timescale—hits computational limits quickly. Even with efficient message passing, planning over long horizons in high-dimensional state spaces becomes intractable. This is why early active inference demos were limited to simple grid worlds and toy problems.

Hierarchy breaks the curse of dimensionality by factorizing the planning problem. Instead of searching one enormous space, you search multiple smaller spaces in sequence. The total computational cost scales much more favorably—often linearly with the depth of the hierarchy rather than exponentially with the planning horizon.
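A back-of-the-envelope comparison, under simplifying assumptions: every decision at every level has the same branching factor, each level plans a fixed number of steps ahead, and one step at a given level spans that many steps of the level below. The helper names and numbers are hypothetical counting aids, not a planner.

```python
def flat_cost(branching: int, horizon: int) -> int:
    """Action sequences a flat planner must score: branching ** horizon."""
    return branching ** horizon

def hierarchical_cost(branching: int, horizon_per_level: int, levels: int) -> int:
    """Sequences scored in one planning pass where each level searches once.

    Each level only looks horizon_per_level steps ahead over its own choices,
    and the levels are searched one after another rather than jointly,
    so their costs add instead of multiplying.
    """
    return levels * branching ** horizon_per_level

# Hypothetical setting: 4 choices per decision, 3 levels, 5-step plans per level.
# One top-level step spans 5 mid-level steps, each spanning 5 low-level steps,
# so a flat planner covering the same span needs a 5 ** 3 = 125-step horizon.
b, h, L = 4, 5, 3
flat = flat_cost(b, h ** L)
hier = hierarchical_cost(b, h, L)
print(f"hierarchical: {hier}, flat: about 10^{len(str(flat)) - 1}")
# hierarchical: 3072, flat: about 10^75
```

Over a whole episode the lower levels are re-invoked once per subgoal handed down (1 + 5 + 25 = 31 searches here, 31,744 sequences in total), which is still negligible next to the flat count.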

But there's a deeper sense in which hierarchy is necessary. Complex behavior isn't just about long-horizon planning. It's about compositionality—the ability to combine learned primitives in novel ways to solve new problems.

Hierarchical active inference naturally supports compositional generalization. The high-level model learns a vocabulary of reusable subgoals. The mid-level model learns how to achieve those subgoals in different contexts. The low-level model learns robust motor primitives. When faced with a new task, the agent can recombine these pieces without relearning from scratch.

This is how humans navigate the world. You don't learn "making coffee at home" and "making coffee at a friend's house" as separate tasks. You learn abstract procedures—"heat water," "grind beans," "pour and steep"—that generalize across contexts. Hierarchy gives you this for free.
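The reuse idea can be sketched as a two-level expansion table, in the style of hierarchical task decomposition. Everything here (`MAKE_COFFEE`, `SKILLS`, `expand`, and the step names) is hypothetical: the abstract procedure is written once, and only subgoals whose execution differs between contexts need context-specific entries.

```python
# High level: one abstract procedure, shared across contexts.
MAKE_COFFEE = ["heat water", "grind beans", "pour and steep"]

# Mid level: how each subgoal is achieved in a given context (None = any context).
SKILLS = {
    ("heat water", "home"): ["fill kettle", "switch on kettle"],
    ("heat water", "friend"): ["fill pot", "light stove"],
    ("grind beans", "home"): ["load grinder", "press grind"],
    ("grind beans", "friend"): ["find hand grinder", "crank grinder"],
    ("pour and steep", None): ["pour water", "wait 4 min"],
}

def expand(plan, context):
    """Expand abstract subgoals into primitive steps, preferring context-specific skills."""
    steps = []
    for subgoal in plan:
        skill = SKILLS.get((subgoal, context)) or SKILLS[(subgoal, None)]
        steps.extend(skill)
    return steps

print(expand(MAKE_COFFEE, "home"))
print(expand(MAKE_COFFEE, "friend"))
```

Supporting a new kitchen means adding entries only for the subgoals that change there; the high-level plan and the context-free "pour and steep" skill are reused as-is.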

In AToM terms, this is coherence across scales. The agent maintains self-consistency (minimizing free energy) at multiple levels of organization simultaneously: high-level plans cohere with mid-level actions, which in turn cohere with low-level control. When this coherence breaks (when prediction errors exceed thresholds), the system restructures, revising its model at the appropriate level.

Meaning, remember, is coherence over time: M = C/T. A hierarchical agent maintains meaning across multiple timescales simultaneously. It's not just reacting to immediate sensory input; it's pursuing goals that unfold over seconds, minutes, hours. This is what makes behavior genuinely intelligent rather than merely reactive.

Open Questions and Future Directions

Despite recent progress, hierarchical active inference remains more a research program than a mature engineering framework. Several fundamental questions remain open:

How many levels? — Biological brains have many levels of cortical hierarchy. How deep should an artificial agent's hierarchy be? Is there a principled way to determine the optimal depth for a given task, or is it always domain-specific?

How to learn the hierarchy? — Current approaches to learning hierarchical generative models are computationally expensive and data-hungry. Can we develop more efficient methods for discovering useful abstractions? Can we transfer learned hierarchies across tasks?

Precision dynamics — How should precision weights change over time and across levels? The theory says precision should track uncertainty, but how do you compute this efficiently in high-dimensional spaces? Approximations are needed, but which ones preserve the important properties?

Continuous vs discrete hierarchies — Should levels of the hierarchy be sharply delineated, or should abstraction vary smoothly across scales? Some evidence suggests the brain uses smooth gradients of abstraction rather than discrete levels. How would this work in practice?

Hierarchical exploration — How should curiosity and information-seeking behavior work in hierarchical systems? Should the agent explore at multiple levels simultaneously, or focus exploration at specific levels depending on context?

These are not just engineering challenges. They're questions about the nature of intelligence itself. How do you build a system that can think at multiple timescales, pursue multiple goals, adapt at multiple levels of abstraction? The answers will determine whether active inference can scale from toy problems to real-world complexity.

From Implementation to Architecture

What started as an engineering trick—"let's add hierarchy to make planning tractable"—has become a deep insight into cognitive architecture.

The brain isn't a single active inference agent. It's a society of active inference agents, each operating at a different scale, each pursuing its own imperative to minimize surprise, but coordinated through precision-weighted message passing up and down the cortical hierarchy.

This explains phenomena that were mysterious under flat models:

Why you can drive while having a conversation — different levels of the hierarchy handle different tasks, with attention (precision) allocated dynamically.

Why skill learning involves stages — initially, high-level areas micromanage low-level execution (high precision priors), but as skills become automatic, control is delegated to lower levels (precision relaxes).

Why abstract thought feels different from perception — it's literally happening in different parts of the hierarchy, at different temporal scales, in more compressed state spaces.

Why trauma disrupts coherent action across timescales — it's a failure of hierarchical integration, where prediction errors at low levels can't be resolved by high-level model revision.

Hierarchical active inference gives us a unified framework for understanding how complex, goal-directed behavior emerges from the interaction of multiple timescales and levels of abstraction.

And crucially, it's a framework we can implement. The robotics systems, the navigation agents, the compositional problem-solvers are working implementations rather than thought experiments, and they exhibit genuinely adaptive, goal-directed behavior.

This is the promise of applied active inference: not just a theory of brain function, but an engineering blueprint for artificial minds.

Building Systems That Think Across Scales

The practical takeaway for anyone building active inference agents: if your task involves planning over multiple timescales, or coordinating abstract goals with concrete actions, you need hierarchy.

Design principles:

Start with the timescales — Identify the natural temporal structure of your task. What happens in milliseconds? What happens in minutes? What happens in hours? Each distinct timescale should have its own level in the hierarchy.

Compress upward — Each level should represent the level below it in a compressed form, retaining only information relevant for longer-term planning. Use dimensionality reduction techniques (VAEs, information bottlenecks) to discover these compressed representations.

Pass precision, not just predictions — Higher levels shouldn't just tell lower levels what to do; they should communicate how confident they are. Implement precision weighting so that control authority shifts dynamically based on uncertainty.

Allow bottom-up revision — When lower levels encounter persistent prediction errors, this should trigger replanning at higher levels. Build explicit pathways for error signals to propagate upward and revise abstract plans.

Learn the whole stack — Don't hand-code the hierarchy. Let the agent discover useful abstractions through experience. Train the full hierarchical model end-to-end to minimize expected free energy across all levels simultaneously.
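"Start with the timescales" and "Allow bottom-up revision" can be combined into a multi-rate schedule. This minimal sketch assumes each level has a fixed update period measured in ticks of the fastest clock, and that a level reporting persistent error wakes its parent out of schedule; `schedule`, the periods, and the escalation event are hypothetical.

```python
def schedule(periods, escalations, ticks):
    """Return, for each tick, the sorted indices of levels that update.

    periods     : update period of each level in base ticks, slowest level first
    escalations : {tick: level_index} where that level reports persistent error
    A level updates when its own clock fires, or when the level directly below
    it escalates, so slow levels can be woken early by surprises.
    """
    updates = []
    for t in range(ticks):
        active = {i for i, p in enumerate(periods) if t % p == 0}
        level = escalations.get(t)
        if level is not None and level > 0:
            active.add(level - 1)        # bottom-up revision wakes the parent
        updates.append(sorted(active))
    return updates

periods = [100, 10, 1]      # hypothetical: top every 1 s, mid every 100 ms, low every 10 ms
escalations = {37: 2}       # low level hits a persistent error at tick 37
ups = schedule(periods, escalations, 100)
print(ups[0], ups[37], ups[40])   # [0, 1, 2] [1, 2] [1, 2]
```

Over these 100 ticks the top level updates once, the middle level eleven times (ten scheduled plus one early wake-up), and the bottom level on every tick.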

This is harder than building flat agents. It requires careful architecture design, efficient inference algorithms, and substantial computational resources. But for tasks of genuine complexity—the kinds of tasks we actually care about—it may be the only way forward.

The coffee you made this morning required coordination across many levels of a motor hierarchy, from abstract intention down to individual muscle fibers. If we want artificial agents that can operate in the real world with anything approaching human competence, they'll need the same.

Hierarchy isn't a complication. It's the solution.

This is Part 8 of the Active Inference Applied series, exploring how to build artificial agents that minimize surprise through action.

Previous: Robotics and Embodied Active Inference

Next: Active Inference for Language Models: The Next Frontier

Further Reading

- Friston, K., et al. (2017). "Deep temporal models and active inference." Neuroscience & Biobehavioral Reviews.

- Parr, T., & Friston, K. J. (2018). "The Anatomy of Inference: Generative Models and Brain Structure." Frontiers in Computational Neuroscience.

- Çatal, O., et al. (2021). "Learning Generative State Space Models for Active Inference." Frontiers in Computational Neuroscience.

- Pezzulo, G., et al. (2018). "Hierarchical Active Inference: A Theory of Motivated Control." Trends in Cognitive Sciences.

- Tschantz, A., et al. (2020). "Learning action-oriented models through active inference." PLOS Computational Biology.

- Champion, T., et al. (2021). "Realizing Active Inference in Variational Message Passing: the Outcome-Blind Certainty Seeker." Neural Computation.
