Information Cascades

In 1992, economists Sushil Bikhchandani, David Hirshleifer, and Ivo Welch published a paper that explained why everyone suddenly believes the same thing—even when the thing is wrong.

Their model was called "information cascades," and it offered an answer to a long-standing puzzle: how can markets, crowds, and populations arrive at a wildly incorrect consensus? Not through stupidity. Not through manipulation. But through rational individual choices that aggregate into collective delusion.

The answer was both elegant and disturbing. Under certain conditions, the rational thing to do is ignore your own information and copy others. And when everyone does this, the group converges on whatever belief happened to get established early—regardless of whether it's true.

The Urn Experiment

The classic cascade experiment works like this:

There's an urn containing three balls. Either two are red and one is blue, or two are blue and one is red. You don't know which. Your goal is to guess the urn's composition.

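You draw one ball, observe its color privately, and announce your guess to the room; the ball then goes back in the urn for the next person. But here's the key: before you announce, you hear the guesses of everyone who went before you. You hear their guesses, not what they drew.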

Suppose the urn is actually two red, one blue. The first person draws a ball and, by bad luck, gets the lone blue one. Their best guess: the urn is majority blue. They announce "blue."

The second person draws. They also get blue. Combined with the first guess, that's two blue draws: the urn is probably blue. They announce "blue."

The third person draws. They get... red. What should they guess?

Here's the cascade: the third person has heard two blue guesses. Their private information (one red ball) suggests the urn might be red. But the public information reveals two blue draws, and two blue draws outweigh one red. By Bayes' rule, the urn is now twice as likely to be majority blue as majority red. Rationally, they announce "blue."

The fourth person draws and also gets red. They've now heard three "blue" guesses, but the third one tells them nothing: the third person would have said "blue" no matter what they drew. So the fourth person faces exactly the evidence the third did, two blue draws against one red, and announces "blue."

And so it cascades. The first two draws happened to be blue, so everyone after them rationally ignores their private information and copies the public consensus, even though the urn actually contains more red balls than blue.
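The arithmetic behind each announcement can be checked with Bayes' rule. The sketch below is a minimal illustration under assumptions of our own, not taken from the original experiment write-up: a 50/50 prior over the two urns, draws with replacement so each draw matches the majority color with probability 2/3, and a guesser who is exactly indifferent follows their own draw (which is what lets the first two guesses reveal the first two draws). The function name `posterior_red` is hypothetical.

```python
from fractions import Fraction

P_MATCH = Fraction(2, 3)  # a single draw matches the urn's majority color 2/3 of the time


def posterior_red(n_red: int, n_blue: int, p: Fraction = P_MATCH) -> Fraction:
    """P(urn is majority red | draws), starting from a 50/50 prior over the two urns."""
    like_red = p**n_red * (1 - p) ** n_blue   # likelihood if the urn is 2 red, 1 blue
    like_blue = (1 - p) ** n_red * p**n_blue  # likelihood if the urn is 2 blue, 1 red
    return like_red / (like_red + like_blue)


# (red draws, blue draws) each person can count: their own draw plus any
# draws that earlier guesses actually reveal.
evidence = {
    "Person 1": (0, 1),  # own blue draw
    "Person 2": (0, 2),  # person 1's guess reveals a blue draw; own draw blue
    "Person 3": (1, 2),  # two revealed blue draws; own draw red
    "Person 4": (1, 2),  # person 3 would say "blue" whatever they drew, so their
                         # guess reveals nothing: still two blue draws plus own red
}

for name, (n_red, n_blue) in evidence.items():
    post = posterior_red(n_red, n_blue)
    guess = "red" if post > Fraction(1, 2) else "blue"
    print(f"{name}: P(majority red) = {post} -> announces {guess}")
```

The posteriors come out as 1/3, 1/5, 1/3, and 1/3, so everyone announces blue. Persons 3 and 4 face identical evidence, which is why the fourth guess, and every guess after it, adds nothing to the public record.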

The Fragility of Truth

The urn experiment reveals something profound about collective belief: the truth can lose even when the evidence favors it.

In the cascade, the early draws determined the outcome. If the first two people had happened to draw red instead of blue, the cascade would have gone the opposite direction—and would have been equally "locked in" regardless of the underlying reality.

This means that beliefs at scale depend enormously on initial conditions. Early evidence gets amplified. Late evidence gets ignored. The sequential structure of information revelation creates path dependency that can detach consensus from truth.

Worse, cascades are informationally inefficient. After the first few people have announced, everyone is just copying. No new information enters the system. A million people could participate, and the collective answer would still reflect only the first few draws.
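A short simulation makes the "first few draws" claim concrete. This is a sketch of the sequential urn game under our own assumptions, not code from the 1992 paper: draws match the urn's majority with probability 2/3, and a guesser who is exactly indifferent follows their own draw. Under those assumptions the crowd locks into the wrong color about one time in five (analytically, (1/9) / (1/9 + 4/9) = 1/5), and on average only about 3.6 guesses ever reveal a private draw, however many people take part. The helper names `run_sequence` and `summarize` are hypothetical.

```python
import random


def run_sequence(n_agents: int, p: float, rng: random.Random) -> tuple[bool, int]:
    """One run of sequential guessing. Without loss of generality the urn is majority red.

    Returns (final guess is wrong, number of guesses that revealed a draw).
    Assumption: a guesser who is exactly indifferent follows their own draw.
    """
    lead = 0         # revealed red draws minus revealed blue draws
    informative = 0  # guesses that passed a private draw into the public record
    guess = None
    for _ in range(n_agents):
        if abs(lead) >= 2:
            # Cascade: public evidence outweighs any single draw, so every remaining
            # guesser announces the same color and reveals nothing. Stop early.
            guess = "red" if lead > 0 else "blue"
            break
        draw_red = rng.random() < p            # private draw matches the majority with prob p
        guess = "red" if draw_red else "blue"  # with |lead| <= 1, the own draw decides
        lead += 1 if draw_red else -1
        informative += 1
    return guess == "blue", informative


def summarize(trials: int = 20_000, n_agents: int = 1_000_000,
              p: float = 2 / 3, seed: int = 0) -> tuple[float, float]:
    rng = random.Random(seed)
    runs = [run_sequence(n_agents, p, rng) for _ in range(trials)]
    wrong_share = sum(wrong for wrong, _ in runs) / trials
    mean_informative = sum(k for _, k in runs) / trials
    return wrong_share, mean_informative


wrong_share, mean_informative = summarize()
print(f"wrong cascades: {wrong_share:.3f}, informative guesses per run: {mean_informative:.2f}")
```

Raising `n_agents` changes nothing: once the lead reaches two, the loop can stop, because no later guess carries information.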

This is one reason polls can be wrong, markets can misprice, and entire populations can believe things that turn out to be false. It's not that people are irrational. It's that the aggregation structure can produce wrong collective outcomes from rational individual choices.

Cascade Dynamics in the Wild

The urn experiment is stylized, but cascade dynamics show up everywhere.

Restaurant choices. You're in an unfamiliar city looking for dinner. You see two restaurants side by side—one empty, one crowded. Which do you choose? Rationally, the crowded one. Other diners presumably know something you don't. But those other diners made the same inference about the diners before them. The crowd is evidence of... the crowd. The early random diners determined the outcome; the late diners are just cascading.

Stock markets. Prices aggregate information, but the way trades arrive in sequence can produce cascade-like dynamics. Early trades move prices, which signal to later traders, who trade based partly on the price movement. If early trades happen to push the price up, later traders may infer that others know something, and buy. The price rises further. In the extreme, the move can detach from fundamentals.

Academic citations. Early citations signal quality, which attracts more citations, which attract still more. Papers that happen to get cited early get cited more, not necessarily because they're better, but because citations generate citations. Even science runs partly on cascade dynamics.
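The citation mechanism is closer to cumulative advantage than to Bayesian inference, but it shows the same dependence on early luck. A minimal sketch, assuming a Pólya urn as the model (our choice for illustration, not a claim about how citations actually accrue): two equally good papers start with one citation each, and each new citation goes to a paper with probability proportional to the citations it already has. For this starting point the long-run share is known to be uniformly distributed between 0 and 1, so the final split is anyone's guess even though the papers are identical. The helper `polya_share` is hypothetical.

```python
import random
import statistics


def polya_share(n_citations: int, rng: random.Random) -> float:
    """Final citation share of paper A in a two-paper Polya urn.

    Both papers are equally good and start with one (pseudo-)citation each;
    every new citation goes to A with probability equal to A's current share.
    """
    a, b = 1, 1
    for _ in range(n_citations):
        if rng.random() < a / (a + b):
            a += 1
        else:
            b += 1
    return a / (a + b)


rng = random.Random(42)
shares = [polya_share(300, rng) for _ in range(1_000)]
print(f"mean share {statistics.fmean(shares):.2f}, spread (stdev) {statistics.stdev(shares):.2f}")
```

Across runs the mean share sits near 0.5, but the spread is wide (a standard deviation near 0.29, as for a uniform distribution): individual runs routinely end 80/20 or 20/80.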

Political opinion. Early polls shape later voter behavior. A candidate who polls well in early states gets momentum—not necessarily because they're preferred, but because voters infer that others know something. The cascade can make early polling leads self-fulfilling.

In each case, the pattern is the same: rational individuals make inferences from others' behavior, which generates behavior for still others to make inferences from. The loop feeds itself, and the direction it feeds depends on initial conditions, not underlying truth.

The Difference from Contagion

Information cascades look like social contagion but operate differently.

In emotional contagion, the transmission mechanism is direct: you catch the emotion from exposure. Your mood changes because you perceived someone else's mood.

In information cascades, the transmission mechanism is inferential: you update your beliefs based on what others' behavior reveals about their beliefs. You're not catching their belief—you're rationally inferring from it.

This distinction matters because the interventions differ. Emotional contagion might be reduced by limiting exposure. Information cascades can't be fixed by exposure limits—the cascading happens because people are correctly reasoning from limited information. The problem isn't that they're influenced. The problem is that they're rationally influenced by a signal that doesn't contain much information.

It also matters because cascades are fragile in a way that contagion isn't. A cascade can shatter if new information contradicts the consensus and that information becomes public. People start acting on their own private information again, and the cascade can reverse.
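The fragility follows from how little evidence a cascade rests on. In the urn model, a cascade can start from a lead of just two draws, so a single contrary draw made public is enough to put people back on their own signals. A minimal sketch, assuming the urn setup (each draw is worth one unit of evidence, and an indifferent guesser follows their own draw); `announce` is a hypothetical helper.

```python
def announce(lead: int, draw_red: bool) -> str:
    """Guess from public evidence plus one's own draw.

    lead = red draws minus blue draws that are public knowledge.
    Assumption: an exactly indifferent guesser follows their own draw.
    """
    total = lead + (1 if draw_red else -1)
    if total > 0:
        return "red"
    if total < 0:
        return "blue"
    return "red" if draw_red else "blue"


lead = -2                               # blue cascade: two blue draws are public
print(announce(lead, draw_red=True))    # "blue": a private red draw is ignored

lead += 1                               # one red draw becomes public (e.g. an audit)
print(announce(lead, draw_red=True))    # "red": private draws matter again
print(announce(lead, draw_red=False))   # "blue"
```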

Emotional contagion is stickier. Once you've caught a mood, contradictory information doesn't automatically undo it. The mood persists independent of its origin.

Real-world beliefs usually involve both mechanisms. You catch the emotional coloring of a belief (contagion) and you update toward consensus because others seem to believe it (cascade). The two reinforce each other.

Herding: The Behavioral Economist's Version

Economists like to talk about "herding"—a broader category that includes cascades but extends to situations where people copy others even without rational inference.

Reputation herding: Fund managers follow consensus because deviating is career-risky. If you make a contrarian bet and lose, you're fired. If you follow the herd and lose, well, everyone lost. The incentive structure rewards conformity independent of truth.

Payoff externalities: Some behaviors are more valuable when others adopt them too. Joining a social network, adopting a technology standard, using a particular language. You herd not because you're inferring from others, but because others' choices literally change the payoffs.

Sanction avoidance: In many environments, deviating from consensus triggers punishment. Social disapproval, exclusion, legal consequences. You herd to avoid sanctions, not because you believe the herd is right.

Cognitive economy: Thinking is expensive. Copying is cheap. When the stakes are low, it makes sense to just follow what others are doing rather than independently investigate. You herd to save effort.

All of these produce cascade-like dynamics without the rational-inference mechanism. The result is the same: collective convergence that may or may not track truth, path-dependent outcomes, fragility to shocks.

Breaking Cascades

If cascades can detach from truth, how do we get them back?

Diversity of initial conditions. Cascades depend on early signals. If early signals are diverse—different people getting different information and acting independently—the cascade is less likely to lock in early.

Simultaneous revelation. If everyone reveals their private information at once, rather than sequentially, cascades can't form. You can't copy others because you don't know their choice until after you've made yours. This is the logic behind secret ballots and sealed-bid auctions.
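The gain from simultaneity can be quantified in the urn setting. If every guess is made at once, each one simply reports its guesser's own draw, and majority accuracy climbs toward certainty as the crowd grows (the Condorcet jury theorem). In the sequential version, under the assumption that indifferent guessers follow their own draw, the crowd ends up right only about 4 times in 5 no matter how large it is. A sketch, assuming draws that match the majority color with probability 2/3 and an odd number of voters; `majority_accuracy` is a hypothetical helper.

```python
from math import comb


def majority_accuracy(n: int, p: float = 2 / 3) -> float:
    """P(the majority of n independent, simultaneous guesses is right); n must be odd.

    Each guess simply reports the guesser's own draw, which is right with prob p.
    """
    if n % 2 == 0:
        raise ValueError("use an odd n to avoid ties")
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


for n in (1, 3, 25, 101):
    print(n, round(majority_accuracy(n), 3))
```

One voter is right 2/3 of the time and three voters 20/27 of the time; by 25 voters the majority is right more than 90% of the time.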

Incentivizing contrarianism. Rewards for being independently right (rather than collectively right) can sustain private information disclosure. Prediction markets try to do this: you profit by being correct, not by agreeing with the consensus.

Public disclosure of private signals. If private information becomes public before choices are made, everyone can condition on the full evidence set. The cascade dissolves because there's nothing to cascade from.

Institutional skepticism. Some institutions deliberately cultivate devil's advocates, red teams, or contrarian voices. The goal is to prevent early consensus from hardening before all information is surfaced.

None of these solutions are costless. Simultaneous revelation is often impractical. Contrarian incentives can create their own pathologies (professional contrarianism, trolling). Public disclosure may not be possible when information is costly to acquire or verify.

But the diagnosis is clear: cascades are a failure mode of sequential information aggregation. Fixing them requires changing how information is revealed and how inferences are made.

The Internet as Cascade Machine

Social media platforms are nearly perfectly designed to generate information cascades.

Information revelation is highly sequential—you see what others posted before you, and your post influences what others see. Engagement metrics create salient signals (likes, shares, ratios) that reveal what others appear to believe. The speed of transmission is fast enough that cascades can form before contradicting information surfaces.

Moreover, platforms create what look like public signals but are actually private signals dressed up. You see what the algorithm shows you, which isn't the same as what everyone sees. This means you're inferring from "public opinion" that's actually a tailored sample—creating cascades from non-representative data.

The result is cascade turbulence: cascades forming and breaking rapidly, beliefs lurching from consensus to consensus, fragile equilibria shattering whenever new viral content emerges. The system never settles because the information environment never stabilizes.

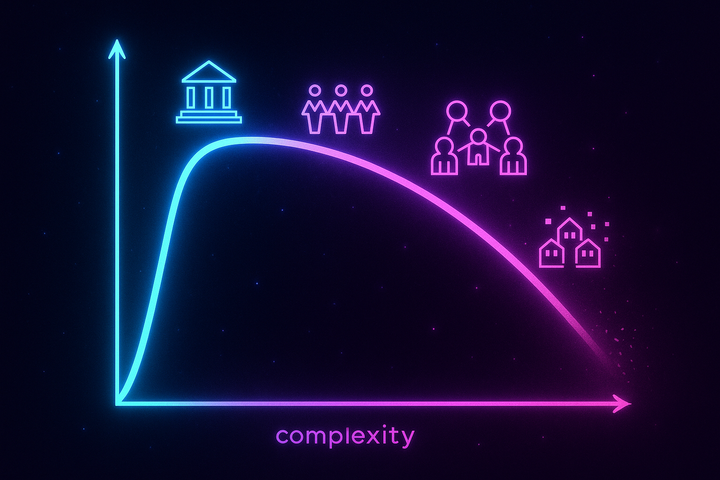

The Wisdom of Crowds—Conditionally

James Surowiecki's The Wisdom of Crowds argued that aggregated independent judgments can be remarkably accurate. The averaging effect cancels out individual errors, leaving collective wisdom.

But the key word is independent. The wisdom-of-crowds effect requires that individuals make their judgments without observing others. Once they start observing and inferring, cascade dynamics kick in. Errors no longer cancel—they compound.
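A toy simulation shows the difference between independent and herded estimates. The setup is an assumption for illustration, not Surowiecki's: the true value is 100, each person's private estimate is the truth plus Gaussian noise (standard deviation 10), and "herding" means each person after the first reports a 90/10 blend of the running average of earlier reports and their own estimate. The helper names `crowd_error` and `mean_error` are hypothetical.

```python
import random
import statistics


def crowd_error(n: int, herd_weight: float, rng: random.Random,
                truth: float = 100.0, noise_sd: float = 10.0) -> float:
    """Absolute error of the crowd's average report.

    Everyone gets an independent private estimate (truth + noise). With
    herd_weight = 0 they report it as-is; otherwise each person after the first
    reports a blend of the running average of earlier reports and their own estimate.
    """
    total = 0.0
    for i in range(n):
        own = rng.gauss(truth, noise_sd)
        if i == 0:
            report = own
        else:
            report = herd_weight * (total / i) + (1 - herd_weight) * own
        total += report
    return abs(total / n - truth)


def mean_error(herd_weight: float, trials: int = 200, n: int = 1_000, seed: int = 1) -> float:
    rng = random.Random(seed)
    return statistics.fmean([crowd_error(n, herd_weight, rng) for _ in range(trials)])


independent = mean_error(herd_weight=0.0)
herded = mean_error(herd_weight=0.9)
print(f"independent: {independent:.2f}  herded: {herded:.2f}")
```

With independent reports, the crowd average of 1,000 people is typically off by a fraction of a unit. With herding, the first few people's errors never wash out, and the crowd's error is many times larger.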

This is part of the case for betting markets over polls, secret ballots over hand-raising, and independent expert panels over deliberating committees. Independence preserves the diversity that makes aggregation work. Observation erodes it.

The tragedy is that most natural social environments involve observation. We see what others think. We infer from their behavior. We cascade. The conditions for wise crowds are artificial; the conditions for cascades are default.

Cascade Warfare

If cascades can form around arbitrary early signals, then manipulating those early signals becomes strategically valuable.

This is, arguably, part of what happened to political information online. Bad actors learned that they could seed early signals (fake accounts, bot networks, coordinated posting) and let cascades do the rest. You don't need to persuade millions of people. You just need to create the appearance of consensus early enough that the cascade forms on its own.
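The leverage of early seeding can be estimated in the urn model. A sketch under stated assumptions: honest guessers cannot tell a seeded fake from a genuine early guess, each fake counts as one draw for the wrong color, honest draws match the truth with probability 2/3, a cascade starts once the public lead reaches two, and indifferent guessers follow their own draw. The probabilities come from a short first-step analysis; `p_wrong_cascade` is a hypothetical helper.

```python
from fractions import Fraction


def p_wrong_cascade(start_lead: int, p: Fraction = Fraction(2, 3)) -> Fraction:
    """P(honest guessers lock into the wrong color), given the public lead they start from.

    start_lead = (public draws favoring the true color) minus (those favoring the
    wrong one). A seeded fake counts as one draw for the wrong color, because
    guessers cannot tell it from an honest one. Assumes a cascade starts once the
    lead reaches +/-2 and that indifferent guessers follow their own draw.
    """
    q = 1 - p
    if start_lead >= 2:
        return Fraction(0)
    if start_lead <= -2:
        return Fraction(1)
    w0 = q**2 / (1 - 2 * p * q)  # from a level start: pairs of draws until a cascade
    return {1: q * w0, 0: w0, -1: p * w0 + q}[start_lead]


for fakes in range(3):
    print(f"{fakes} seeded fake(s): P(wrong cascade) = {p_wrong_cascade(-fakes)}")
```

Under these assumptions, one fake more than doubles the chance of a wrong cascade (from 1/5 to 7/15), and two fakes make it certain before any honest person has spoken.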

The first rule of information warfare in the cascade age: you don't need to change minds. You need to change what people think other minds believe.

Humans are social creatures. We take cues from the herd. If the herd appears to believe something, we rationally update toward it. So the vector of attack isn't persuasion—it's perception of consensus.

This explains the obsession with follower counts, like ratios, trending hashtags. These are cascade signals. They tell you what others appear to believe, which shapes what you believe, which shapes what still others appear to believe. Manipulating these signals is manipulation of the cascade—far more efficient than trying to argue anyone into anything.

The Recursive Hall of Mirrors

Here's where it gets properly strange: cascades can form around what people think about what others think.

In a classic cascade, you infer from others' behavior what they believe about the world. But you can also infer what they believe about what others believe. And what they believe about what others believe about what others believe. The layers of inference stack.

Financial markets exhibit this pathology. You don't just ask "what's the true value of this stock?" You ask "what do other traders believe the value is?" And then "what do other traders believe other traders believe?" The actual fundamentals can become secondary; the game becomes one of expectations about expectations.

This is Keynes's famous "beauty contest" problem: you're not voting for who you think is most beautiful, but for who you think others will vote for. And everyone else is doing the same. The result can be a stable equilibrium around something that no one actually believes is beautiful, but that everyone believes everyone else believes is beautiful.

In a cascade, you can be locked into a belief that almost no one's private evidence supports, because everyone is inferring from everyone else's inferences and the chain has no anchor to reality.

The Takeaway

Information cascades explain why everyone can believe the same wrong thing without anyone being stupid or evil.

The mechanism is simple: when you rationally infer from others' behavior, and others rationally infer from yours, the sequential structure creates path dependency. Early signals get amplified. Late signals get ignored. Consensus can detach from truth.

This is happening all around you, all the time. In markets, in politics, in science, in your social media feed. People are copying people who copied people, in chains stretching back to initial conditions that may have been arbitrary.

You're not thinking independently. You're cascading. The question is whether you can recognize it, and whether—knowing this—you can find any way to stop.

Further Reading

- Bikhchandani, S., Hirshleifer, D., & Welch, I. (1992). "A theory of fads, fashion, custom, and cultural change as informational cascades." Journal of Political Economy.
- Banerjee, A. V. (1992). "A simple model of herd behavior." Quarterly Journal of Economics.
- Surowiecki, J. (2004). The Wisdom of Crowds. Doubleday.

This is Part 5 of the Network Contagion series. Next: "Network Topology: Small Worlds"
