Inoculation Theory: Prebunking Works

In 1961, psychologist William McGuire asked a simple question: if vaccines protect against biological viruses by exposing people to weakened pathogens, could the same principle protect against persuasion?

His experiments showed that it could. People exposed to weakened arguments against their beliefs, and given practice refuting them, became more resistant to full-strength persuasion later. The analogy to vaccines was surprisingly precise.

McGuire called this "inoculation theory." Sixty years later, it's become one of the most promising tools for defending against misinformation. Prebunking—exposing people to manipulation techniques before they encounter them—works better than debunking after the fact.

The Biological Analogy

The immune system works by recognizing threats. When you're vaccinated, you're exposed to a weakened version of a pathogen. Your immune system learns to recognize it. When you encounter the real pathogen later, your defenses are already primed.

McGuire proposed that attitudes work similarly. When you hold beliefs without ever having them challenged, they're vulnerable—like an immune system that's never seen a pathogen. But if you're exposed to weak challenges and practice defending against them, your beliefs become more resistant to stronger attacks.

The key insight: passive acceptance of information creates vulnerability. Active engagement with challenges creates resistance.

This ran counter to the common assumption that the best way to protect beliefs was to shield them from opposing views. McGuire showed the opposite: controlled exposure to opposition strengthened beliefs more than sheltering them.

The Inoculation Components

Effective inoculation has two components:

Threat. Make people aware that their beliefs might be attacked. This motivates defensive processing. Without perceived threat, people don't generate counterarguments.

Refutational preemption. Expose people to a weakened version of the attack and show how to refute it. This builds the "antibodies"—the counterarguments that can be deployed against future attacks.

Both components matter. Threat alone makes people anxious but not more resistant. Refutation alone doesn't motivate defensive engagement. Together, they create lasting resistance.

The analogy to vaccines continues: the dose matters. Too weak an exposure doesn't trigger defense. Too strong an exposure can backfire—persuading rather than inoculating. The "weakened form" must be carefully calibrated.

From Theory to Application

For decades, inoculation theory remained mostly academic. Then the internet happened.

The scale and speed of misinformation made debunking—correcting false claims after they spread—inadequate. By the time a fact-check appeared, millions had already seen and shared the lie. The "debunking" often amplified the original claim through repetition.

Researchers began exploring prebunking—inoculating people against manipulation techniques before they encountered specific false claims.

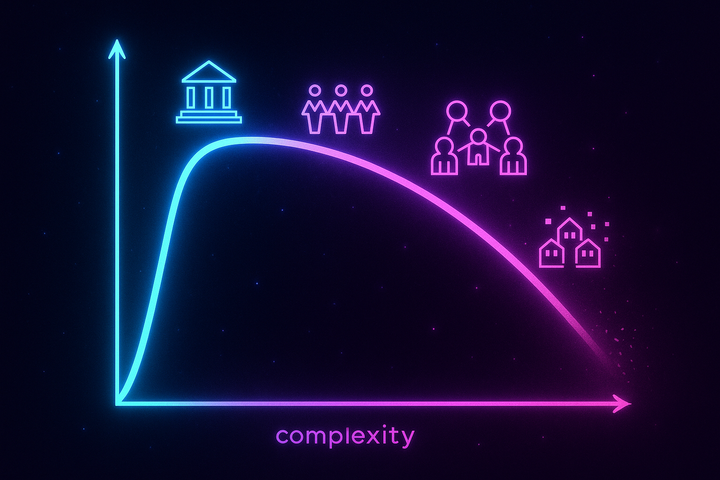

The shift was crucial: instead of playing whack-a-mole with individual lies, inoculation targets the techniques that make lies persuasive. Teach people to recognize emotional manipulation, and they're resistant to any claim that uses it—not just the specific claims you've debunked.

The Cambridge Research

A team at Cambridge University, led by Sander van der Linden, developed some of the most rigorous prebunking interventions.

Their approach inoculates against manipulation techniques rather than specific claims:

Emotional language. Teach people to recognize when language is designed to trigger outrage rather than inform. Once you see the technique, you're more resistant to it.

False experts. Teach people to verify credentials and recognize manufactured authority. Fake experts are a common manipulation tool; inoculation helps spot them.

Conspiracy logic. Teach people to recognize the structural features of conspiracy thinking—unfalsifiability, pattern-seeking, distrust of official sources. Understanding the form makes specific conspiracies less persuasive.

Polarization tactics. Teach people to recognize us-vs-them framing designed to divide. Awareness of the technique reduces its effectiveness.

The Cambridge team developed games—like "Bad News" and "Go Viral"—that let people practice creating misinformation. By understanding how manipulation works from the inside, players became more resistant to it from the outside.

Results were striking: 15-minute interventions produced significant, lasting resistance to manipulation. The effect worked across political lines—liberals and conservatives both became more resistant when inoculated.

Why Prebunking Beats Debunking

Several factors make prebunking more effective than debunking:

Timing. Prebunking happens before exposure; debunking happens after. By the time debunking arrives, the false belief may already be entrenched. First impressions are sticky.

Repetition. Debunking often requires repeating the false claim to refute it. But repetition increases familiarity, and familiarity increases belief. Debunking can accidentally reinforce the lie.

Motivated reasoning. People resist information that contradicts existing beliefs. Debunking triggers defensive reactions. Prebunking happens before beliefs form, avoiding this resistance.

Scalability. Debunking requires addressing each claim individually—an infinite task. Prebunking targets techniques that appear across claims—a finite task.

Engagement. Prebunking involves active practice and engagement. Debunking is passive information delivery. Active learning produces stronger, more durable effects.

This doesn't mean debunking is useless. Corrections matter, especially for people actively seeking accurate information. But prebunking is the more promising large-scale intervention.
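The scalability point can be made concrete with a small sketch. The Python below is an illustration only: the claim list, the cue patterns, and both function names are hypothetical, and keyword matching is a crude stand-in for the recognition skills that prebunking trains in people, not a real detector.

```python
import re
from typing import List

# Hypothetical list of claims that have already been individually debunked.
DEBUNKED_CLAIMS = {
    "the moon landing was staged",
}

# Hypothetical, deliberately crude cues for two techniques named in this article.
TECHNIQUE_CUES = {
    "emotional language": re.compile(
        r"\b(outrageous|shocking|disgusting|terrifying)\b", re.I
    ),
    "us-vs-them framing": re.compile(
        r"\b(they|them|those people)\b.*\b(us|our)\b", re.I
    ),
}


def flagged_by_claim_list(text: str) -> bool:
    """Claim-by-claim approach: only catches claims already on the list."""
    normalized = text.lower().strip(" .!?")
    return normalized in DEBUNKED_CLAIMS


def flagged_techniques(text: str) -> List[str]:
    """Technique-based approach: reports which known techniques the text shows."""
    return [name for name, cue in TECHNIQUE_CUES.items() if cue.search(text)]


if __name__ == "__main__":
    new_claim = "Shocking: they are coming to take our jobs!"
    print(flagged_by_claim_list(new_claim))  # never debunked, so not on the list
    print(flagged_techniques(new_claim))     # techniques are still recognizable
```

A claim nobody has seen before slips past the claim list, because that list has to grow with every new lie. The technique check still fires, because its short list of patterns stays the same size no matter how many claims reuse them.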

The YouTube Experiment

In 2022, Google collaborated with researchers to test prebunking at scale. They ran short inoculation videos as pre-roll ads on YouTube, reaching millions of users.

The videos—90 seconds each—explained manipulation techniques like emotional language, false dichotomies, and scapegoating. They didn't address specific political claims; they taught recognition of persuasion tactics.

Results: viewers who saw the inoculation videos were better at identifying manipulative content and more resistant to it. The effect was significant and consistent across demographics.

This was a proof of concept: inoculation can work at internet scale. The same infrastructure that spreads misinformation can spread resistance to it.

The Limits

Inoculation isn't a cure-all:

Decay. The protection fades over time without reinforcement. "Booster shots"—repeated exposure to inoculation content—help maintain resistance.

Motivated reasoning. People who are strongly committed to a belief may resist inoculation against claims that support it. Inoculation works best on people who aren't already deeply invested.

Technique evolution. As people learn to recognize manipulation techniques, manipulators adapt. The arms race continues.

Backfire potential. If inoculation is perceived as partisan—as "the other side trying to manipulate me"—it can backfire. Framing matters.

Coverage gaps. Inoculation works for people who encounter it. Reaching everyone is impossible. The most vulnerable may be least likely to encounter prebunking content.

These limits don't negate the approach—they define its scope. Inoculation is a tool, not a solution.
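The decay limit lends itself to a toy calculation. The sketch below assumes, purely for illustration, that resistance halves at a fixed interval and that each booster restores it to full strength. The exponential form, the 30-day half-life, and the function name are all assumptions, not findings from the research described here.

```python
from typing import Iterable


def resistance(
    day: float,
    booster_days: Iterable[float] = (),
    half_life_days: float = 30.0,  # assumed value, chosen only for illustration
) -> float:
    """Toy model of resistance on a 0-to-1 scale.

    Resistance is 1.0 right after the most recent exposure (the initial
    inoculation on day 0, or any later booster) and halves every
    half_life_days after that.
    """
    past_exposures = [0.0] + [d for d in booster_days if d <= day]
    days_since_last = day - max(past_exposures)
    return 0.5 ** (days_since_last / half_life_days)


if __name__ == "__main__":
    print(resistance(60))                     # no booster
    print(resistance(60, booster_days=[30]))  # one booster on day 30
```

Under these assumptions, a single booster halfway through leaves a person with twice the resistance on day 60 that they would have had without it. Real decay curves would have to be measured, and they may not be exponential at all.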

The Educational Challenge

If prebunking works, why isn't it standard curriculum?

Partly inertia. Educational systems change slowly. Media literacy courses exist but are fragmented and underfunded. The science of inoculation is recent enough that it hasn't penetrated pedagogy.

Partly political. Teaching manipulation recognition is inherently contested. Whose manipulation gets taught? Conservative critics worry about liberal bias; liberal critics worry about false balance. The techniques are neutral, but examples are political.

Partly structural. Schools prepare students for tests, and manipulation recognition isn't on tests. Teachers lack time and training. The incentives don't align with the need.

And partly the platforms themselves resist. Teaching people to recognize manipulation threatens engagement-driven business models. Platforms have shown limited enthusiasm for making users more resistant to their own attention-capture techniques.

This creates a paradox: we know how to build resistance, but we lack the institutional will to deploy it at scale. The knowledge exists; the implementation doesn't.

Finland offers a model. After Russian disinformation campaigns targeted the country, Finland integrated media literacy and manipulation recognition throughout its curriculum, starting in primary school. Finland consistently ranks at or near the top of European indices of resilience to disinformation.

It can be done. It just requires deciding that it matters.

DIY Inoculation

You can inoculate yourself:

Study manipulation techniques. Learn the classics: emotional appeals, false authority, scarcity, social proof. Learn the modern additions: bot amplification, coordinated inauthentic behavior, algorithmic manipulation. Understanding the techniques helps you recognize them.

Practice creating propaganda. Understanding how it's made improves detection. The "Bad News" game and similar tools let you experience the creator's perspective.

Engage with opposing views. Don't just consume confirming information. Seek out the best versions of opposing arguments and practice refuting them. This strengthens your positions and reveals their weaknesses.

Cultivate epistemic humility. Accept that you're susceptible to manipulation. Everyone is. The belief that you're immune is itself a vulnerability.

Share techniques, not just debunks. When you see manipulation, explain the technique rather than just calling it false. Help others develop recognition skills.

This is defensive epistemology—building your mind's immune system through controlled exposure to the pathogens that want to infect it.

The Takeaway

Inoculation theory shows that prebunking—exposing people to weakened manipulation techniques before they encounter the real thing—builds resistance more effectively than debunking after the fact.

The biological analogy is precise: controlled exposure creates antibodies. Sheltering beliefs makes them vulnerable; challenging them makes them strong.

Modern applications target manipulation techniques rather than specific claims—emotional language, false authority, conspiracy logic, polarization tactics. This scales better than addressing individual lies.

The science is clear: you can be vaccinated against manipulation. The question is whether the vaccination happens by your choice, through education and deliberate exposure—or whether your natural defenses are overwhelmed because you never built them.

Further Reading

- McGuire, W. J. (1961). "The effectiveness of supportive and refutational defenses in immunizing and restoring beliefs against persuasion." Sociometry.
- Roozenbeek, J., & van der Linden, S. (2019). "Fake news game confers psychological resistance against online misinformation." Palgrave Communications.
- Lewandowsky, S., & van der Linden, S. (2021). "Countering misinformation and fake news through inoculation and prebunking." European Review of Social Psychology.

This is Part 5 of the Propaganda and Persuasion Science series. Next: "How Lies Spread"
