How Lies Spread

In 2018, researchers at MIT published what was then the largest study of how true and false news spreads online. They analyzed about 126,000 stories shared by roughly 3 million people on Twitter between 2006 and 2017.

The findings were stark: False news spread further, faster, deeper, and more broadly than true news in every category.

False stories were 70% more likely to be retweeted than true ones. True stories took six times longer to reach 1,500 people. False political news spread even faster than other categories of falsehood.

The researchers controlled for bots. The effect remained. Humans, not bots, were the primary drivers of false news spread.

This research crystallized something many suspected: the information ecosystem is structurally biased toward falsehood.

Why Lies Win

The MIT researchers also examined why false news spreads better. Several explanations stand out:

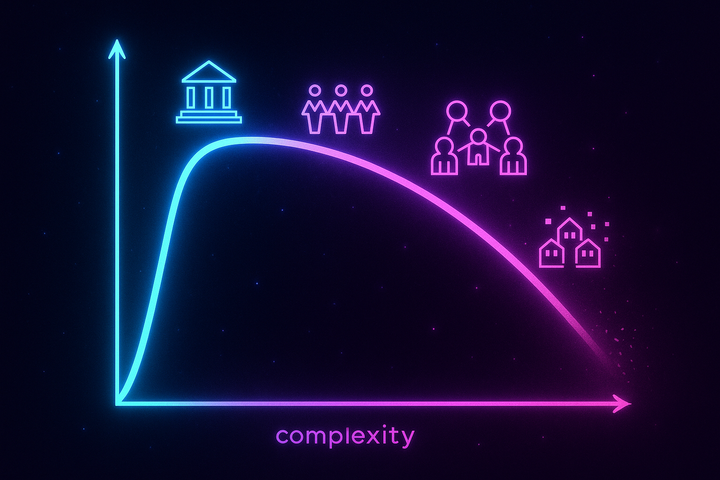

Novelty. False news is more novel than true news. True news is often predictable—it confirms existing knowledge. False news is surprising—it tells you something unexpected. We're drawn to novelty; lies deliver more of it.

Emotional response. False news triggered different emotional reactions than true news. It generated more surprise and disgust. These emotions drive sharing. We pass along what shocks us.

Simpler stories. False claims are often simpler than true ones. Reality is complicated; lies can be clean narratives. Simple stories spread more easily than complex ones.

This creates a disturbing selection pressure. If lies have structural advantages in the attention economy, the information ecosystem selects for falsehood the way evolution selects for fitness.

The platform is the environment. What survives is what spreads. What spreads is what triggers engagement. What triggers engagement is often false.
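The selection argument can be made concrete with a toy replicator model. This is a minimal sketch, not something taken from the MIT study: it assumes a feed where each item's share of attention in the next round is proportional to its current share times a resharing multiplier. The only number borrowed from the study is the 70% retweet advantage, reused here as a hypothetical per-round multiplier of 1.7. The 5% starting share and the function name `false_share_over_time` are assumptions for illustration.

```python
def false_share_over_time(initial_false_share, false_advantage, rounds):
    """Return the fraction of attention held by false items after each round.

    Toy replicator dynamics: true items are reshared at a baseline rate of 1.0,
    false items at `false_advantage` times that rate (an assumed multiplier).
    """
    shares = [initial_false_share]
    false_weight = initial_false_share
    true_weight = 1.0 - initial_false_share
    for _ in range(rounds):
        false_weight *= false_advantage  # false items are reshared more often
        # true_weight is multiplied by the baseline rate 1.0, so it is unchanged
        shares.append(false_weight / (false_weight + true_weight))
    return shares


if __name__ == "__main__":
    # Hypothetical starting point: 5% of attention goes to false items.
    for round_number, share in enumerate(false_share_over_time(0.05, 1.7, 10)):
        print(f"round {round_number:2d}: false share = {share:.1%}")
```

Under these assumptions, false items start at 5% of attention and pass 50% by the sixth round. The exact numbers mean little; the compounding is the point. A modest per-round advantage is enough for falsehood to dominate an environment that selects only for spread.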

The Architecture Problem

Social media platforms were designed for engagement, not truth. Their architecture creates the conditions for misinformation to thrive:

Algorithmic amplification. Content that gets engagement gets promoted. Lies that generate outrage get more engagement than truths that generate indifference. The algorithm amplifies the lies.

Frictionless sharing. Sharing is easy—one click. There's no pause for verification. The speed of sharing outpaces the speed of checking. Lies can circumnavigate the globe before the truth has its boots on.

Social proof at scale. When content shows high share counts, it signals credibility. But share counts don't track truth—they track spreadability. Social proof becomes evidence of nothing.

Filter bubbles. Algorithmic personalization creates environments where you only see confirming information. Lies that fit your worldview appear validated by apparent consensus.

Attention metrics as success. Journalists and media organizations are evaluated on engagement. This creates incentives to craft content that spreads—not content that's most accurate or important.

The platforms aren't neutral pipes. They're environments with selection pressures. And those selection pressures favor misinformation.
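A minimal sketch of the amplification mechanism, assuming a feed that ranks purely by an engagement score. The `Post` fields, the weights in `engagement_score`, and the sample posts are all hypothetical; real ranking systems are far more complex and are not public in this form. The point is only that accuracy never enters the sort key.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    likes: int
    shares: int
    comments: int
    is_accurate: bool  # known to us for illustration; the ranker never reads it


def engagement_score(post):
    # Hypothetical weights: shares and comments count more than likes.
    return post.likes + 3 * post.shares + 2 * post.comments


def rank_feed(posts):
    """Order posts by engagement alone, highest first."""
    return sorted(posts, key=engagement_score, reverse=True)


if __name__ == "__main__":
    feed = rank_feed([
        Post("Careful budget analysis", likes=120, shares=10, comments=15, is_accurate=True),
        Post("Outrageous fabricated claim", likes=300, shares=250, comments=400, is_accurate=False),
    ])
    for post in feed:
        print(engagement_score(post), post.title, "(accurate)" if post.is_accurate else "(false)")
```

In this made-up feed the fabricated claim ranks first simply because it provokes more reactions. Nothing in the ranking function is biased toward falsehood on purpose. The bias comes from what people engage with.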

The Debunking Problem

If lies spread faster than truth, corrections face an uphill battle.

The illusory truth effect. Repeated exposure to a claim increases belief in it, regardless of its truth value. Debunking requires repeating the lie to refute it—which inadvertently reinforces it.

Familiarity. By the time a correction appears, the lie has already spread. The lie is familiar; the correction is new. Familiarity breeds acceptance.

Motivated reasoning. People resist corrections that contradict their existing beliefs. Debunking can trigger defensive reactions that strengthen the false belief rather than weakening it.

The continued influence effect. Even when people acknowledge a correction, the original misinformation continues to influence their reasoning. Beliefs are sticky; corrections don't fully displace them.

Asymmetric reach. The original lie reaches millions. The correction reaches thousands. Most people who saw the lie never see the correction.

This is why debunking, while necessary, is insufficient. The structural advantages of lies mean corrections will always lag behind.

The Superspreaders

The MIT study found that most false news was spread by humans, not bots. But not all humans are equal spreaders.

Superspreaders exist for misinformation just as they do for disease. A small number of accounts generates a disproportionate share of false news. One widely cited 2021 analysis by the Center for Countering Digital Hate attributed roughly 65% of anti-vaccine content shared on Facebook and Twitter to just a dozen individuals.

This has policy implications. Targeting superspreaders—through removal, demotion, or labeling—can disproportionately reduce misinformation spread. You don't need to address everyone; you need to address the hubs.
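A toy calculation shows why targeting hubs is efficient. The per-account share counts below are invented for illustration and do not come from any study. `top_k_fraction` is a hypothetical helper that reports how much of the total spread comes from the k most active accounts.

```python
def top_k_fraction(shares_by_account, k):
    """Fraction of all shares produced by the k most active accounts."""
    counts = sorted(shares_by_account.values(), reverse=True)
    total = sum(counts)
    if total == 0:
        return 0.0
    return sum(counts[:k]) / total


if __name__ == "__main__":
    # Invented, heavy-tailed data: 3 hub accounts and 97 ordinary ones.
    accounts = {f"hub_{i}": 5000 for i in range(3)}
    accounts.update({f"user_{i}": 100 for i in range(97)})
    print(f"top 3 of {len(accounts)} accounts: {top_k_fraction(accounts, 3):.0%} of shares")
```

In this made-up example, 3% of accounts produce about 61% of shares. The sketch counts only direct shares and ignores downstream cascades, so it likely understates how much a hub contributes in a real network.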

But superspreaders are often protected. Many are politicians, celebrities, or media figures with large followings and institutional protection. The platforms have been reluctant to apply consistent standards to high-profile accounts.

The result: the most protected accounts are often the most harmful ones.

The Types of Misinformation

Researchers distinguish several categories:

Misinformation. False information spread without intent to deceive. The sharer believes it's true. Think of your aunt forwarding a health myth she genuinely believes.

Disinformation. False information spread with intent to deceive. The creator knows it's false. Think of state-sponsored propaganda operations.

Malinformation. True information shared with intent to harm. Think of doxing or selectively leaked private information.

The categories matter because they suggest different interventions. Misinformation might be addressed through education. Disinformation requires disruption of source networks. Malinformation raises questions about privacy and context.

Most misinformation research has focused on disinformation—deliberate falsehood. But the MIT study showed that humans voluntarily spreading misinformation are the primary vector. Solving disinformation without solving the human appetite for false novelty may not solve the problem.

The Political Asymmetry

A politically charged finding: multiple studies have found that conservatives share more misinformation than liberals.

This finding is contested—both methodologically (how do you define "misinformation"?) and politically (researchers may have biases). But the pattern appears across multiple studies using different methodologies.

Possible explanations:

Media ecosystem differences. Conservative media is more siloed, with fewer shared sources with mainstream media. This creates more space for unverified claims to circulate.

Psychological differences. Some research suggests conservatives are more motivated by threat detection, which may make them more susceptible to alarming (and often false) claims.

Platform dynamics. The platforms' content moderation may have created selection effects, pushing certain content to alternative platforms with fewer safeguards.

Historical asymmetry. One party's leader may have simply generated more false claims than the other, creating a supply-side asymmetry.

Whatever the explanation, the asymmetry complicates solutions. Interventions that appear politically neutral may have politically asymmetric effects, generating accusations of bias.

What Works?

Research suggests some interventions help:

Accuracy prompts. Simply asking people to think about accuracy before sharing reduces misinformation spread. The nudge is small but measurable.

Friction. Adding a step before sharing, such as an "are you sure you want to share this article you haven't read?" prompt, reduces reflexive sharing.

Source labeling. Indicating that content comes from unreliable sources reduces its spread. But determining reliability is contested.

Network disruption. Removing superspreader accounts significantly reduces misinformation flow. But determining whom to remove is contested.

Prebunking. Inoculation against manipulation techniques (covered in the previous article) reduces susceptibility.

Alternative amplification. Boosting accurate content rather than just demoting false content changes the information mix.

No intervention is complete. The structural advantages of misinformation remain. But the combination of multiple interventions can shift the balance.

The Takeaway

False news spreads further, faster, and deeper than true news—driven primarily by humans, not bots. The structural features of social media platforms favor misinformation: algorithmic amplification, frictionless sharing, social proof at scale.

Debunking alone is insufficient because it fights uphill against familiarity, motivated reasoning, and asymmetric reach. Prebunking and structural interventions are more promising.

The information ecosystem selects for engagement, not truth. Until that selection pressure changes, lies will continue to have structural advantages.

Understanding this is necessary for navigating the modern information environment. You're not in a truth-seeking system. You're in an engagement-maximizing system that happens to spread truths when they're engaging and falsehoods when they're more so.

Further Reading

- Vosoughi, S., Roy, D., & Aral, S. (2018). "The spread of true and false news online." Science.
- Wardle, C., & Derakhshan, H. (2017). "Information Disorder." Council of Europe.
- Pennycook, G., & Rand, D. G. (2021). "The Psychology of Fake News." Trends in Cognitive Sciences.

This is Part 6 of the Propaganda and Persuasion Science series. Next: "Computational Propaganda"
