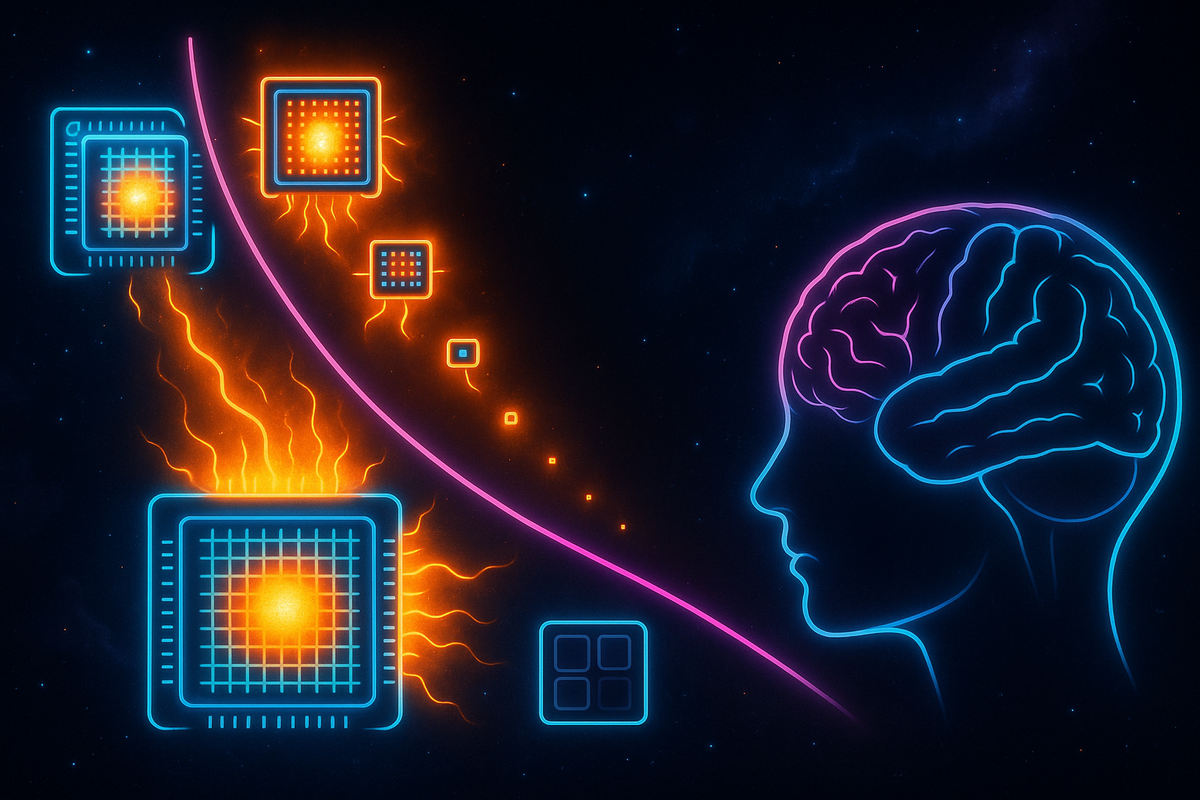

Moore's Law: Energy Per Computation

In 1965, Gordon Moore made a prediction that would shape the next half-century of civilization: the number of components on a chip would double every year. In 1975 he revised the pace to roughly every two years, and that version held for decades. A modern smartphone contains more transistors than all the computers on Earth did in 1970.

But here's the thing Moore didn't mention, the part that actually mattered for what came next: the transistor count doubled without power consumption doubling along with it. The real magic wasn't cramming more switches onto silicon. It was making each switch cheaper to flip.

This is the story of computation's energy diet—and why that diet is ending.

The Hidden Law Inside Moore's Law

Moore's Law gets all the press. But the law that actually enabled the computer revolution was discovered by Robert Dennard at IBM in 1974.

Dennard scaling says that as transistors shrink, their power consumption shrinks in proportion to their area. Make a transistor half the size in each dimension, and it uses about half the voltage and half the current. Since power is voltage times current, the power per transistor drops by roughly 75%.

This seems like a minor technical detail. It's not. It's the entire reason you have a smartphone instead of a desktop, a laptop instead of a mainframe, an Apple Watch instead of nothing at all.

Without Dennard scaling, shrinking transistors would just give you hotter chips. Twice the transistors would mean twice the power, twice the heat. Cooling would become the bottleneck. Beyond a certain density, chips would simply melt.

With Dennard scaling, you got a free lunch. Twice the transistors at the same power. Or the same number of transistors at half the power. The joule-per-operation dropped and kept dropping. Every two years, computation got either twice as dense or half as expensive in energy terms.
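The scaling arithmetic can be sketched in a few lines. This is a minimal illustration of idealized Dennard scaling, not a device model: the function name `dennard_scale` and the shrink factor `k` are hypothetical names chosen for this example, and the sketch assumes voltage and current each scale by exactly 1/k.

```python
import math


def dennard_scale(k: float) -> dict[str, float]:
    """Idealized Dennard scaling for a linear shrink factor k (k > 1 shrinks).

    Assumption: voltage and current each scale by 1/k.
    All results are relative to the unscaled transistor (1.0 = unchanged).
    """
    voltage = 1.0 / k
    current = 1.0 / k
    power_per_transistor = voltage * current   # P = V * I, so 1/k^2
    transistors_per_area = k ** 2              # each device occupies 1/k^2 the area
    power_density = power_per_transistor * transistors_per_area
    return {
        "voltage": voltage,
        "current": current,
        "power_per_transistor": power_per_transistor,
        "transistors_per_area": transistors_per_area,
        "power_density": power_density,
    }


# k = 2: the "half the size" case, power per transistor falls to a quarter.
half_size = dennard_scale(2.0)
# k = sqrt(2): one density doubling, power per transistor halves.
one_generation = dennard_scale(math.sqrt(2.0))

for label, result in [("k = 2", half_size), ("k = sqrt(2)", one_generation)]:
    print(label, {name: round(value, 3) for name, value in result.items()})
```

In both cases the power density stays at 1.0, which is the free lunch: more transistors in the same area at the same total power.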

This is why your phone—with billions of transistors running billions of operations per second—can run on a battery for a day. The energy cost of a single transistor switch has dropped by a factor of about 10 million since the 1970s.

The Efficiency Revolution Nobody Noticed

Between 1970 and 2005, something unprecedented happened: we got radically better at converting electricity into computation.

A 1970s mainframe might consume 100 kilowatts to perform a million operations per second. Today's processors perform trillions of operations per second on a few hundred watts. That's not a modest improvement. It's roughly a billion-fold increase in computational efficiency over 50 years.
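The billion-fold figure follows from dividing operations per second by watts. The sketch below redoes that arithmetic. The mainframe numbers come from the paragraph above; the modern figures (3 trillion operations per second at 300 watts) are assumed stand-ins for "trillions" and "a few hundred watts", not measurements of any particular chip.

```python
def ops_per_joule(ops_per_second: float, watts: float) -> float:
    """Operations delivered per joule (one watt is one joule per second)."""
    return ops_per_second / watts


# 1970s mainframe, as quoted in the text: 1 million ops/s at 100 kW.
mainframe = ops_per_joule(1e6, 100e3)

# Modern processor: assumed 3 trillion ops/s at 300 W (illustrative values).
modern = ops_per_joule(3e12, 300.0)

improvement = modern / mainframe
print(f"mainframe: {mainframe:.0f} ops/J")
print(f"modern:    {modern:.1e} ops/J")
print(f"improvement: {improvement:.1e}x")
```

Different but equally plausible modern inputs move the result by a factor of a few in either direction, which is why "roughly" is the right word.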

Nothing else in human technology has improved that fast. Internal combustion engines improved maybe 2x in efficiency over the same period. Lighting went from incandescent to LED, gaining perhaps 10x. But computation improved by factors of billions.

This is the hidden energy story of the information age. We didn't just get faster computers. We got computationally cheaper thoughts.

Consider what this enabled:

- The entire internet runs on maybe 2-3% of global electricity
- A Google search costs about 0.3 watt-hours, the equivalent of running a 100-watt light bulb for about 11 seconds
- Training a large language model costs millions of dollars in electricity, but running it costs fractions of a cent per query
- Every digital operation you've ever done (every email, every video, every transaction) required orders of magnitude less energy than any previous information technology
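The light-bulb comparison is a unit conversion, sketched below. The 0.3 watt-hour figure is the one quoted above; the bulb wattages are assumptions, chosen to show which bulb the 11-second figure corresponds to.

```python
JOULES_PER_WATT_HOUR = 3600.0  # 1 Wh = 1 W sustained for 3600 s

# Energy of one search, from the 0.3 Wh figure quoted in the text.
search_energy_joules = 0.3 * JOULES_PER_WATT_HOUR


def seconds_of_bulb(energy_joules: float, bulb_watts: float) -> float:
    """How long a bulb of the given wattage runs on this much energy."""
    return energy_joules / bulb_watts


# Assumed bulb sizes for illustration.
for bulb_watts in (100.0, 60.0):
    seconds = seconds_of_bulb(search_energy_joules, bulb_watts)
    print(f"{bulb_watts:.0f} W bulb: {seconds:.1f} s")
```

The 11-second figure matches a 100-watt bulb; a 60-watt bulb would run for about 18 seconds on the same energy.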

When people worry about AI's energy consumption (a reasonable worry, which we'll get to), they often miss that AI is possible at all only because of this efficiency revolution. If computation still cost what it cost in 1980, a single ChatGPT conversation would require a power plant.

The Death of Dennard Scaling

Around 2005, something broke.

Engineers at Intel and AMD noticed that the Dennard scaling roadmap was failing. As transistors shrank below about 65 nanometers, the physics changed. Electrons started doing things they weren't supposed to do—tunneling through insulators, leaking where they shouldn't leak.

The technical term is "leakage current." The intuitive explanation: when transistors get small enough, quantum effects start mattering. Electrons don't respect boundaries the way they do at larger scales. They seep through barriers that classical physics says should stop them.

The result: smaller transistors no longer meant proportionally lower power. The free lunch was over.

Moore's Law continued. Dennard scaling ended. Transistors kept shrinking, but power stopped dropping proportionally.

The consequences were immediate:

1. The clock speed plateau. Processor frequencies had been climbing for decades, from kilohertz in the 1970s to megahertz in the 1980s to gigahertz in the 2000s. Then they stopped. We've been stuck at roughly 3-5 GHz since 2005. Not because we can't make faster transistors, but because we can't cool them.

2. The multicore pivot. Unable to make individual processors faster, chip designers started putting multiple processors on the same chip. If you can't speed up each horse, hitch more horses to the wagon. This is why your laptop has 8 cores instead of one very fast one.

3. The dark silicon problem. Chips have so many transistors that they can't all be powered simultaneously without overheating. Modern processors have "dark silicon"—transistors that must remain off to keep the chip cool. We build more than we can use.

4. The power wall. Data centers started hitting limits not of computation but of power and cooling. The energy cost per operation stopped falling at its historical pace. Computation kept getting denser, but efficiency gains slowed to a crawl.

Energy Becomes the Bottleneck

For 50 years, the primary constraint on computation was transistor density. We kept making things smaller, and performance improved.

Now the primary constraint is energy. We can pack more transistors onto chips, but we can't power them all. We can build bigger data centers, but we can't cool them. We can train larger AI models, but the electricity bills become problematic.

The AI moment arrived just as the efficiency revolution ended. The irony is sharp. We finally have algorithms that can do remarkable things—understand language, generate images, reason about problems—and they arrived exactly when energy was becoming expensive again.

GPT-3 training consumed an estimated 1.3 gigawatt-hours of electricity. GPT-4 consumed significantly more (OpenAI won't say how much, which tells you something). Each generation requires roughly 10x more compute. If efficiency isn't improving, that means 10x more energy.
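The "10x more compute means 10x more energy" reasoning can be written as a small projection. This is purely illustrative: the function name, the `efficiency_gain` parameter, and the 2x value used for it are hypothetical, and the outputs are not estimates for any real model. Only the 1.3 GWh baseline and the 10x-per-generation growth come from the text.

```python
GPT3_TRAINING_GWH = 1.3  # estimate quoted in the text


def projected_training_energy_gwh(
    generations_ahead: int,
    compute_growth: float = 10.0,   # from the text: ~10x compute per generation
    efficiency_gain: float = 1.0,   # assumed hardware gain per generation (1.0 = none)
) -> float:
    """Training energy if compute grows and efficiency improves each generation."""
    net_growth = compute_growth / efficiency_gain
    return GPT3_TRAINING_GWH * net_growth ** generations_ahead


for generations in (1, 2):
    flat = projected_training_energy_gwh(generations)
    improving = projected_training_energy_gwh(generations, efficiency_gain=2.0)
    print(f"+{generations} gen: {flat:.1f} GWh flat, {improving:.1f} GWh with 2x gains")
```

Even with an assumed 2x efficiency gain per generation, energy still grows 5x each step, so efficiency only slows the curve.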

The AI labs are building their own power plants now. That's not a metaphor. Microsoft, Google, and Amazon are all investing in nuclear power specifically to run their data centers. They've calculated that the energy grid can't supply what they need, so they're building dedicated generation capacity.

This is a remarkable historical inversion. For decades, computation improved so fast that energy barely mattered. Now computation is hitting a wall, and energy determines what's possible.

The Joule-Per-Flop Metric

Engineers have a term for this: joule per FLOP (floating-point operation). It measures how much energy is required for a single computational operation.

In 1980, a floating-point operation cost roughly a microjoule—a millionth of a joule. By 2010, it cost about a picojoule—a trillionth. A million-fold improvement.

Since 2010? The improvement has been maybe 10x. And that's from increasingly exotic techniques—specialized AI chips, new materials, clever architectures. The easy gains from shrinking transistors are gone.
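One way to compare the two eras is to convert each improvement factor into a halving time for energy per operation. The sketch below does that. The 30-year span (1980 to 2010) and the factors come from the text; the 15-year span for "since 2010" is an assumption, since the text gives no end date.

```python
import math


def halving_time_years(improvement_factor: float, years: float) -> float:
    """Years needed for energy per operation to halve, given a total improvement."""
    return years / math.log2(improvement_factor)


# 1980 to 2010: a million-fold improvement over 30 years (from the text).
early_era = halving_time_years(1e6, 30.0)

# Since 2010: about 10x, over an assumed 15 years.
recent_era = halving_time_years(10.0, 15.0)

print(f"1980-2010: energy per operation halved every {early_era:.1f} years")
print(f"since 2010: halved every {recent_era:.1f} years")
```

Under these assumptions the halving time stretches from about a year and a half to about four and a half years.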

The brain offers a useful comparison. The human brain performs roughly 10^15 operations per second (estimates vary wildly, but this is a common figure) on about 20 watts of power. That's about 2 x 10^-14 joules per operation, or 20 femtojoules.

Current silicon is at roughly 10^-12 joules per operation, or 1 picojoule. On these figures, the brain is about 50 times more energy-efficient than silicon.
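Because the brain estimate is so uncertain, it helps to recompute the comparison directly from its inputs. The sketch below uses the three inputs given in the text: 10^15 operations per second, 20 watts, and 10^-12 joules per silicon operation. The variable names are chosen for this example, and the result moves by an order of magnitude for every order of magnitude of change in the operations estimate.

```python
brain_ops_per_second = 1e15      # common estimate; the true figure is uncertain
brain_watts = 20.0               # joules per second
silicon_joules_per_op = 1e-12    # 1 picojoule, as quoted in the text

# Energy per operation is power divided by operation rate.
brain_joules_per_op = brain_watts / brain_ops_per_second

# How many times less energy the brain uses per operation.
efficiency_ratio = silicon_joules_per_op / brain_joules_per_op

print(f"brain:   {brain_joules_per_op:.1e} J per operation")
print(f"silicon: {silicon_joules_per_op:.1e} J per operation")
print(f"ratio:   {efficiency_ratio:.0f}x")
```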

This efficiency gap is why neuromorphic computing—chips designed to mimic neural architecture—is getting serious attention. If we can't improve standard silicon efficiency much further, maybe we can learn from the system that evolution already optimized for energy-efficient computation.

Three Futures

Where does computation go from here? The energy constraint suggests three possible paths:

Path 1: Nuclear-powered AI. The big tech companies seem to be betting here. Build enough nuclear plants, and you can brute-force your way to more computation. This works but has obvious problems: nuclear is expensive, slow to build, and politically contentious. If AI progress depends on reactor construction, it may slow significantly.

Path 2: Algorithmic efficiency. Make the software smarter to compensate for hardware stalling. There's evidence this is possible—some recent AI models achieve similar performance to larger predecessors with 10-100x less compute. But algorithmic improvements are unpredictable. You can't roadmap insights.

Path 3: New computing paradigms. Neuromorphic chips, optical computing, quantum computing, biological computing (using actual neurons). Each promises dramatic efficiency gains. None is ready for mainstream use. The timeline for any of them to replace silicon is measured in decades, not years.

The likely reality is some combination of all three, plus efficiency gains from better data center design, improved cooling, and optimized power delivery. Progress will continue, but slower than we're used to.

What This Means for Intelligence

Here's why this matters for a series on energy and civilization:

Intelligence has an energy cost. Thinking—whether biological or artificial—requires joules. The more thinking, the more joules.

For 50 years, the cost dropped so fast we could ignore it. Computation felt like it was becoming free. We built business models on the assumption that processing was effectively costless compared to other inputs.

That assumption is ending. Computation has a floor—a minimum energy cost below which physics doesn't let you go. We're not at that floor yet, but we're approaching it.
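The text does not name the floor. One well-known candidate is the Landauer limit, which puts the minimum energy to erase one bit in irreversible computation at k_B T ln 2. The sketch below evaluates it at an assumed room temperature of 300 K; the function name is hypothetical. It is a per-bit bound, so it is not directly comparable to the per-operation figures quoted earlier, since a single floating-point operation involves many bit-level steps.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the SI system


def landauer_limit_joules(temperature_kelvin: float = 300.0) -> float:
    """Minimum energy to erase one bit at the given temperature: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


floor_joules_per_bit = landauer_limit_joules()
print(f"Landauer limit at 300 K: {floor_joules_per_bit:.2e} J per bit erased")
```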

The entities that will be able to think the most—run the most AI, process the most data, train the most models—will be the entities with access to the most energy. This was always true in some theoretical sense. It's becoming practically true now.

This connects computation back to the energy story we've been telling. The steam engine broke the muscle ceiling. The electrical grid distributed power universally. Oil enabled mobility. And now, the end of Dennard scaling is making computation an energy-intensive activity again.

The next article will take this logic to its planetary conclusion: what if we classified civilizations not by their political systems or cultural achievements, but by how much energy they could command? That's the Kardashev scale, and it reframes everything we've been discussing.

Further Reading

- Dennard, R. H. et al. (1974). "Design of Ion-Implanted MOSFETs with Very Small Physical Dimensions." IEEE Journal of Solid-State Circuits.
- Esmaeilzadeh, H. et al. (2011). "Dark Silicon and the End of Multicore Scaling." IEEE Micro.
- Thompson, N. C. et al. (2020). "The Decline of Computers as a General Purpose Technology." MIT Working Paper.

This is Part 7 of the Energy of Civilization series. Next: "Kardashev Scale: Civilizations as Energy Consumers."
