Synthesis: Engineering What Spreads

This series has laid out the mechanics: how beliefs spread through networks, how the distinction between simple and complex contagions determines what can spread, how emotions propagate beneath conscious awareness, how cascades can detach consensus from truth, how network topology creates the physics of transmission, and how R0 determines survival.

Now the question: what do we do with this knowledge?

The answer depends on what you're trying to accomplish. Are you trying to spread something? Stop something from spreading? Understand your own susceptibility? Design systems that handle contagion better?

Each goal implies different applications of the same science.

If You're Trying to Spread Something

The framework suggests a design process:

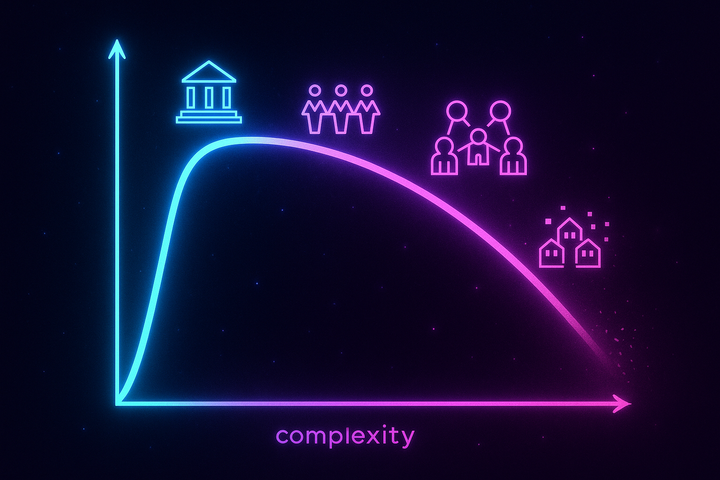

Step 1: Classify your contagion. Is this a simple contagion (spreading on single exposure) or a complex contagion (requiring reinforcement)? Information awareness is simple. Behavior change is complex. Political beliefs are often hybrid—easy to shift superficially, hard to convert into action.

Step 2: Match strategy to type. For simple contagions: maximize reach, bridge clusters, go viral. For complex contagions: maximize density, create reinforcement clusters, build local saturation before attempting scale. The viral playbook only works for simple contagions.
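To make the difference concrete, here is a minimal sketch of a threshold model on a toy ring network. Everything in it is an illustrative assumption rather than anything measured in this series: the ring of 40 nodes, the four ties per node, and the helper names `ring_lattice` and `spread`. A threshold of 1 stands in for a simple contagion and a threshold of 2 for a complex one.

```python
def ring_lattice(n, k):
    """Toy network: every node is tied to its k nearest neighbors on each side."""
    return {
        node: {(node + offset) % n for offset in range(-k, k + 1) if offset != 0}
        for node in range(n)
    }


def spread(neighbors, seeds, threshold):
    """Run adoption in rounds until nobody new adopts.

    A node adopts once at least `threshold` of its neighbors have adopted.
    threshold=1 models a simple contagion, threshold>=2 a complex one.
    """
    adopted = set(seeds)
    while True:
        newly = {
            node
            for node, nbrs in neighbors.items()
            if node not in adopted and len(nbrs & adopted) >= threshold
        }
        if not newly:
            return adopted
        adopted |= newly


network = ring_lattice(n=40, k=2)
scattered_seeds = {0, 20}  # far apart: the "maximize reach" playbook
clustered_seeds = {0, 1}   # adjacent: the "local saturation" playbook

simple_scattered = len(spread(network, scattered_seeds, threshold=1))
complex_scattered = len(spread(network, scattered_seeds, threshold=2))
complex_clustered = len(spread(network, clustered_seeds, threshold=2))
print(simple_scattered, complex_scattered, complex_clustered)  # 40 2 40
```

In this toy setup the scattered seeds are enough for the simple contagion to reach everyone, while the complex contagion never leaves its two seeds until they are placed side by side. Real networks are messier, but the direction of the effect is the point.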

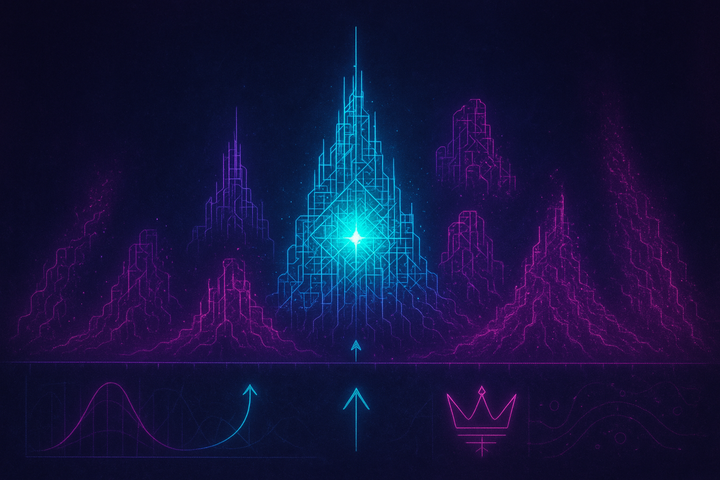

Step 3: Design for R0 > 1. R0 is roughly transmissibility × contacts × duration. Make your idea simple enough to transmit without degradation. Target high-connectivity nodes for initial seeding. Tie the idea to ongoing concerns so it persists. If any component is too low, the spread dies.
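As a rough illustration of why one weak factor is fatal, the product can be sketched in a few lines. The function name and all the numbers below are hypothetical, chosen only to show the multiplication: transmissibility is read as the chance of adoption per contact, contacts as contacts per day, and duration as the number of days the idea stays worth passing on.

```python
def reproduction_number(transmissibility, contacts_per_day, duration_days):
    """R0 as the product of the three factors named in the text."""
    return transmissibility * contacts_per_day * duration_days


# Hypothetical ideas that differ in only one factor.
scenarios = {
    "baseline": reproduction_number(0.05, 10, 3),
    "forgettable (short duration)": reproduction_number(0.05, 10, 1),
    "hard to explain (low transmissibility)": reproduction_number(0.01, 10, 3),
}

for name, r0 in scenarios.items():
    verdict = "spreads" if r0 > 1 else "dies out"
    print(f"{name}: R0 = {r0:.2f} -> {verdict}")
```

Under these assumed numbers only the baseline clears 1. Because the factors multiply, strength in two of them cannot rescue a near-zero third.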

Step 4: Seed the right clusters. Early conditions matter. For cascades, the first few adopters determine the direction. Identify communities where your idea fits existing identities and interests. Start there; let them amplify.

Step 5: Account for network topology. Map the network if you can. Identify hubs, bridges, density patterns. Target super-spreaders. Create wide bridges between clusters if you need complex contagion to cross.

This is ethically neutral advice. It works for public health campaigns and propaganda, for social movements and scams. The science of spread doesn't care about the content being spread.

If You're Trying to Stop Something

The inverse logic applies:

For simple contagions: Increase path length. Remove bridges. Quarantine hubs. This is the logic of social distancing—make the network harder to traverse. The same principle works for information: make it harder for bad ideas to bridge into new clusters.

For complex contagions: Decrease clustering. Introduce diversity. Prevent local saturation. Complex contagions need reinforcement, so the intervention is to prevent the social proof that triggers adoption.

For cascades: Disrupt early. Introduce contradicting signals before consensus locks in. Surface private information that contradicts the emerging cascade direction. The later you intervene, the harder it is—cascade momentum builds.
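A stripped-down sequential model shows why timing matters. This is a simplified counting rule assumed for illustration, not a full Bayesian treatment: each person holds a private signal (1 or 0), sees every earlier public choice, and abandons their own signal once earlier choices lean one way by two or more. The function name `run_cascade` and the signal sequences are hypothetical.

```python
def run_cascade(private_signals):
    """Return the public choices made by people deciding one after another.

    Simplified rule (an assumption): if earlier choices favor one option by
    a margin of two or more, copy the crowd; otherwise follow your own signal.
    """
    choices = []
    for signal in private_signals:
        lead = sum(1 if choice == 1 else -1 for choice in choices)
        if lead >= 2:
            choices.append(1)       # crowd outweighs the private signal
        elif lead <= -2:
            choices.append(0)
        else:
            choices.append(signal)  # private signal still decides
    return choices


# Two early 1-signals lock everyone in, although four of six signals say 0.
locked_in = run_cascade([1, 1, 0, 0, 0, 0])
# One contradicting signal in second position keeps the private signals in play.
disrupted = run_cascade([1, 0, 0, 0, 0, 0])
print(locked_in)  # [1, 1, 1, 1, 1, 1]
print(disrupted)  # [1, 0, 0, 0, 0, 0]
```

In the first run the third person onward never reveals what they privately know, which is why the public consensus stops tracking the evidence. The same contradicting signal arriving at position three or later would change nothing.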

For emotional contagion: Limit exposure. Curate feeds. Create emotional firewalls. The simplest intervention is just reducing the volume of emotional content reaching vulnerable populations.

Target the super-spreaders. In any network-mediated spread, a small number of nodes generate disproportionate transmission. Identify them. If you can change their behavior—debunk to them first, moderate them, remove their amplification—you disproportionately reduce spread.
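A toy network makes the asymmetry visible. The sketch below assumes a hypothetical layout of four tight three-person clusters joined only through a single hub, and compares how far something starting in one cluster can travel when the hub is removed versus when a peripheral node is removed. The node names and the `reachable` helper are illustrative.

```python
from collections import deque


def reachable(neighbors, start, removed=frozenset()):
    """Breadth-first search: every node a spread from `start` could reach."""
    if start in removed:
        return set()
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbors[node]:
            if nxt not in seen and nxt not in removed:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Hypothetical network: four triangles (a, b, c, d) bridged only by "hub".
edges = []
for cluster in "abcd":
    m1, m2, m3 = (f"{cluster}{i}" for i in (1, 2, 3))
    edges += [(m1, m2), (m2, m3), (m1, m3), ("hub", m1)]

neighbors = {}
for u, v in edges:
    neighbors.setdefault(u, set()).add(v)
    neighbors.setdefault(v, set()).add(u)

# Use the highest-degree node as a crude stand-in for "super-spreader".
top_node = max(neighbors, key=lambda node: len(neighbors[node]))

reach_full = len(reachable(neighbors, "a2"))
reach_without_hub = len(reachable(neighbors, "a2", removed={top_node}))
reach_without_leaf = len(reachable(neighbors, "a2", removed={"d3"}))
print(top_node, reach_full, reach_without_hub, reach_without_leaf)  # hub 13 3 12
```

Removing one peripheral node costs the spread a single person. Removing the hub confines it to the cluster where it started. Degree is only one way to find such nodes, and real networks rarely have a single clean chokepoint.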

The challenge: these interventions can be authoritarian. Removing bridges is censorship. Quarantining hubs is deplatforming. Introducing contradicting signals is counter-propaganda. The tools for stopping harmful spread are also tools for suppressing legitimate dissent.

If You're Trying to Understand Yourself

The framework has implications for personal epistemics:

You're not thinking independently. Your beliefs, emotions, and behaviors are substantially network-determined. The question isn't whether you're influenced—you are—but whether you can recognize the influence and compensate.

Your opinions came from somewhere. Trace them back. Who did you hear this from? Who did they hear it from? Is there independent verification, or are you at the end of a cascade chain where everyone is copying everyone else with no anchor to reality?

Your emotions aren't fully yours. The baseline mood you feel is partly inherited from your network. If everyone you follow online is angry, you're more likely to be angry yourself. The effect is measurable. The intervention is simple: change your inputs.

Your network position shapes your exposure. Where you sit in the network—hub or periphery, bridge or cluster-member—determines what reaches you. Some people are nodes that encounter everything; others are sheltered by network structure. Neither is better; both should be aware.

You can engineer your own susceptibility. Diverse information sources. Strong local ties that buffer against weak-tie contagion. Skepticism about cascades. Explicit effort to seek disconfirming evidence. These are network-aware epistemic hygiene practices.

The goal isn't to become immune to influence—that's impossible for social primates. The goal is to become a more selective filter, better at catching low-quality contagions while remaining open to high-quality ones.

If You're Designing Systems

The implications for platform design, policy, and institutional architecture:

Algorithms are contagion engines. Social media algorithms optimize for engagement, which optimizes for emotional intensity, which optimizes for contagion. If you're designing a platform, your algorithmic choices create the R0 environment for every idea on the platform. You are shaping what can spread.

Engagement ≠ value. The current paradigm assumes that what people click on is what they want. But contagion dynamics mean that what spreads is what's designed to spread—not necessarily what's true, useful, or wanted on reflection. Platforms optimized for engagement become pathogen nurseries.

Friction can be good. Reducing transmission (adding verification steps, slowing resharing, limiting reach) reduces R0 across the board. This slows good ideas too, but it particularly slows high-R0 junk that depends on frictionless transmission. The tradeoff may be worth it.

Network structure is policy. Who can follow whom, how content gets distributed, what recommendations the algorithm makes—these determine network topology, which determines contagion dynamics. Platform architecture is implicitly an intervention on every spreading process that occurs within it.

Transparency helps. If users can see cascade dynamics—can see that a trending topic started with coordinated accounts, can see that their feed is tilted toward outrage—they can compensate. Opacity protects manipulation.

Heterogeneity resists cascades. Systems that preserve diverse initial conditions, that don't homogenize quickly, that maintain independent information sources—these are resistant to cascade lock-in. Monocultures are vulnerable.

The Uncomfortable Truths

This science reveals things we'd rather not know:

Truth doesn't win automatically. False ideas with high R0 beat true ideas with low R0. Reality doesn't have privileged access to transmission dynamics. The epistemologically correct can lose to the virally optimized. This isn't cynicism—it's arithmetic. A lie engineered for spread beats a truth that isn't.
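The arithmetic can be spelled out under a deliberately idealized assumption: each adopter passes the idea to R0 new people per generation, with no saturation and no overlap between audiences. The R0 values below are hypothetical and `cumulative_reach` is an illustrative helper.

```python
def cumulative_reach(r0, generations):
    """Expected total adopters from one seed after the given number of
    generations, assuming every adopter recruits r0 others on average."""
    return sum(r0 ** g for g in range(generations + 1))


# Hypothetical values: a falsehood tuned for spread vs. a truth that is not.
lie_reach = cumulative_reach(r0=1.5, generations=10)
truth_reach = cumulative_reach(r0=0.8, generations=10)
print(f"lie: ~{lie_reach:.0f} people, truth: ~{truth_reach:.1f} people")
```

With these assumed numbers the lie reaches roughly 171 people in ten generations, while the truth stalls below 5 and can never exceed that ceiling no matter how long it runs. Content does not appear anywhere in the calculation, which is the uncomfortable part.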

Individual rationality ≠ collective rationality. Every mechanism in this series involves individually rational choices producing collectively irrational outcomes. Copying others is smart; universal copying produces cascades. Updating on social proof is reasonable; universal updating produces contagion. The aggregation structure is the problem. You can't fix it by making individuals smarter—you have to fix the aggregation structure.

Awareness isn't enough. Knowing these dynamics doesn't make you immune to them. You can understand contagion perfectly and still catch emotions from your feed. You can know about cascades and still update on perceived consensus. The mechanisms operate beneath conscious correction. Understanding is necessary but not sufficient.

Free will is contextual. In certain network configurations, you'll adopt certain beliefs. Change the configuration, you'll adopt different beliefs. This isn't metaphysics—it's the measurable effect of network position on individual cognition. Whatever "free will" means, it doesn't mean independence from these forces.

The tools of liberation are the tools of control. Everything in this series can be used to help people—better health behaviors, truer beliefs, appropriate emotions—or to manipulate them. The science doesn't discriminate. Propaganda bureaus and public health agencies read the same papers.

The Historical Moment

We're at a peculiar point in the history of contagion science.

For most of human history, social contagion operated at local scale, limited by the speed of travel and the bandwidth of face-to-face communication. Ideas could spread, but slowly. Emotional contagion was bounded by the size of crowds. Cascades could form, but they took months or years to reach scale.

Social media changed the physics. Now:

- Contact rates are orders of magnitude higher. You're exposed to more idea-carriers per day than your great-grandparents were in a year.
- Transmission latency collapsed. An idea can circle the globe before correction catches up.
- Network topology shifted toward sparser global connections, degrading complex contagion while accelerating simple contagion.
- Algorithmic curation introduced a new kind of selection pressure, optimizing for engagement regardless of truth or health.

We're running ancient emotional and cognitive software on modern network hardware. The software evolved for small groups, slow transmission, strong ties. The hardware delivers weak ties at scale, optimized for intensity. The mismatch produces pathologies we're only beginning to understand.

This isn't technopessimism. The same infrastructure that spreads outrage can spread solidarity. The same dynamics that lock in false cascades can lock in true ones. The network is a channel; what flows through it is partly up to us. But only partly. And only if we understand the physics.

The Interventions We Need

Given everything in this series, what would actually help?

Platform reform that accounts for contagion. Engagement optimization as the sole metric produces the pathologies we're seeing. Alternative metrics—time well spent, verified accuracy, emotional health—could reshape the R0 environment. This requires either regulation or competitive pressure that doesn't yet exist.

Media literacy that includes network dynamics. Current media literacy focuses on evaluating individual sources. That's necessary but insufficient. People also need to understand cascade dynamics, emotional contagion, the difference between simple and complex spread. "Is this source credible?" is not enough on its own. "Am I at the end of a cascade chain?" gets closer.

Institutional designs that preserve diversity. Cascades thrive on homogeneity. Institutions that deliberately cultivate dissent, red teams, devil's advocates—these are structural resistance to lock-in. Most institutions do the opposite, rewarding consensus and punishing deviation.

Personal network curation. You can't opt out of network influence. But you can choose your networks more deliberately. Diverse information sources. Strong local communities. Conscious limits on emotional-contagion vectors. This isn't isolation—it's selective permeability.

Research that maps the territory. Network science is still young. We know a lot about what spreads and why, but we know less about effective intervention. The empirical base for what actually reduces harmful contagion while preserving beneficial spread is thin. We need more experiments, more data, more refined models.

What Survives

Amid the complications, some principles hold:

Diversity is resilience. Networks with diverse information sources, diverse viewpoints, diverse initial conditions resist cascade lock-in and unhealthy convergence. Homogeneity is fragility.

Local matters for behavior. If you want to change what people do (not just what they think), you need dense clusters, strong ties, reinforcement. Viral reach is insufficient; depth is necessary.

Structure shapes content. The architecture of our social networks—their topology, their algorithms, their friction—determines what can spread. You can't understand what people believe without understanding the network that delivered those beliefs.

You are not separate. The individual-as-island model doesn't survive contact with the science. You're a node, embedded, influenced, influencing. The boundaries of yourself are more porous than you experience them to be.

The Meta-Lesson

This series has been an exercise in exactly the phenomenon it describes.

These ideas—about contagion, networks, cascades—are themselves ideas that spread or die by the rules described within them. This synthesis is optimized for a certain R0: simplified enough to transmit, emotionally engaging enough to motivate sharing, long-duration because the topic matters persistently.

Whether you adopt these frameworks depends on your network position, your prior beliefs, your threshold for complex versus simple reception. If everyone you know already believes in network effects, you'll find this confirming. If no one does, you'll find it alien.

The science of social contagion is subject to social contagion.

What survives from this series will be the ideas that achieve R0 > 1 in your network—the ideas that lodge, that you share, that get reinforced, that become part of how you see social dynamics.

Whether that's the truth, or just what spreads, is a question the science itself cannot answer. But at least now you know the question to ask.

Core Takeaways

1. Simple contagions spread through weak ties and bridges. Complex contagions need strong ties and clustering. Most interventions fail because they confuse the two.

2. Emotional contagion is automatic and amplified online. The internet became a mood-synchronization machine optimized for intensity, which is often negative.

3. Cascades can detach belief from evidence. Sequential observation creates path dependency where early signals get locked in regardless of truth.

4. Network topology determines the physics of spread. Small-world structure, hub dominance, clustering patterns—these constrain what can propagate.

5. R0 > 1 is survival. R0 < 1 is extinction. For ideas, as for pathogens. The factors determining R0 can be engineered—for good or ill.

6. You are a node. Your beliefs and emotions are partly network-inherited. Awareness helps; immunity doesn't exist.

This concludes the Network Contagion series. The next series explores Economics of Trust—how cooperation emerges, persists, and collapses in social systems.
