Nudge Theory: Choice Architecture

In 2008, economist Richard Thaler and legal scholar Cass Sunstein published Nudge, arguing that how choices are presented—the "choice architecture"—powerfully shapes what people choose.

The book sparked a revolution in policy and became controversial for the same reason: it made explicit what had always been implicit. Every choice is presented somehow. That presentation influences decisions. There's no neutral way to offer a choice.

If choice architecture is unavoidable, Thaler and Sunstein argued, we might as well design it thoughtfully. They called this "libertarian paternalism"—preserving freedom while steering toward better outcomes.

The implications for understanding influence are profound. You're being nudged constantly. The question is whether you notice.

The Basics of Nudging

A nudge is any aspect of choice architecture that alters behavior in predictable ways without forbidding options or significantly changing economic incentives.

Key examples:

Defaults. The most powerful nudge. In the best-known comparison, effective consent rates for organ donation are roughly 15% in countries with opt-in systems and above 90% in countries with opt-out systems. Same choice, different default, radically different outcomes. Retirement savings follow the same pattern: automatic enrollment dramatically increases participation.

Framing. The same information presented differently produces different decisions. "90% survival rate" feels safer than "10% mortality rate." Framing is unavoidable—you have to present information somehow—and it shapes choice.

Social proof. "Most people in your neighborhood conserve energy." This peer comparison nudges conservation. We're influenced by what others do, and making that visible changes behavior.

Salience. What's prominent gets attention. Putting healthy food at eye level increases healthy choices. Sending a text reminder before a doctor's appointment reduces no-shows. Making information salient changes behavior.

Friction. Adding small obstacles reduces behavior; removing them increases it. Requiring an extra click to unsubscribe reduces unsubscribes. Pre-filling forms increases completion. Friction is a powerful, often invisible nudge.

Feedback. Real-time information about behavior changes behavior. Energy monitors showing current usage reduce consumption. Speed display signs reduce speeding. Feedback closes the loop between action and consequence.

None of these forbid anything. All of them shift behavior significantly.
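The arithmetic behind the default effect can be sketched in a few lines. This is a toy model, not something from Thaler and Sunstein: it assumes a fixed share of people make an active choice and everyone else keeps whatever is pre-selected. The function name and both parameters (`active_share`, `prefer_enroll_share`) are hypothetical, and the numbers are illustrative rather than empirical.

```python
def participation_rate(default_enrolled: bool,
                       active_share: float,
                       prefer_enroll_share: float) -> float:
    """Share of people who end up enrolled under a given default.

    active_share: fraction who actually make a choice (assumed).
    prefer_enroll_share: fraction of those active choosers who enroll (assumed).
    Everyone who does not choose keeps the default.
    """
    for share in (active_share, prefer_enroll_share):
        if not 0.0 <= share <= 1.0:
            raise ValueError("shares must be between 0 and 1")

    passive_share = 1.0 - active_share
    enrolled_by_choice = active_share * prefer_enroll_share
    # Passive people are enrolled only when enrollment is the default.
    enrolled_by_default = passive_share if default_enrolled else 0.0
    return enrolled_by_choice + enrolled_by_default


if __name__ == "__main__":
    # Same preferences, same options; only the pre-selected option changes.
    opt_in = participation_rate(False, active_share=0.2, prefer_enroll_share=0.6)
    opt_out = participation_rate(True, active_share=0.2, prefer_enroll_share=0.6)
    print(f"opt-in default:  {opt_in:.0%} enrolled")
    print(f"opt-out default: {opt_out:.0%} enrolled")
```

In this sketch the gap between the two systems is exactly the passive share: the more people who never revisit the pre-selected option, the more the default decides the outcome.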

Why Nudges Work

Nudges exploit known features of human psychology:

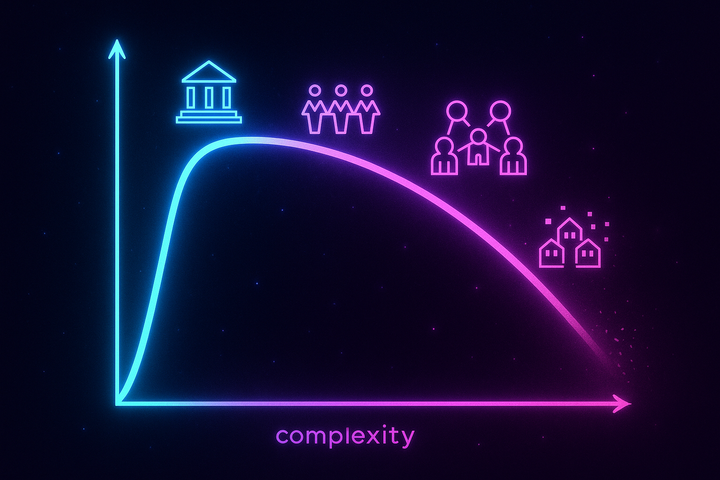

Bounded rationality. We can't process all information. We use shortcuts. Nudges work with shortcuts rather than against them.

Present bias. We overweight immediate rewards and underweight future consequences. Defaults can counteract this by setting up future-beneficial choices now.

Status quo bias. We tend to stick with the current state. Defaults exploit this—people stay with whatever's pre-selected.

Loss aversion. Losses feel larger than equivalent gains. Framing choices as avoiding losses rather than achieving gains increases compliance.

Social influence. We're influenced by what others do. Social proof nudges leverage this.

Attention limits. We can't attend to everything. Salience nudges direct limited attention.

These aren't bugs in human cognition—they're features. They evolved because they're often adaptive. Nudges work by aligning with how minds actually work, rather than how rational actor models assume they work.
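Present bias is often formalized with quasi-hyperbolic ("beta-delta") discounting, a standard model in behavioral economics that this article does not itself specify. The sketch below assumes that model. The parameter values (`beta=0.7`, `delta=0.99`) and the function names are illustrative assumptions. It shows why a choice made in advance can differ from the same choice made in the moment.

```python
def present_value(reward: float, delay: int,
                  beta: float = 0.7, delta: float = 0.99) -> float:
    """Beta-delta value of a reward received `delay` periods from now.

    Immediate rewards are taken at face value; anything in the future is
    scaled by beta (the present-bias penalty) and by delta per period.
    Both defaults are illustrative, not estimates.
    """
    if delay < 0:
        raise ValueError("delay must be non-negative")
    if delay == 0:
        return reward
    return beta * (delta ** delay) * reward


def prefers_larger_later(small: float, large: float,
                         wait: int, lead_time: int) -> bool:
    """True if `large` at lead_time + wait is valued above `small` at lead_time."""
    return present_value(large, lead_time + wait) > present_value(small, lead_time)


if __name__ == "__main__":
    # Deciding in the moment: 100 now versus 110 one period later.
    print("choose in the moment:", prefers_larger_later(100, 110, wait=1, lead_time=0))
    # Deciding in advance: the same pair, but both options are 30 periods away.
    print("choose in advance:   ", prefers_larger_later(100, 110, wait=1, lead_time=30))
```

Under these assumed parameters the patient option wins when both rewards are distant and loses once the smaller reward becomes immediate. That reversal is one way to read why a default set up ahead of time can hold a future-beneficial choice in place.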

The Paternalism Problem

Nudge theory was immediately controversial. Critics saw it as manipulation dressed up as policy.

The libertarian critique: Even if nudges preserve choice, they shape it. If the government or corporations are designing choice architecture to get particular outcomes, that's paternalism—someone else deciding what's good for you.

The manipulation critique: Nudges work by exploiting psychological weaknesses. Even if the outcomes are good, is it ethical to manipulate people toward them? Does "libertarian paternalism" just legitimize manipulation?

The slippery slope: Once you accept that experts should design choice architecture, where does it stop? Who decides what outcomes are desirable? Nudges against smoking seem benign; nudges favoring particular political views seem dystopian. Where's the line?

The transparency problem: If nudges work best when people aren't aware of them, there's a tension with informed consent. Should people be told they're being nudged? But if they're told, the nudge may not work.

Thaler and Sunstein addressed these concerns by emphasizing transparency and easy reversal—nudges should be disclosed, and people should be able to opt out easily. But critics argued this doesn't resolve the fundamental issue: someone is designing the choice environment to produce particular outcomes.

Nudges in the Wild

Whether you endorse them or not, nudges are everywhere:

Grocery stores are choice architecture. Product placement, shelf height, checkout displays—all designed to nudge purchases. The store layout is a persuasion map.

Websites are choice architecture. Default settings, button placement, color choices, the sequence of options—all designed to nudge clicks. Dark patterns are nudges designed to benefit the company at the user's expense.

Insurance enrollment is choice architecture. Default plans, information presentation, comparison tools—all affect what people choose. The ACA marketplaces were designed with nudge principles.

Tax policy is choice architecture. Standard deductions are defaults. Automatic withholding is a default. The complexity of forms is friction.

Social media is choice architecture. Infinite scroll, notification defaults, content ordering—all designed to maximize engagement. You're being nudged toward addiction.

The question isn't whether choice architecture shapes behavior—it does. The question is who's designing it and for whose benefit.

Dark Patterns

Nudge theory exposed an uncomfortable reality: the same principles that can help people can harm them.

"Dark patterns" are choice architectures designed to benefit the designer at the user's expense:

Hidden costs. Reveal fees only at checkout, after commitment.

Forced continuity. Start charging automatically when a free trial ends, with no clear reminder.

Trick questions. Use confusing wording so opt-outs feel like opt-ins.

Confirmshaming. Make the opt-out option embarrassing: "No thanks, I prefer to stay uninformed."

Roach motels. Easy to enter, nearly impossible to leave.

Privacy zuckering. Default to maximum data sharing; bury privacy options in complex settings.

These are nudges—they don't forbid anything, but they powerfully shape behavior. They're ubiquitous online. And they illustrate that nudge principles are tools, not values. The same psychology works for help or harm.

The Arms Race

Understanding nudges creates an arms race.

Designers learn more sophisticated ways to shape choices. A/B testing allows continuous optimization of choice architecture. Machine learning personalizes nudges to individual vulnerabilities.

Users who understand nudges can resist them. Awareness of defaults makes you more likely to examine them. Understanding framing makes you less susceptible to it. Knowledge is a partial defense.

Regulators try to constrain harmful nudges. GDPR's consent requirements attempt to reset defaults around privacy. But regulation lags design, and sophisticated dark patterns evade simple rules.

The sophistication of choice architecture is increasing faster than the sophistication of user defenses. The asymmetry Bernays created—experts manipulating masses—continues in new forms.
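To make the A/B testing mentioned above concrete, here is a minimal sketch of how a designer might compare two versions of a choice screen. It assumes a plain two-proportion z-test using only the standard library. The function name and the sample counts are hypothetical, and real optimization pipelines are typically more elaborate.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of variants A and B.

    Returns (z, two_sided_p_value) using the pooled-variance z-test.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")

    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in outcomes; test is undefined")

    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: variant B pre-selects the option the designer wants.
    z, p = two_proportion_z_test(conv_a=120, n_a=1000, conv_b=150, n_b=1000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

The point of the sketch is how cheap the loop is: change one element of the presentation, count outcomes, keep whichever variant moves behavior further, and repeat.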

Nudge Yourself

One application of nudge theory: design your own choice architecture.

Set defaults that serve you. Remove apps that waste time. Set savings to automatic. Make the healthy choice the easy choice.

Increase friction for bad choices. Delete the credit card from the shopping site. Put junk food on a high shelf. Add steps between impulse and action.

Create commitment devices. Tell people your goals (accountability). Use apps that penalize failure. Create external constraints on future behavior.

Use social proof. Surround yourself with people who model the behaviors you want. The people you see shape what feels normal.

Manage attention. Turn off notifications. Remove red badges. Control what gets salience in your environment.

This is defensive choice architecture—using nudge principles on yourself rather than being nudged by others. It's not complete protection, but it shifts some control back.

The Policy Battlefield

Nudge theory became policy reality. The UK created the "Behavioural Insights Team" (the "Nudge Unit") in 2010. The US assembled the Social and Behavioral Sciences Team in 2014 and formally established it by executive order in 2015. Similar units exist in dozens of countries.

Applications include:

Tax collection. Letters telling people "most of your neighbors have paid" increased compliance significantly. The UK reported hundreds of millions of pounds in additional revenue from simple rewording.

Energy conservation. Social comparison statements on bills reduced consumption. Feedback apps made usage visible, changing behavior.

Health behaviors. Text reminders increased vaccination rates. Default options in cafeterias increased vegetable consumption. Stair prompts increased physical activity.

Financial decisions. Automatic enrollment in retirement plans dramatically increased savings. Simplified disclosures improved mortgage choices.

These successes also generated backlash. Critics called it "nanny state" manipulation. Others questioned whether changing behavior without changing understanding was truly beneficial. The political valence of nudging became contested.

But the genie can't go back in the bottle. Nudge principles are now standard tools in policy design, marketing, UX, and persuasion. Whether you call it "behavioral insights" or "manipulation," choice architecture is being designed everywhere, by everyone, for every purpose.

The Takeaway

Choice architecture shapes decisions. This is unavoidable—every choice is presented somehow, and the presentation influences the choice.

Nudge theory made this explicit and controversial. Defaults, framing, social proof, salience, friction, and feedback: these tools can help people make better decisions or manipulate them into worse ones.

The same principles serve governments trying to increase retirement savings and corporations trying to maximize data extraction. The psychology is neutral; the application can be ethical or exploitative.

You're being nudged constantly—by platforms, stores, governments, and institutions. Awareness doesn't provide immunity, but it provides some defense. And you can apply the same principles to nudge yourself toward the outcomes you actually want.

Further Reading

- Thaler, R. H., & Sunstein, C. R. (2008). Nudge. Yale University Press.
- Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux.
- Brignull, H. "Dark Patterns." darkpatterns.org.

This is Part 4 of the Propaganda and Persuasion Science series. Next: "Inoculation Theory"
