Building Your Mental Immune System

You've now studied the technology of influence: Bernays showed how to engineer desire. Chomsky mapped how institutions filter information. Nudge theory revealed how choice architecture shapes decisions. Inoculation research found that you can vaccinate against manipulation. Misinformation studies documented how lies outcompete truth. Computational propaganda demonstrated influence operations at industrial scale.

The question now: What do you do with this knowledge?

This isn't about becoming cynical—dismissing everything as propaganda leads to a different kind of helplessness. It's about developing sophisticated defenses. The goal is a mental immune system: able to detect threats, respond appropriately, and maintain function in a hostile information environment.

The Defensive Toolkit

Each piece of research we've covered suggests specific defensive practices.

From inoculation theory: Pre-exposure to manipulation techniques builds resistance. You don't need to encounter every specific lie—you need to understand the techniques that make lies persuasive. Once you recognize emotional manipulation, false dilemmas, and misleading statistics, you can identify them in novel contexts.

From misinformation research: The structural advantages of falsehood mean debunking will always lag. Your defense isn't fact-checking everything (impossible) but developing heuristics for when to be suspicious. What triggers your verification reflex matters more than your verification skill.

From computational propaganda: You're competing with software for your attention and beliefs. Social proof is gameable. Apparent consensus may be manufactured. The response isn't paranoia but probabilistic thinking—how likely is it that this apparent groundswell is organic?

From nudge theory: Choice architecture shapes your decisions constantly. The question isn't whether you're being nudged but by whom and toward what. Recognizing the architecture is the first step to choosing despite it.

From manufacturing consent: Media coverage reflects institutional pressures, not just truth-seeking. The same event can be covered or ignored, emphasized or minimized, framed as crime or policy failure. Consuming a single source means accepting a single institution's filters.

From Bernays: Public relations is propaganda by another name. Institutions spend billions annually to shape your perceptions. That investment implies expected return. Ask who benefits from the belief being promoted.
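
The probabilistic thinking suggested under computational propaganda can be made concrete with Bayes' rule. The sketch below is only an illustration: the function name and every number in it are assumptions chosen for the example, not measurements from any study. It estimates how likely an apparent groundswell is to be organic after you notice a suspicious pattern, such as many new accounts posting near-identical phrasing.

```python
def posterior_organic(prior_organic: float,
                      p_pattern_if_organic: float,
                      p_pattern_if_coordinated: float) -> float:
    """Bayes' rule: P(organic | observed pattern).

    Assumes only two explanations (organic or coordinated) and that at
    least one of them gives the pattern a nonzero probability.
    """
    organic = prior_organic * p_pattern_if_organic
    coordinated = (1.0 - prior_organic) * p_pattern_if_coordinated
    return organic / (organic + coordinated)


# Hypothetical inputs: you start out 70% sure the trend is organic, but
# the pattern you noticed is rare in organic trends (5%) and common in
# coordinated ones (60%).
estimate = posterior_organic(0.7, 0.05, 0.6)
print(round(estimate, 2))  # 0.16
```

The exact inputs matter less than the habit: a pattern that is much more likely under coordination than under organic spread should pull your estimate down sharply, even when your starting assumption was generous.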

The Verification Hierarchy

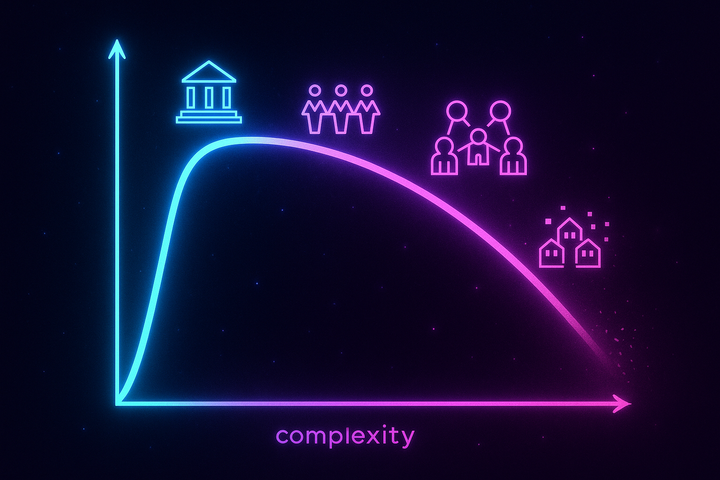

Not everything deserves equal scrutiny. You need a triage system.

Tier 1: Automatic acceptance. Some information you accept without verification. This is necessary because you can't fact-check everything. The key is calibrating what reaches this tier: personal experience, trusted relationships, established scientific consensus, direct observation. Even here you can be wrong, but constant vigilance is unsustainable.

Tier 2: Passive awareness. Most information sits here. You note it without fully accepting or rejecting. If it matters later, you can investigate. Most of what you scroll past should stay here—acknowledged but not integrated into your beliefs.

Tier 3: Active verification. Some claims warrant investigation before acceptance. These include: claims that would significantly change your beliefs if true, claims that trigger strong emotional reactions, claims from unknown sources, claims that benefit identifiable parties, claims that spread rapidly.

Tier 4: Default skepticism. Some categories of claim require positive evidence before any credence. These include: conspiracy theories requiring many actors to stay silent, claims about enemies that perfectly match existing narratives, information from known propaganda sources, viral content from anonymous accounts.

The skill isn't being skeptical of everything; that's as dysfunctional as credulity. The skill is calibrating which tier each piece of information belongs in.
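
The four tiers can be read as a small decision procedure. Here is a minimal sketch in Python, assuming each warning sign is reduced to a yes/no judgment you make yourself. The field names and the order of the checks (strongest red flags first, grounds for acceptance last) are assumptions made for illustration, not a rule stated above.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    AUTOMATIC_ACCEPTANCE = 1
    PASSIVE_AWARENESS = 2
    ACTIVE_VERIFICATION = 3
    DEFAULT_SKEPTICISM = 4


@dataclass
class Claim:
    # Hypothetical yes/no judgments about a claim; all default to False.
    # Grounds for Tier 1
    direct_experience: bool = False
    trusted_relationship: bool = False
    scientific_consensus: bool = False
    # Triggers for Tier 3
    would_change_beliefs: bool = False
    strong_emotional_reaction: bool = False
    unknown_source: bool = False
    benefits_identifiable_party: bool = False
    spreading_rapidly: bool = False
    # Triggers for Tier 4
    needs_many_silent_actors: bool = False
    matches_enemy_narrative: bool = False
    known_propaganda_source: bool = False
    viral_from_anonymous_account: bool = False


def triage(claim: Claim) -> Tier:
    """Assign a claim to a tier, checking the strongest red flags first."""
    if any([claim.needs_many_silent_actors, claim.matches_enemy_narrative,
            claim.known_propaganda_source, claim.viral_from_anonymous_account]):
        return Tier.DEFAULT_SKEPTICISM
    if any([claim.would_change_beliefs, claim.strong_emotional_reaction,
            claim.unknown_source, claim.benefits_identifiable_party,
            claim.spreading_rapidly]):
        return Tier.ACTIVE_VERIFICATION
    if any([claim.direct_experience, claim.trusted_relationship,
            claim.scientific_consensus]):
        return Tier.AUTOMATIC_ACCEPTANCE
    # Nothing notable either way: note it and move on.
    return Tier.PASSIVE_AWARENESS
```

Under this ordering, a claim backed by consensus that also provokes a strong emotional reaction lands in Tier 3 rather than Tier 1. That is one defensible choice among several; the point is that the ordering is explicit and can be adjusted.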

Source Triangulation

Single-source information is structurally vulnerable.

Triangulation means seeking multiple independent sources. "Independent" is key—multiple outlets running the same wire story isn't triangulation. Multiple outlets with different institutional pressures and incentive structures reaching similar conclusions provides genuine corroboration.

This applies to:

News consumption. Reading outlets across political perspectives doesn't mean all perspectives are equally valid. It means each outlet has different blind spots and emphases. What multiple perspectives agree on is more likely accurate than what only one perspective claims.

Expert claims. A single expert can be wrong, biased, or captured. Scientific consensus—the convergence of many independent researchers—provides more reliable grounding. But distinguishing genuine consensus from manufactured consensus requires understanding who's doing the research and who's funding it.

Social proof. Apparent agreement across many accounts might reflect genuine opinion or coordinated operation. Triangulate by checking: Are these accounts independent? Do they have authentic histories? Are they human? Social proof from verified independent sources means something. Social proof from potentially coordinated accounts means nothing.

Historical claims. Events from before your memory are accessible only through records. Multiple independent records corroborating each other provide more reliability than any single account, however authoritative it seems.
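
The difference between counting outlets and counting independent sources can be sketched in a few lines. This is an illustration under a simplifying assumption: that you can label each report with the origin of its reporting (a wire service, an outlet's own newsroom, a primary document). The `Report` type, the outlet names, and the threshold of two origins are all hypothetical.

```python
from typing import Iterable, NamedTuple


class Report(NamedTuple):
    outlet: str  # who published it
    origin: str  # where the reporting actually came from


def independent_origins(reports: Iterable[Report]) -> set[str]:
    """Collapse reports that share an origin into a single source."""
    return {r.origin for r in reports}


def is_triangulated(reports: Iterable[Report], minimum_origins: int = 2) -> bool:
    """True only if the claim rests on enough distinct origins."""
    return len(independent_origins(reports)) >= minimum_origins


# Three outlets running the same wire story: one origin, not three.
wire_copies = [
    Report("Outlet A", "Wire X"),
    Report("Outlet B", "Wire X"),
    Report("Outlet C", "Wire X"),
]
# Two outlets, one of which did its own reporting.
mixed = [
    Report("Outlet A", "Wire X"),
    Report("Outlet D", "Outlet D newsroom"),
]

print(is_triangulated(wire_copies))  # False
print(is_triangulated(mixed))        # True
```

The same collapsing step applies to social proof: many accounts repeating one coordinated message count as one origin, not many.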

The Emotional Audit

Your emotions are attack surfaces.

Propaganda works through emotion. Outrage, fear, disgust, hope—these bypass critical thinking. They're designed to. You feel before you think. The manipulation happens in that gap.

The emotional audit: When you feel a strong reaction to information, pause. Ask:

- What emotion am I feeling?
- Is this emotion proportionate to the evidence provided?
- Who benefits from me feeling this emotion?
- Would I share this if I weren't feeling this emotion?

This doesn't mean emotions are always manipulated. Sometimes outrage is appropriate. Sometimes fear is warranted. But emotional reactions should trigger scrutiny, not action. The pause between feeling and acting is where defense happens.

The most sophisticated propaganda doesn't feel like propaganda. It feels like righteousness. It aligns with your values and gives you an enemy to hate. The emotional resonance is the manipulation.

Epistemic Humility

Feeling certain is not evidence of being right; confidence and accuracy often come apart.

The most dangerous epistemic state is certainty about things you can't verify. Certainty feels good—it resolves ambiguity, enables action, provides identity. But certainty about unverifiable claims is indistinguishable from successful propaganda.

Epistemic humility means:

- Distinguishing between what you know directly and what you've been told
- Acknowledging the limits of your verification ability
- Holding beliefs provisionally, subject to revision
- Recognizing that your information sources have filters you can't fully see

This isn't relativism. Some things are true and some are false. The issue is your access to truth—which is always mediated, always filtered, always incomplete.

The appropriate confidence level for most beliefs about complex topics is "probably, based on my current information"—not "definitely, and anyone who disagrees is deluded."

The Filter Audit

Examine the systems that shape what you see.

Your information environment is constructed. Algorithms select what appears in your feeds. Editorial decisions determine what news outlets cover. Social circles determine what conversations you encounter. Your own choices determine which sources you consult.

Periodically audit your filters:

- What sources do I consult regularly? What are their institutional pressures?
- What perspectives are absent from my regular information diet?
- What algorithms curate my feeds? What do they optimize for?
- When was the last time I encountered information that seriously challenged my views?
- What would I need to see to change my mind about my strongest beliefs?

The goal isn't consuming all perspectives equally—some are more reliable than others. The goal is understanding what your current filtering system lets through and what it blocks.

The Defense Budget

Verification has costs. Allocate resources wisely.

Time spent verifying one claim is time not spent on other things. Mental energy is finite. The goal isn't maximum verification but optimal verification—focusing scrutiny where it matters most.

High-stakes claims warrant more verification effort. Claims that would change your vote, your health decisions, your relationships, your major purchases—these deserve thorough investigation.

Low-stakes claims can often be left unverified. Whether a historical anecdote is precisely accurate usually doesn't matter. Save your verification energy for what affects your actual decisions.

Reversible decisions can tolerate lower verification standards than irreversible ones. If you can easily correct a mistake, less certainty is required before acting.

Time-sensitive decisions sometimes require acting with incomplete verification. In these cases, acknowledge the uncertainty rather than manufacturing false confidence.
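
These rules of thumb combine into a simple allocation rule. The sketch below is one possible reading, not a formula from the research covered in this series: the function name, the three effort labels, and the way time pressure is handled are all assumptions made for illustration.

```python
def verification_plan(high_stakes: bool, reversible: bool,
                      time_sensitive: bool) -> dict:
    """Suggest how much verification effort a decision deserves."""
    if not high_stakes:
        effort = "minimal"    # low stakes: save your energy
    elif reversible:
        effort = "moderate"   # mistakes can be corrected later
    else:
        effort = "thorough"   # high stakes and hard to undo
    # Time pressure does not lower the stakes. It only means you may have
    # to act before verification is complete, so flag the uncertainty.
    acknowledge_uncertainty = time_sensitive and effort != "minimal"
    return {"effort": effort, "acknowledge_uncertainty": acknowledge_uncertainty}


print(verification_plan(high_stakes=True, reversible=False, time_sensitive=True))
# {'effort': 'thorough', 'acknowledge_uncertainty': True}
```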

The defense budget isn't just about preventing false beliefs. It's about maintaining function in an imperfect information environment.

Building Community Defense

Individual defense is necessary but insufficient.

Propaganda targets individuals, but its effects are social. When your neighbors believe falsehoods, it affects you even if you don't. When your information environment fills with garbage, navigating it costs you even when you succeed. You live in a shared epistemic environment—its health affects everyone.

Community defense means:

- Sharing verification skills with people you trust
- Creating social norms that reward accuracy over engagement
- Supporting institutions that produce reliable information
- Calling out manipulation when you see it, but constructively, not punitively
- Building trust networks that can resist coordinated manipulation

The social dimension matters because manipulation exploits social dynamics. Coordinated operations target communities, not just individuals. They aim to shift what appears to be consensus, what seems acceptable to believe, what's worth investigating. Individual resistance can't fully counter attacks on the social fabric.

Think of your immediate social network as your first line of community defense. If you can help even a handful of people develop better verification habits, you've improved the information environment you share. If those people help others, the improvement compounds.

You're not just protecting yourself. You're contributing to an information environment where truth has better odds.

The Long Game

The manipulation you face today is primitive compared to what's coming.

AI-generated content will become indistinguishable from human content. Deepfakes will become trivial to produce. Personalized manipulation will target your specific psychological vulnerabilities. The arms race between manipulation and defense will continue escalating.

This means your defense can't depend on specific detection techniques. "Look for these artifacts in deepfakes" will become obsolete when artifacts disappear. The defense has to be structural—habits of verification, networks of trust, calibrated skepticism.

The skills you're developing now—triangulation, emotional auditing, source evaluation, epistemic humility—will remain valuable even as specific threats evolve. They're not about detecting any particular manipulation. They're about maintaining reliable belief-formation in adversarial conditions.

The fundamental problem won't change: actors with interests in your beliefs will try to shape them. The techniques will evolve, but the dynamic is eternal. Any skill that helps you resist manipulation in general—rather than just specific current manipulations—is future-proof.

This is why understanding propaganda theory matters more than memorizing specific propaganda campaigns. The campaigns change; the principles persist. Knowing that emotional triggers bypass critical thinking protects you regardless of which emotions are being triggered. Understanding how social proof can be manufactured protects you regardless of what specific consensus is being faked.

The Takeaway

You've now studied how propaganda works—from Bernays's engineering of desire to computational operations at industrial scale. The common thread: your beliefs are valuable, and sophisticated actors invest heavily in shaping them.

The response isn't cynicism or paranoia. It's developing systematic defenses: triangulating sources, auditing emotions, calibrating confidence, examining filters, allocating verification resources wisely, and building community resilience.

You can't opt out of the information war. You can only choose to fight it consciously.

Your mental immune system won't be perfect. You'll still be fooled sometimes. But the goal isn't perfect defense—it's raising the cost of manipulation high enough that truth has a fighting chance.

The alternative is having your beliefs selected for you by whoever invests most in shaping them. That's not autonomy. It's just propaganda you didn't notice.

Further Reading

- Levitin, D. J. (2016). A Field Guide to Lies: Critical Thinking in the Information Age. Dutton.
- Lewandowsky, S., et al. (2012). "Misinformation and Its Correction." Psychological Science in the Public Interest.
- Sagan, C. (1996). The Demon-Haunted World: Science as a Candle in the Dark. Random House.

This concludes the Propaganda and Persuasion Science series.
