You're Being Engineered: Here's How to Notice

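In 2014, Cambridge Analytica obtained data on as many as 87 million Facebook users. The data was harvested without their consent through a personality-quiz app, and the quiz-takers also exposed their friends' profiles. Using psychological profiling based on personality traits, the firm crafted targeted political advertising designed to manipulate voting behavior, including in the 2016 U.S. presidential election.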

The scandal broke in 2018. Outrage followed. Cambridge Analytica shut down within months, and in 2019 Facebook agreed to pay a $5 billion penalty to the U.S. Federal Trade Commission.

But here's what got lost in the scandal: Cambridge Analytica's techniques weren't unusual. They were just more brazen about using them for politics. The same fundamental approach—psychological profiling plus targeted messaging—is the standard operating model for digital advertising. Every major platform does it. Every advertiser uses it.

You're not being manipulated by rogue operators. You're being manipulated by the system working as designed.

The Engineering Model

Modern influence operates on a simple model:

1. Data collection. Everything you do online generates data. Every click, every scroll, every pause, every purchase, every search—all recorded, analyzed, and stored. The platforms know your interests, your fears, your desires, your vulnerabilities.

2. Psychological profiling. The data is used to build models of who you are. Not just demographics—psychology. Are you open to experience? Conscientious? Neurotic? These traits predict how you'll respond to different messages.

3. Targeted messaging. Based on your profile, you receive messages crafted to influence specifically you. Not mass communication—personalized persuasion. The message I see is different from the message you see, optimized for our respective vulnerabilities.

4. Real-time optimization. Machine learning continuously improves targeting. If a message doesn't work, try another. What gets engagement gets amplified. The system learns what moves you.
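The real-time optimization in step 4 is commonly modeled as a multi-armed bandit problem. The system shows a variant, measures engagement, and shifts future impressions toward whatever performs best. Below is a minimal sketch that assumes an epsilon-greedy policy, which is one simple textbook strategy. Actual platform systems are proprietary and far more elaborate. The variant names and click rates are hypothetical and exist only to drive the simulation.

```python
import random


def epsilon_greedy(variants, true_click_rates, rounds=20_000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy loop choosing which message variant to show.

    true_click_rates holds each variant's hidden click probability (hypothetical
    numbers). They are used only to simulate user responses; the choice logic
    sees nothing but the clicks it has observed.
    """
    rng = random.Random(seed)
    shows = {v: 0 for v in variants}
    clicks = {v: 0 for v in variants}

    def observed_rate(v):
        # Engagement measured so far; 0.0 for a variant never shown.
        return clicks[v] / shows[v] if shows[v] else 0.0

    for _ in range(rounds):
        if rng.random() < epsilon:
            choice = rng.choice(variants)  # explore: try a random variant
        else:
            choice = max(variants, key=observed_rate)  # exploit: amplify the current best
        shows[choice] += 1
        if rng.random() < true_click_rates[choice]:  # simulate the user's response
            clicks[choice] += 1
    return shows, clicks


# Hypothetical message framings and invented click probabilities.
variants = ["fear_frame", "hope_frame", "social_proof_frame"]
rates = {"fear_frame": 0.10, "hope_frame": 0.02, "social_proof_frame": 0.04}

shows, clicks = epsilon_greedy(variants, rates)
for v in variants:
    print(f"{v:20s} shown {shows[v]:6d} times, clicked {clicks[v]:5d} times")
```

With these made-up rates, the highest-engagement variant ends up receiving most impressions, even though nothing in the loop knows what the message says. The `epsilon` parameter controls how often the system keeps testing alternatives instead of amplifying the current winner.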

This isn't secret. It's the business model that funds the free internet. But the scale and sophistication are hard to grasp until you see it laid out.

The model has evolved beyond simple advertising. It's now applied to:

Political campaigns. Voter targeting uses the same psychological profiling. Different messages for different personality types. Micro-targeting of persuadable voters. Suppression of an opponent's voters through discouragement messaging.

Disinformation operations. Foreign and domestic actors use targeted influence to shape political beliefs. The Russian Internet Research Agency didn't invent the techniques—they just applied commercial targeting to political warfare.

Radicalization pipelines. Extremist content is targeted to vulnerable individuals. The algorithm that serves you cooking videos also serves someone else increasingly radical political content—because engagement is engagement.

Social engineering attacks. Hackers use profiling to craft convincing phishing messages. The personal details that make advertising effective also make fraud effective.

The infrastructure built for selling products is equally useful for selling ideologies, spreading disinformation, or manipulating elections. The commercial system and the manipulation system are the same system.

The Asymmetry

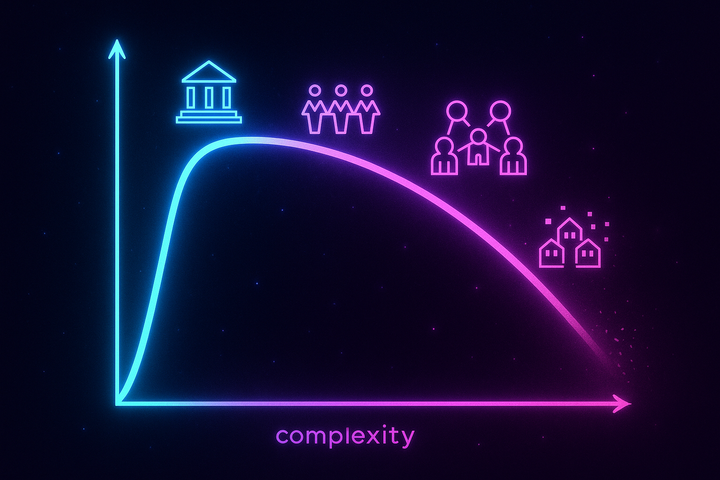

The relationship between you and those trying to influence you is fundamentally asymmetric.

They know about you; you don't know about them. Platforms and advertisers have detailed profiles of your psychology, preferences, and behavior. You have no equivalent insight into their operations.

They have time and resources; you don't. Teams of psychologists, data scientists, and creative professionals work full-time to craft influence. You're just trying to get through your day.

They optimize continuously; you don't adapt. Machine learning means influence operations get better over time. Your defenses remain static unless you actively cultivate them.

They coordinate; you don't. Influence campaigns are orchestrated across platforms and touchpoints. Your attention is fragmented.

This asymmetry means that passive defense—just being "smart" or "skeptical"—isn't enough. The sophistication of modern influence exceeds the capacity of unaided intuition to resist it.

What They Know

The depth of profiling is staggering:

Demographic. Age, gender, location, income, education—the basics. These alone predict a lot.

Behavioral. What you buy, where you go, what you search, what you click. Behavior reveals preferences better than surveys.

Social. Who your friends are, what communities you belong to, what content your network shares. Social context shapes influence susceptibility.

Psychological. Your personality traits, your emotional states, your values. These are inferred from behavior. In a well-known 2015 study, Wu Youyou, Michal Kosinski, and David Stillwell found that computer models trained on people's Facebook likes judged their personality traits more accurately than their friends did.

Situational. When you're tired, when you're stressed, when you're vulnerable. Influence is more effective at certain moments. Platforms know your patterns well enough to target those moments.

All of this is combined into targeting algorithms that select which messages reach you. You never see most of the content aimed at your demographic—you see the content aimed at you specifically.

The Techniques

The basic influence techniques are well-documented. Cialdini's Influence cataloged six principles that have stood the test of time:

Reciprocity. When someone gives you something, you feel obligated to give back. Free samples, free content, free services—they create a sense of debt that marketers exploit.

Commitment and consistency. Once you've taken a small action, you're more likely to take larger, consistent ones. Get someone to sign a petition, and they become more likely to donate later. Start with a small shift in belief, and larger shifts follow more easily.

Social proof. We do what others do. Testimonials, view counts, "trending" labels—these all signal that others have approved, which shapes our approval.

Authority. We defer to expertise. Credentials, endorsements, expert appearances—these bypass critical evaluation by triggering authority deference.

Liking. We're influenced more by people we like. Attractiveness, similarity, compliments, association with positive things—all increase influence.

Scarcity. Things seem more valuable when they're scarce. Limited time offers, exclusive access, artificial constraints—these trigger fear of missing out.

These principles aren't new. What's new is the precision with which they can be applied. Targeted messaging means the right principle can be applied to the right person at the right moment.

But the toolkit goes beyond Cialdini. Modern influence research has added:

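Priming. Exposure to stimuli can influence subsequent behavior without conscious awareness. Early studies reported, for example, that showing people images of money made them more selfish in later tasks. That finding and many other priming effects have since failed to replicate. The claimed mechanism is that priming acts before conscious evaluation begins.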

Framing. The same information presented differently produces different judgments. "90% survival rate" and "10% death rate" convey the same information but produce different decisions. Framing is omnipresent in political communication.

Defaults. People tend to stick with default options. Countries with opt-out organ donation have far higher rates of registered consent than countries with opt-in systems: same choice, different default, different behavior.

Loss aversion. Losses loom larger than gains. Framing messages in terms of what you might lose is more motivating than what you might gain.
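Loss aversion has a standard formal model in Kahneman and Tversky's prospect theory, which the text above doesn't name. Its value function is steeper for losses than for gains. Below is one widely cited parameterization, from Tversky and Kahneman's 1992 estimates. Treat the numbers as population-level averages, not constants that hold for every person.

```latex
v(x) =
\begin{cases}
  x^{\alpha} & \text{if } x \ge 0 \\
  -\lambda \, (-x)^{\beta} & \text{if } x < 0
\end{cases}
\qquad \alpha = \beta \approx 0.88, \quad \lambda \approx 2.25

% Worked example: a $100 gain versus a $100 loss
v(100) = 100^{0.88} \approx 57.5
v(-100) = -2.25 \cdot 100^{0.88} \approx -129.5
```

With these parameters, losing $100 carries about 2.25 times the psychological weight of gaining $100. This is why a "don't lose what you have" frame can outmotivate an equivalent "gain something new" frame.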

Anchoring. The first number presented influences subsequent judgments. High initial prices make subsequent prices seem reasonable. Anchors work even when they're obviously arbitrary.

These techniques are deployed constantly in commercial and political communication. Individually, each produces small effects. Combined and targeted, they're powerful.

The Algorithmic Amplification

Platforms don't just enable targeted messaging—they algorithmically amplify certain content over others. The amplification criteria are engagement metrics: clicks, shares, time spent, comments.

What gets engagement?

Emotional content. Especially outrage, fear, and anxiety. Strong emotions drive action. The algorithm learns that emotional content gets engagement and serves more of it.

Identity-confirming content. Content that affirms your existing beliefs gets engagement. The algorithm serves you more of what you already agree with, creating filter bubbles.

Conflict content. Arguments, drama, controversy—these get attention. The algorithm promotes conflict because conflict is engaging.

Simple content. Nuance is boring. Simple, strong claims get more engagement than complicated, accurate ones.

The algorithm doesn't have political goals or ideological preferences. It just optimizes for engagement. But engagement-optimized content tends to be emotional, polarizing, simple, and identity-affirming—regardless of whether it's true or beneficial.
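A toy engagement-only ranker makes the point concrete. In this sketch the posts, the predicted engagement numbers, and the signal weights are all hypothetical. Real feed-ranking systems combine many more signals and are not public. What the sketch shows is structural: the `accurate` field exists in the data, but the scoring function never reads it.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_clicks: float    # hypothetical model outputs, invented for illustration
    predicted_shares: float
    predicted_comments: float
    accurate: bool             # known to us, ignored by the ranker


# Hypothetical weights: how much each engagement signal counts toward the score.
WEIGHTS = {"clicks": 1.0, "shares": 3.0, "comments": 2.0}


def engagement_score(post: Post) -> float:
    """Score a post purely on predicted engagement; accuracy plays no role."""
    return (WEIGHTS["clicks"] * post.predicted_clicks
            + WEIGHTS["shares"] * post.predicted_shares
            + WEIGHTS["comments"] * post.predicted_comments)


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order the feed from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Nuanced explainer on the new budget", 0.04, 0.005, 0.01, True),
    Post("THEY are coming for YOUR savings", 0.09, 0.03, 0.05, False),
    Post("Your side was right all along", 0.07, 0.02, 0.02, True),
]

for p in rank_feed(posts):
    print(f"{engagement_score(p):.3f}  accurate={p.accurate}  {p.title}")
```

Here the outrage headline ranks first and the nuanced explainer ranks last, purely because of the invented engagement predictions. Accuracy would only affect the ranking if someone chose to put it into the objective.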

You're not in an information environment. You're in an engagement-maximization environment. And what maximizes engagement isn't what serves your interests.

Why You Don't Notice

If the engineering is so pervasive, why doesn't it feel that way?

Normalization. You grew up with advertising and influence. The background radiation of persuasion doesn't register because it's always been there.

Personalization as service. Targeting is framed as relevance. "We show you things you're interested in." This makes surveillance feel like a benefit.

Subtlety. Modern influence doesn't feel like manipulation. It feels like your own thoughts. The best persuasion doesn't leave fingerprints.

Confirmation. When you see content that confirms your existing views, it feels like validation, not manipulation. You don't notice the filter bubble because it feels like reality.

Sunk cost. You've built your digital life on these platforms. Leaving would be costly. So you minimize the downsides psychologically.

The very effectiveness of influence operations depends on them not being noticed. Obvious manipulation triggers resistance. Subtle manipulation shapes without triggering.

The First Step: Notice

You can't defend against what you can't see. The first step in resistance is simply noticing the influence attempts.

Notice targeting. When content appears perfectly calibrated to your interests, ask why. The relevance isn't magic—it's surveillance plus algorithmic matching.

Notice emotional manipulation. When content triggers strong emotions—outrage, fear, moral indignation—that's often the point. Strong emotions bypass critical thinking. Ask whether the emotional response is appropriate to the facts.

Notice social proof. When you're told that "everyone" believes something, or that something is "trending," that's social proof being deployed. Ask whether the "everyone" is real or manufactured.

Notice scarcity claims. When you're told to act now or miss out, that's scarcity manipulation. Real urgency doesn't usually need to be emphasized; manufactured urgency does.

Notice who benefits. Every piece of content serves someone's interests. Asking "who benefits if I believe this?" is a useful filter.

This isn't paranoia—it's accurate perception. You're surrounded by influence operations. Noticing them is the beginning of maintaining sovereignty over your own mind.

The Takeaway

You're being engineered. This isn't a conspiracy theory—it's the explicit business model of the attention economy.

Psychological profiling plus targeted messaging plus algorithmic amplification creates an influence environment of unprecedented sophistication. The relationship is asymmetric: they know you better than you know them; they coordinate while you're alone; they optimize while you stay static.

The first defense is noticing. See the targeting. Feel the emotional manipulation. Question the social proof. Recognize the scarcity claims. Ask who benefits.

You can't achieve immunity. But you can achieve partial resistance. And partial resistance might be enough to maintain some authorship over your own beliefs.

The Stakes

Why does this matter? Can't people just ignore advertising?

Political manipulation. When the same techniques used to sell soap are applied to elections, democracy itself is compromised. Voters make choices based on targeted manipulation rather than informed deliberation. The 2016 U.S. presidential election was decided by roughly 78,000 votes across three swing states (Michigan, Pennsylvania, and Wisconsin). That margin is small enough that even modest targeting effects could plausibly have mattered.

Mental health. The emotional manipulation that drives engagement also drives anxiety, depression, and social comparison. The mental health crisis among young people correlates with social media adoption. The very features that make platforms addictive also make them harmful.

Epistemic collapse. When information environments are optimized for engagement rather than truth, shared reality fragments. People live in different information worlds, exposed to different facts, different framings, different realities. Democracy requires enough shared reality to have meaningful debate—and the engineering is destroying that.

Individual autonomy. At the deepest level, influence engineering threatens the idea that you're the author of your own beliefs and desires. If what you want is shaped by systems optimizing for someone else's interests, in what sense are those wants yours?

The stakes aren't just commercial. They're civilizational.

What Comes Next

This series will trace the history and science of influence—from Bernays through Chomsky through modern misinformation research. We'll explore both offense (how manipulation works) and defense (how to resist).

The goal isn't to make you paranoid. It's to make you literate. You can't opt out of the attention economy. But you can understand how it works. And understanding is the prerequisite for resistance.

The influence engineers have a century of research. You deserve to know what they know.

Further Reading

- Cialdini, R. B. (2006). Influence: The Psychology of Persuasion. Harper Business.
- Zuboff, S. (2019). The Age of Surveillance Capitalism. PublicAffairs.
- Wu, T. (2016). The Attention Merchants. Knopf.

This is Part 1 of the Propaganda and Persuasion Science series. Next: "Edward Bernays: The Original Spin Doctor"
