Sensory Feedback: Restoring Touch

Nathan Copeland was eighteen years old when a car accident left him paralyzed from the chest down, unable to move or feel his hands. About a decade later, researchers at the University of Pittsburgh asked him an extraordinary question: would you like to feel again?

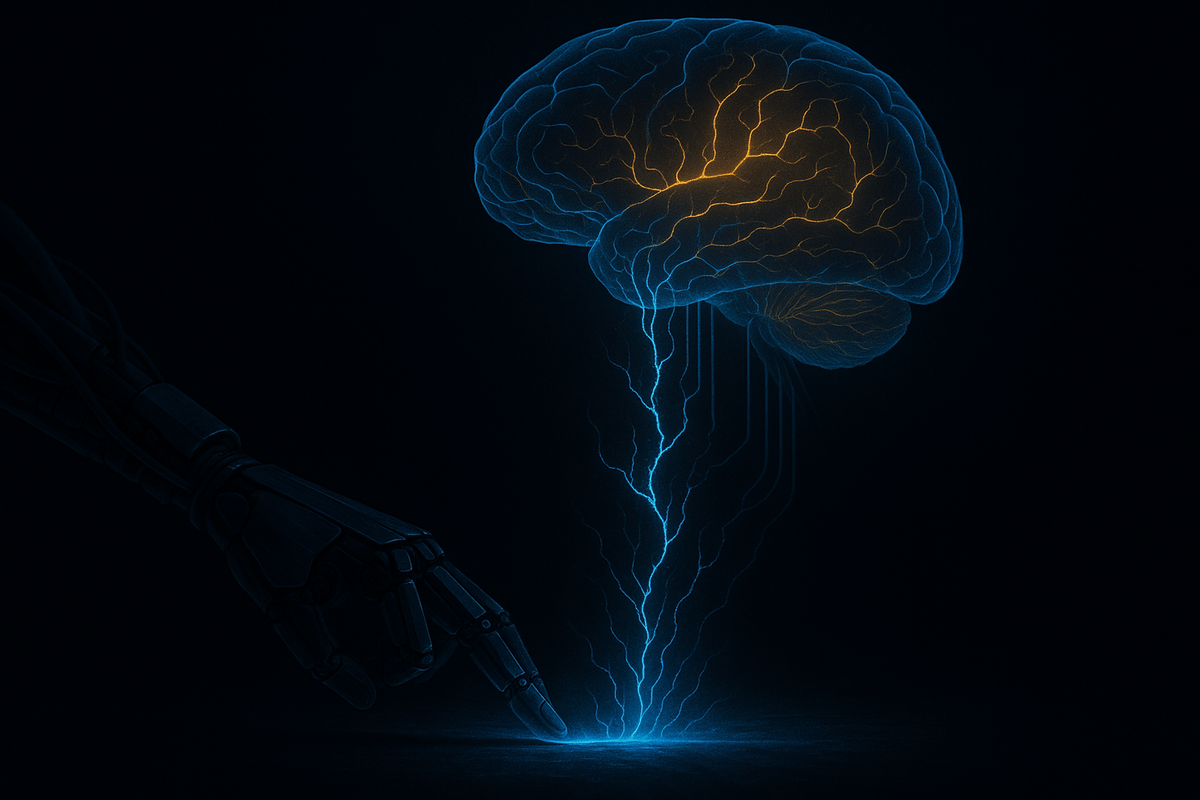

They implanted electrodes in his somatosensory cortex—the region of the brain that processes touch. Then they connected those electrodes to sensors on a robotic hand. When something touched the robot's fingers, the sensors sent signals. The signals were converted to electrical patterns. The patterns were delivered to Nathan's brain.

And he felt it.

"I can feel just about every finger—it's a really weird sensation," Nathan said at the time. "Sometimes it feels electrical, and sometimes it's more of pressure, but for the most part, I can tell which finger is being touched."

This was 2016. One of the first bidirectional brain-computer interfaces in a human: not just reading from the brain, but writing to it.

Motor BCIs let paralyzed people reach out. Sensory BCIs let them feel what they touch.
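The signal chain in Nathan's setup (sensor on the robotic finger, signal, electrical pattern, electrode in the brain) can be sketched as a tiny per-finger encoder. This is a minimal illustration under stated assumptions, not the Pittsburgh team's software: the finger-to-electrode map, the current limits, and the linear force-to-amplitude rule are all hypothetical values chosen for readability.

```python
from dataclasses import dataclass

# HYPOTHETICAL map: robotic-hand finger -> cortical electrode whose stimulation
# the patient reported feeling on that finger. Real maps come from testing.
FINGER_TO_ELECTRODE = {"thumb": 3, "index": 17, "middle": 22, "ring": 41, "little": 56}

# HYPOTHETICAL limits, chosen for illustration only.
MIN_AMP_UA = 20.0   # amplitude (microamps) at zero force
MAX_AMP_UA = 80.0   # amplitude at or above MAX_FORCE_N
MAX_FORCE_N = 10.0  # sensor force at which stimulation saturates


@dataclass
class StimCommand:
    electrode: int
    amplitude_ua: float


def encode_touch(forces_n: dict[str, float], threshold_n: float = 0.2) -> list[StimCommand]:
    """Turn per-finger sensor forces (newtons) into stimulation commands.

    Forces below the contact threshold produce no command. Above it, amplitude
    scales linearly from MIN_AMP_UA (zero force) to MAX_AMP_UA (MAX_FORCE_N)
    and stays at MAX_AMP_UA for larger forces.
    """
    commands = []
    for finger, force in forces_n.items():
        if force < threshold_n or finger not in FINGER_TO_ELECTRODE:
            continue  # no contact, or no electrode mapped to this finger
        frac = min(force, MAX_FORCE_N) / MAX_FORCE_N
        amp = MIN_AMP_UA + frac * (MAX_AMP_UA - MIN_AMP_UA)
        commands.append(StimCommand(FINGER_TO_ELECTRODE[finger], round(amp, 1)))
    return commands


print(encode_touch({"index": 5.0, "thumb": 0.1, "ring": 12.0}))
# [StimCommand(electrode=17, amplitude_ua=50.0), StimCommand(electrode=41, amplitude_ua=80.0)]
```

Here the index sensor at 5 N lands at the midpoint of the assumed current range, the barely touched thumb stays below threshold and triggers nothing, and the ring finger saturates at the maximum. A real system would set limits per electrode for safety and perceptibility; the sketch ignores that.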

The Harder Problem

Reading from the brain is hard. Writing to the brain is harder.

When you record from motor cortex, you're intercepting signals that were going to be sent anyway. The brain is already generating the output. Your job is to decode it.

When you stimulate sensory cortex, you're creating signals that didn't exist. You have to figure out the right patterns—the "neural code" for touch—and deliver them in a way the brain can interpret. You're not translating the brain's language. You're speaking it.

And here's the thing: we don't actually know the neural code for touch. Not precisely. We know roughly which areas process which body parts. We know that different stimulation patterns produce different sensations. But mapping from physical touch to neural pattern is not a solved problem.

Researchers are essentially experimenting. They stimulate electrodes and ask patients: what do you feel? Where do you feel it? Does this feel different from that? Through thousands of trials, they build a vocabulary—a mapping between electrode activation and perceived sensation.

We're teaching the brain a new language for touch. It's working, but we're still learning the grammar.
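The trial-and-report process amounts to building a lookup table from many noisy answers. A minimal sketch, assuming each trial is logged as (electrode, reported location, reported quality); the trial data, the 60% consistency cutoff, and the function name `build_vocabulary` are invented for illustration.

```python
from collections import Counter, defaultdict

# Each trial: (electrode id, where the patient felt it, how it felt).
# INVENTED example data for illustration; "none" means nothing was felt.
trials = [
    (17, "index", "pressure"),
    (17, "index", "pressure"),
    (17, "middle", "tingling"),
    (22, "middle", "pressure"),
    (22, "middle", "pressure"),
    (35, "ring", "tingling"),
    (35, "little", "tingling"),
    (60, "none", "none"),
]


def build_vocabulary(trials, min_consistency=0.6):
    """Summarize repeated reports into an electrode -> percept table.

    An electrode is kept only if its most common reported location is not
    "none" and accounts for at least `min_consistency` of its trials.
    """
    by_electrode = defaultdict(list)
    for electrode, location, quality in trials:
        by_electrode[electrode].append((location, quality))

    vocab = {}
    for electrode, reports in by_electrode.items():
        location, count = Counter(loc for loc, _ in reports).most_common(1)[0]
        consistency = count / len(reports)
        if location == "none" or consistency < min_consistency:
            continue  # unfelt, or too unreliable to use
        # Most common quality among the reports at the winning location.
        quality = Counter(q for loc, q in reports if loc == location).most_common(1)[0][0]
        vocab[electrode] = {
            "location": location,
            "quality": quality,
            "consistency": round(consistency, 2),
        }
    return vocab


print(build_vocabulary(trials))
```

Electrode 17 earns an entry because two of its three reports agree; electrode 35 is dropped because its reports split evenly between two fingers, and electrode 60 produced nothing the patient could feel.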

What Sensory Feedback Feels Like

Nathan Copeland has described the sensations in detail over years of research.

The feelings are localized—he can tell which finger is being stimulated. But they don't feel exactly like normal touch. Sometimes it's pressure. Sometimes it's tingling, almost electrical. Sometimes it's hard to describe, a sensation that doesn't quite map to words because it's genuinely novel.

The brain is doing something remarkable here: it's integrating artificial signals into embodied experience. Nathan feels the touch as if it were on his own hand, not as a stimulus in his brain but as a sensation in the paralyzed hand that his somatosensory cortex still represents, even though his injury has largely cut that hand off from the brain.

This is the same principle that makes phantom limb pain possible. The brain maintains a body map even when input from a body part is cut off, or when the body part is gone entirely. Stimulate the right neurons, and the brain interprets the signal as coming from that silent or absent limb.

Prosthetic touch plugs into this existing architecture. It doesn't create a new sense—it activates an existing one.

Over time, the sensations have become more natural and more useful. Nathan has learned to use the feedback to modulate his grip force when controlling the robotic hand. He can pick up fragile objects without crushing them because he feels how hard he's gripping.

Touch isn't just about sensation. It's about control.

The Closed Loop

Here's why bidirectional interfaces matter: motor control without sensory feedback is terrible.

Try this: close your eyes and touch your nose. Easy, right? Now imagine you couldn't feel your arm at all. You'd have to watch constantly to know where it was. Every movement would require visual attention and conscious correction.

That's what motor BCIs are like without sensory feedback. Patients can control robotic arms, but they have to watch them continuously. They can't feel when they've grasped an object, can't tell how hard they're squeezing, can't sense when something is slipping. The control is open-loop—output without input.

Close the loop—add sensory feedback—and control improves dramatically.

Robert Gaunt's team at Pittsburgh demonstrated this directly. Nathan Copeland performed better on motor tasks with sensory feedback enabled than with it disabled. He was faster, more accurate, and more confident.

This makes intuitive sense. Your natural motor control depends on constant sensory feedback—you're not aware of most of it, but it's there, continuously updating your motor commands. A BCI that provides both directions of information flow comes closer to how the brain naturally operates.

Reading from the brain gives you control. Writing to the brain gives you presence.
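The open-loop versus closed-loop contrast can be made concrete with a toy grasp simulation. In this sketch, assumed purely for illustration, the hand's actual force equals an unknown gain times the commanded force, standing in for everything the controller cannot predict about the object and the contact. Without feedback the controller trusts its first guess; with feedback it senses the force each step and nudges the command in proportion to the remaining error. The function `simulate_grip` and its parameters are hypothetical.

```python
def simulate_grip(target_n, true_gain, feedback, steps=20, k=0.5):
    """Toy grasp: the hand's actual force is true_gain * command.

    The controller starts by assuming a gain of 1.0, so its first command equals
    the target. Open loop (feedback=False): it never checks the result.
    Closed loop (feedback=True): each step it senses the actual force and
    nudges the command by k times the remaining error.
    Returns the final grip force in newtons.
    """
    command = target_n
    for _ in range(steps):
        force = true_gain * command  # what the hand actually applies
        if feedback:
            command += k * (target_n - force)  # correct using the sensed force
    return true_gain * command


# A fragile object needs 4 N, but the hand squeezes 60% harder than expected.
print(simulate_grip(4.0, 1.6, feedback=False))  # 6.4
print(round(simulate_grip(4.0, 1.6, feedback=True), 3))  # 4.0
```

The open-loop grasp ends at 6.4 N, overshooting the fragile object's limit, while the closed-loop grasp settles at 4 N. The correction only converges while `k * true_gain` stays between 0 and 2, a reminder that the feedback itself has to be tuned.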

The Technical Challenges

Let's get specific about why sensory stimulation is so hard.

Spatial resolution. Touch is precise. You can tell the difference between stimulation on adjacent fingertips. But electrodes in the brain can't target individual neurons—they affect populations of cells. Getting fine-grained spatial sensation requires precise electrode placement and sophisticated stimulation patterns.

Temporal patterns. Natural touch isn't static. When you slide your finger across a surface, there's a complex pattern of activation over time. Replicating this requires stimulating in temporal patterns that the brain interprets as movement, texture, temperature. Current systems are primitive in this regard.

Intensity coding. How hard are you pressing? How heavy is the object? Intensity information is coded in the firing rates and patterns of sensory neurons. Matching stimulation to create accurate intensity perception is an ongoing challenge.

Perceptual stability. The sensations need to be consistent. The same stimulation should produce the same feeling every time. But brain states change—arousal, attention, adaptation effects—and what a particular stimulation pattern feels like can vary.

Long-term viability. Stimulating electrodes can degrade faster than recording electrodes. The electrical current affects tissue in ways that are not fully understood. Whether sensory electrodes will remain effective over years is not yet proven.
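Two of these challenges, intensity coding and temporal patterns, can be illustrated with one hypothetical encoder. Following the idea that intensity rides partly on firing rate, it maps force to a pulse rate. For sliding touch it uses the standard relation for scanning a periodic surface, where speed v over spatial period λ produces a temporal frequency f = v / λ, and oscillates the rate at that frequency. The rate range, saturation force, and modulation depth are assumptions, not values from any published system.

```python
import math

# HYPOTHETICAL parameters, chosen for illustration only.
MIN_HZ, MAX_HZ = 20.0, 200.0  # pulse-rate range
MAX_FORCE_N = 10.0            # force at which the rate saturates


def force_to_rate_hz(force_n):
    """Intensity coding: harder press -> faster pulses, clipped to the range."""
    frac = max(0.0, min(force_n, MAX_FORCE_N)) / MAX_FORCE_N
    return MIN_HZ + frac * (MAX_HZ - MIN_HZ)


def texture_frequency_hz(speed_mm_s, period_mm):
    """Sliding over a surface with spatial period λ at speed v gives f = v / λ."""
    return speed_mm_s / period_mm


def pulse_rate_at(t_s, force_n, speed_mm_s=0.0, period_mm=1.0, depth=0.3):
    """Temporal patterning: modulate the force-derived rate at the texture frequency.

    A static press (speed 0) gives a constant rate; sliding adds an oscillation.
    The modulated result is not re-clipped to the rate range.
    """
    base = force_to_rate_hz(force_n)
    f = texture_frequency_hz(speed_mm_s, period_mm)
    return base * (1.0 + depth * math.sin(2 * math.pi * f * t_s))


print(force_to_rate_hz(5.0))             # 110.0
print(texture_frequency_hz(80.0, 2.0))   # 40.0
```

A steady 5 N press gives a constant 110 pulses per second; sliding the same finger at 80 mm/s over a surface with 2 mm ridges makes that rate swing at 40 Hz. Whether a brain would read such a pattern as texture is exactly the open question these challenges describe.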

Researchers are addressing these challenges incrementally. The field is advancing. But bidirectional interfaces remain less developed than motor-only systems.

Beyond Touch

Sensory restoration isn't limited to touch. Vision prosthetics are in development (rudimentary phosphenes, not images yet). Cochlear implants are the success story—over a million recipients, covered in the next article. Proprioception and balance are early-stage targets.

Each sensory modality has its own neural code, its own challenges. But the principle is the same: figure out what patterns of stimulation the brain interprets as meaningful sensation, and deliver them.

The Phantom Limb Connection

Here's something fascinating: sensory restoration work has revealed deep things about how the brain represents the body.

Phantom limbs are the subjective experience of a limb that no longer exists. Amputees often feel their missing hand, sometimes in painful ways. This happens because the somatosensory cortex still contains a representation of that limb—the neurons are still there, still active, still generating sensory experiences.

Sensory BCIs plug into this preserved representation. When you stimulate the area of somatosensory cortex that encoded the index finger, the patient feels sensation in the phantom index finger—even years after the amputation.

This tells us something profound: the body map in the brain is remarkably durable. It persists even without input from the body. And it can be reactivated.

Some researchers are exploring whether sensory stimulation could help with phantom limb pain. If the phantom limb is painful because the brain's representation is confused or hyperactive, could proper sensory input help recalibrate it? Early results are mixed but intriguing.

The brain hasn't forgotten the body. It's waiting for input.

The Patients

Let me introduce you to some of the people pioneering sensory restoration.

Nathan Copeland (Pittsburgh, 2015-present) has the most extensive experience with bidirectional BCIs. Over eight years, he's helped researchers refine the stimulation protocols, describe the sensations in detail, and demonstrate functional improvements. He's a genuine collaborator, not just a research subject.

Dustin Tyler's patients (Case Western Reserve) have experienced touch through peripheral nerve stimulation rather than cortical stimulation. Electrodes wrapped around the nerves in the residual arm deliver sensation when sensors on the prosthetic hand detect touch. These patients report that the prosthetic limb feels more like part of their body—less like a tool, more like an extension of self.

Dennis Aabo Sørensen (Swiss/Italian consortium, 2013) was an early recipient of sensory feedback through peripheral nerve stimulation. He was able to distinguish between different textures and shapes using the sensory signals. "It's incredible," he said. "I can feel things that I haven't felt in a long time."

These experiences are consistent: sensory feedback makes prosthetics feel more real, more usable, more like part of the person.

What's Coming

The field is moving toward fully integrated bidirectional systems.

Higher-resolution stimulation. More electrodes, better positioned, with more sophisticated patterns. The goal is to recreate something closer to natural touch rather than the crude approximations currently possible.

Peripheral nerve approaches. For amputees, stimulating the remaining nerves in the residual limb may be easier than implanting electrodes in the brain. Multiple groups are pursuing this path.

Texture and temperature. Current systems provide basic touch. Future systems might convey texture information (rough vs. smooth), temperature (hot vs. cold), and proprioceptive feedback (where the limb is in space).

Home use. Research systems require lab visits and constant supervision. The goal is to make sensory feedback available in daily life—fully implanted, wireless, and reliable.

Integration with advanced prosthetics. The prosthetic arms themselves are getting better—more dexterous, more natural. Combining advanced prosthetics with advanced sensory feedback could produce systems that approach natural limb function.

The Full Circle

Let's step back and see the complete picture.

Motor BCIs let paralyzed people reach out into the world. Sensory BCIs let them feel what they touch. Together, they restore something approaching embodied action—the seamless loop of intention, movement, sensation, and adjustment that characterizes normal motor function.

This is more than technological achievement. It's the reconstitution of agency.

Nathan Copeland can shake hands—really shake hands, feeling the grip, modulating the pressure, experiencing the contact. It's not the same as having his original body. But it's vastly more than nothing.

Every bidirectional interface patient is a proof of concept for something we're only beginning to understand: the brain can incorporate artificial systems into the body schema. It can treat machines as extensions of self. The boundary between biological and technological is not as sharp as we assumed.

Sensation is what makes action feel real. We're giving that back.

The journey from motor BCI to bidirectional BCI to something approaching full sensory restoration is long. But the direction is clear, and the patients already living with these technologies prove it's possible.

We're teaching machines to touch—and teaching brains to feel them do it.

The Path Forward

Bidirectional BCIs currently cost millions per patient—research grants absorb this, not healthcare systems. For the technology to scale, we need manufacturing scale (mass-produced electrodes), simplified surgery, and eventually insurance coverage. Cochlear implants took decades to become routine. Bidirectional BCIs are probably on a similar timeline.

The technology works. The delivery system doesn't exist yet.

Where does it go from here? Better stimulation patterns. More sensory modalities—proprioception, temperature, texture. Eventually, perhaps, full sensory restoration for any modality.

The path that leads there starts with Nathan Copeland feeling a robotic finger.

Every sense we restore opens a door. Behind each door are implications we can barely imagine.
