Why Smart People Believe: Motivated Reasoning

Sir Arthur Conan Doyle created Sherlock Holmes—the most rational detective in literary history. A man who solved crimes through pure logic, who dismissed sentiment, who famously said "when you have eliminated the impossible, whatever remains, however improbable, must be the truth."

Conan Doyle also believed in fairies.

In 1917, two young cousins in Cottingley, Yorkshire, produced photographs showing themselves with tiny winged creatures. In 1920, Conan Doyle, then 61 and world-famous, examined the photographs and declared them genuine. He wrote articles. He published a book, The Coming of the Fairies (1922). He defended the Cottingley Fairies until his death.

The photographs were obvious fakes: paper cutouts propped up with hatpins. In 1983 the cousins, by then elderly women, admitted the hoax. But the creator of Sherlock Holmes couldn't see what any skeptical observer could see.

Intelligence doesn't protect against motivated cognition. Sometimes it makes things worse.

The Sophistication Trap

Here's what people get wrong about irrational belief: they assume it's a knowledge problem.

"If people just understood how statistics work, they wouldn't believe in astrology."

"If people understood cold reading, they wouldn't go to psychics."

"If people were properly educated, they'd be skeptical."

But Conan Doyle was educated. He was a physician. He understood evidence. He created a character whose entire appeal was rigorous deduction.

The problem wasn't that he couldn't think. The problem was what he wanted to believe.

His son Kingsley, his brother Innes, and many friends and relatives had died during or just after World War I. He was grieving. He desperately wanted evidence that death wasn't final, that consciousness continued, that he might communicate with the lost.

Spiritualism offered that hope. And once you want something to be true badly enough, intelligence becomes a tool for believing it rather than evaluating it.

Motivated Reasoning: The Mechanism

Motivated reasoning is the tendency to arrive at conclusions we want to arrive at, using reasoning that feels rigorous.

It works through several processes:

Selective attention: We notice evidence that supports what we want to believe and overlook evidence that contradicts it. Conan Doyle noticed details in the photographs that seemed authentic; he didn't notice the details that revealed the hoax.

Asymmetric scrutiny: We evaluate desired conclusions with "can I believe this?" (seeking permission) and undesired conclusions with "must I believe this?" (seeking escape). The evidential bar is different.

Sophisticated rationalization: Intelligence provides more ways to explain away inconvenient evidence. "Those aren't paper cutouts—they're etheric materializations that happen to resemble paper." More cognitive resources mean more elaborate defenses.

Source credentialing: We evaluate sources based on whether we like their conclusions. Skeptics who debunked the fairies were dismissed as biased materialists. Supporters were praised as open-minded investigators.

This isn't a failure of reasoning. It's reasoning directed toward a goal other than truth.

The IQ Paradox

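There's a disturbing finding in cognitive science: on politically charged topics, greater science literacy and numeracy don't produce convergence on the evidence. Instead, they predict greater polarization, with the most numerate people on each side of the issue furthest apart (Kahan et al., 2012). For at least one side, more reasoning skill goes with less accurate beliefs.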

Why? Because smart people are better at generating arguments for whatever they already believe. They can find flaws in opposing evidence. They can construct sophisticated justifications. They can dismiss inconvenient data with technical objections.

The physicist who becomes a climate skeptic doesn't lack the intelligence to understand climate science. They have enough intelligence to construct alternative frameworks, to identify real uncertainties and exaggerate their significance, to marshal credentials in a motivated direction.

Intelligence is a tool. Tools serve purposes. If your purpose is truth-seeking, intelligence helps you find truth. If your purpose is defending existing beliefs, intelligence helps you defend them.

Belief Maintenance Machinery

Once a belief is established, multiple mechanisms protect it:

Identity fusion: The belief becomes part of who you are. Abandoning it feels like abandoning yourself. Astrological identity, religious identity, political identity—these aren't just opinions. They're self-concepts.

Social embedding: Your community shares the belief. Changing it means risking relationships, status, belonging. The social cost of updating can exceed the social cost of being wrong.

Sunk costs: You've invested in the belief—time, money, reputation. Admitting error means admitting that investment was wasted.

Worldview coherence: The belief connects to other beliefs. Changing one threatens the whole structure. If astrology is false, what about the other intuitions you trusted? If this psychic was fake, what about the meaningful experience you had?

These mechanisms operate automatically. You don't consciously decide to protect your beliefs. The protection happens below awareness, before explicit reasoning begins.

The Intelligent Believer Profile

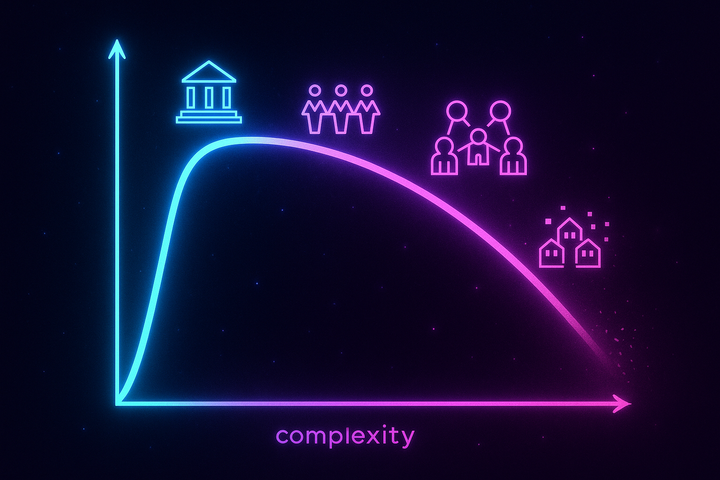

Who believes in astrology, psychics, alternative medicine, conspiracy theories?

The stereotype is the uneducated, the unsophisticated, the rubes. But the data tells a different story.

Astrology belief correlates positively with education in young women. Alternative medicine use is highest among upper-middle-class professionals. Conspiracy thinking appears across all education levels, just with different conspiracy theories.

The intelligent believer often has a specific profile:

- High openness to experience (correlated with both creativity and unusual beliefs)
- Need for meaning (seeking patterns and significance)
- Intuitive cognitive style (trusting gut feelings over systematic analysis)
- Distrust of institutions (mainstream science feels like an establishment to question)
- Experience of genuine anomalies (unusual personal experiences that need explanation)

These aren't deficits. Openness correlates with creativity. Meaning-seeking correlates with purpose. Intuition correlates with social intelligence. Institutional skepticism correlates with independent thinking.

The traits that enable unusual belief also enable unusual achievement.

The Anomaly Problem

Many believers in paranormal phenomena point to personal experiences that feel impossible to explain conventionally.

"I knew my grandmother died before anyone told me."

"I had a dream that came true in exact detail."

"The psychic told me something she couldn't have known."

These experiences are common. Surveys suggest most people have had at least one experience that felt supernatural—a premonition, a coincidence too perfect to be chance, a sense of presence.

The skeptic's dismissal—"you're just remembering hits and forgetting misses"—is technically accurate but emotionally inadequate. The experience felt meaningful. The experience felt real. Saying "it was confirmation bias" doesn't address the phenomenology.

Smart believers often reason from genuine anomalous experiences. They're not credulous idiots. They're people trying to explain something that actually happened to them.

The question is whether the paranormal explanation is the best explanation—and motivated reasoning biases that evaluation.

Why Education Doesn't Inoculate

If you've been educated, you know things. But knowing things doesn't protect against believing other things.

Education teaches content, not process. You learn that the Earth is 4.5 billion years old. You don't necessarily learn how to evaluate claims in general, how to recognize your own biases, how to sit with uncertainty.

Education creates false confidence. The more you know, the more you trust your judgment—including when your judgment is compromised by motivation.

Education is domain-specific. A physicist is an expert in physics. That doesn't make them an expert in evaluating psychic claims, alternative medicine, or historical conspiracies. But the credential suggests general competence.

Education cultivates skills that cut both ways. The academic environment rewards finding patterns, constructing arguments, and defending positions. These skills transfer readily to belief defense.

The educated believer isn't failing to use their education. They're applying it—toward ends other than objective truth assessment.

The Meaning Market

Here's the deeper issue: humans need meaning, and rationality doesn't always provide it.

The scientific worldview offers accuracy but not always significance. It explains how things work, not why they matter. It dissolves teleology, leaving a universe of causes without purposes.

Divination systems offer meaning. Astrology says your life is connected to cosmic patterns. Psychic readings say deceased loved ones are still present. Tarot says your situation has archetypal significance.

Belief in these systems isn't just cognitive error. It's meaning-seeking behavior.

The smart believer often explicitly trades accuracy for meaning. "I know astrology isn't scientifically validated. But it gives me a framework for understanding myself. It feels true even if it isn't literally true."

This is philosophically coherent, even if epistemologically problematic. If you value meaning and the meaning system isn't harming anyone, why should scientific accuracy trump psychological utility?

The Costs

But meaning systems have costs:

Financial exploitation: People pay thousands of dollars to fraudulent psychics. Grief-stricken families empty bank accounts for fake communication with the dead.

Medical harm: People choose alternative medicine over effective treatment. Children die of treatable diseases because parents trusted faith healers.

Social dysfunction: Conspiracy beliefs erode trust in the institutions that coordinate society. The results include vaccine hesitancy, election denial, and climate inaction.

Epistemic degradation: If feelings of truth trump evidence of truth, the whole apparatus of knowledge generation becomes unreliable.

These harms are real. Meaning-seeking doesn't justify them. The challenge is how to meet the meaning need without the associated costs.

The Rationalist's Blind Spot

Hardcore skeptics often miss something: rationality has its own motivated reasoning.

The skeptic who needs paranormal claims to be false will be hypercritical of supporting evidence and undercritical of dismissive explanations. The scientist invested in methodological naturalism may reject anomalies too quickly.

The difference between rationalist motivated reasoning and believer motivated reasoning is mainly in what's being protected. Rationalists protect their identity as rational people. Believers protect their beliefs.

True epistemic humility recognizes the universal vulnerability. Not "I'm rational and they're not" but "we're all navigating the tension between what we want to believe and what the evidence supports."

What Actually Helps

If education doesn't inoculate against motivated belief, what does?

Practice identifying motivated reasoning in yourself. Not in others—that's easy. In yourself, regarding your cherished beliefs. What would change your mind? If nothing would, you're not holding a belief; you're holding an identity.

Cultivate negative capability. The ability to sit with uncertainty without reaching for premature closure. Not knowing is uncomfortable. Learning to tolerate the discomfort reduces the pressure to believe.

Separate meaning from truth. You can find a framework meaningful without claiming it's literally true. Tarot can be useful without being magic. Astrology can be fun without being astronomy.

Build social structures that reward updating. If your community punishes changing your mind, you won't change your mind. If it celebrates updating on evidence, you might.

Address the underlying needs. If someone believes in an afterlife because they're grieving, addressing the grief may be more effective than attacking the belief.

Intelligence doesn't protect against motivated reasoning. But awareness of motivated reasoning—and compassion for the needs that drive it—creates at least the possibility of clearer seeing.

Further Reading

- Kahan, D. M. et al. (2012). "The polarizing impact of science literacy and numeracy on perceived climate change risks." Nature Climate Change.
- Mercier, H. & Sperber, D. (2017). The Enigma of Reason. Harvard University Press.
- Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux.

This is Part 7 of the Divination Systems series. Next: "Secular Divination: Personality Tests and Algorithms."
