Superforecasting: What Makes Good Predictors

In 2011, the Intelligence Advanced Research Projects Activity (IARPA) launched the largest forecasting tournament in history. They recruited thousands of volunteers to predict geopolitical events: Would North Korea conduct another nuclear test? Would the euro crisis escalate? Would Assad fall?

The volunteers competed against each other and, crucially, against professional intelligence analysts with classified information. You'd expect the analysts to win. They had the briefings, the satellite imagery, the human intelligence that ordinary citizens could never access.

The analysts lost. A subset of the volunteers—eventually called "superforecasters"—beat the intelligence community by 30%. They had no security clearance, no special data, no institutional resources. They were just regular people who happened to be exceptionally good at updating beliefs in response to evidence.

Philip Tetlock, the researcher who ran the tournament, spent years studying what made them different. His findings are uncomfortable for anyone who relies on expert opinion.

Hedgehogs and Foxes

Tetlock's interest in forecasting started earlier, with a different study. In 1984, he began collecting predictions from 284 experts—academics, policy analysts, journalists—on political and economic questions. He followed them for two decades.

The results were brutal. Experts barely beat random chance. Dart-throwing chimps would have done almost as well. And the most confident experts—the ones who appeared on TV, who wrote op-eds, who had big theories—performed worst of all.

Tetlock classified experts into two types, borrowing from Isaiah Berlin's essay "The Hedgehog and the Fox":

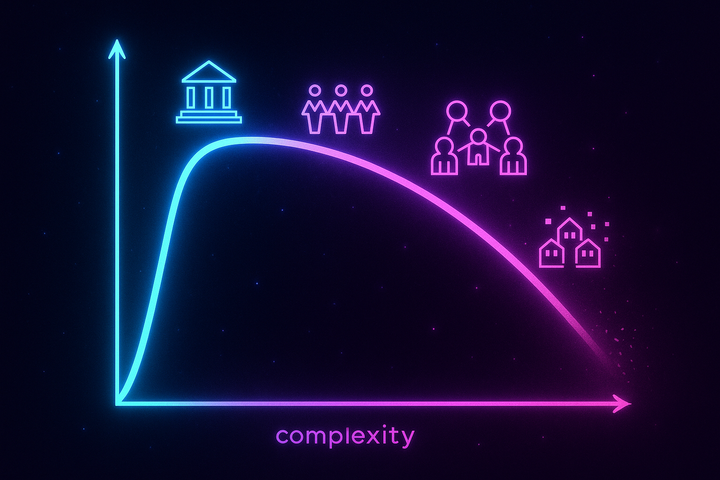

Hedgehogs know one big thing. They have a theory, a framework, a lens through which they see everything. Marxists, free-market fundamentalists, realist international relations scholars—they explain events through their master framework.

Foxes know many things. They're eclectic, drawing on multiple frameworks without committing fully to any. They're comfortable with complexity and contradiction. They're more likely to say "it depends" and less likely to offer confident predictions.

Foxes crushed hedgehogs. The experts with grand theories were worse than those who muddled through with multiple perspectives. Confidence and coherence were inversely related to accuracy. The people who sounded best on TV were the worst at actually predicting what would happen.

This finding was unwelcome. We want experts to have answers. We reward confidence and punish hedging. But reality doesn't care what we want. The world is complex, and people who acknowledge that complexity predict it better than people who force it into simple frameworks.

What Superforecasters Do

The IARPA tournament gave Tetlock a new dataset: not just how experts perform, but how exceptional forecasters think. He identified practices that distinguished superforecasters from the rest:

They Think in Probabilities

Ordinary forecasters speak in vague terms—"likely," "possible," "could happen." These words mean different things to different people. Does "likely" mean 60% or 90%?

Superforecasters think in numbers. They say "65%," then update to "72%" as new information arrives. The precision matters. It forces them to quantify uncertainty instead of hiding behind fuzzy language.

It also creates accountability. A single 70% forecast can't be judged right or wrong on its own. If the event doesn't happen, that is exactly the outcome you said had a 30% chance. Over many predictions, though, your calibration becomes measurable: of all the things you called at 70%, roughly 70% should happen. Precision enables learning.
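Calibration only shows up in aggregate, so checking it means grouping forecasts by stated probability and comparing each group with what actually happened. A minimal sketch in Python, assuming forecasts are probabilities between 0 and 1 and outcomes are booleans; the function name `calibration_table` and the ten equal-width bins are illustrative choices, not a published method:

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=10):
    """Bucket forecasts into equal-width probability bins and return
    (mean forecast, observed hit rate, count) for each non-empty bin."""
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    bins = defaultdict(list)
    for p, happened in zip(forecasts, outcomes):
        # min() keeps p == 1.0 in the top bin instead of creating an extra one
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, happened))
    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_forecast = sum(p for p, _ in pairs) / len(pairs)
        hit_rate = sum(1 for _, happened in pairs if happened) / len(pairs)
        table.append((mean_forecast, hit_rate, len(pairs)))
    return table


# Hypothetical track record: ten calls at 70%, ten at 90%.
forecasts = [0.7] * 10 + [0.9] * 10
outcomes = [True] * 7 + [False] * 3 + [True] * 6 + [False] * 4
for mean_p, hit, n in calibration_table(forecasts, outcomes):
    print(f"said {mean_p:.0%}, happened {hit:.0%} (n={n})")
# said 70%, happened 70% (n=10)
# said 90%, happened 60% (n=10)
```

In this made-up record the 70% calls are well calibrated, while the 90% calls are overconfident: they came true only 60% of the time.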

They Update Frequently

Many forecasters make a prediction and stick with it. They've committed; changing their mind would feel like admitting error.

Superforecasters update constantly. New poll comes out? Update. Policy announcement? Update. Subtle shift in rhetoric? Update. They're always asking: "Given what I now know, should my probability estimate change?"

The key insight is that updating isn't flip-flopping. It's rationality. The world provides information; your beliefs should respond to that information. Refusing to update because you don't want to look uncertain is a bias, not a virtue.
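Superforecasters rarely write out Bayes' rule, but its odds form is a compact way to make "should my estimate change?" concrete: multiply your current odds by how much more likely the new evidence is if the event is coming than if it isn't. A minimal sketch; the function name `update` and the likelihood ratios in the example are hypothetical numbers chosen for illustration:

```python
def update(prior, likelihood_ratio):
    """Bayes' rule in odds form.

    likelihood_ratio = P(evidence | event) / P(evidence | no event).
    A ratio above 1 raises the estimate, below 1 lowers it, and exactly 1
    (uninformative evidence) leaves it unchanged."""
    if not 0 < prior < 1:
        raise ValueError("prior must be strictly between 0 and 1")
    if likelihood_ratio <= 0:
        raise ValueError("likelihood ratio must be positive")
    posterior_odds = prior / (1 - prior) * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


p = 0.10              # hypothetical starting estimate, e.g. a base rate
p = update(p, 2.0)    # news twice as likely if the event is coming
print(f"{p:.1%}")     # 18.2%
p = update(p, 0.5)    # later news half as likely if it is coming
print(f"{p:.1%}")     # 10.0%
```

Evidence that points both ways moves the estimate both ways; nothing about the earlier update has to be defended.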

They Break Problems into Components

Big questions are hard. "Will Russia invade Ukraine?" is an overwhelming question with too many variables.

Superforecasters decompose. What's the probability Russia masses troops on the border? Given troop buildup, what's the probability of an actual invasion? Given invasion, what's the probability of success? Each sub-question is more tractable than the whole.
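The decomposition is ordinary arithmetic on conditional probabilities. A minimal sketch with hypothetical helper names and placeholder numbers (not forecasts). The one extra ingredient the chain needs is an estimate for invasion without a visible buildup, which the law of total probability requires:

```python
def total_probability(p_condition, p_if_condition, p_if_not):
    """Law of total probability over a yes/no intermediate event."""
    return p_condition * p_if_condition + (1 - p_condition) * p_if_not


def chain(*conditionals):
    """Probability that every stage happens, where each argument is
    P(this stage | all earlier stages happened)."""
    p = 1.0
    for c in conditionals:
        if not 0 <= c <= 1:
            raise ValueError("each conditional must be a probability")
        p *= c
    return p


# All numbers below are placeholders, not forecasts.
p_buildup = 0.6
p_invade_given_buildup = 0.4
p_invade_given_no_buildup = 0.02
p_success_given_invasion = 0.5

p_invasion = total_probability(p_buildup, p_invade_given_buildup,
                               p_invade_given_no_buildup)
p_invasion_and_success = chain(p_invasion, p_success_given_invasion)
print(f"{p_invasion:.1%}, {p_invasion_and_success:.1%}")  # 24.8%, 12.4%
```

Each input is a separate, arguable estimate, so disagreements can be traced to the specific sub-question where they arise.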

This is Fermi estimation applied to geopolitics. You might be wildly wrong about each component, but if your errors are uncorrelated, overestimates in some components tend to offset underestimates in others, so the overall estimate is often surprisingly accurate. Breaking problems down also forces you to identify the key uncertainties and think carefully about each.
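A short simulation shows why independence matters. It rests on simplifying assumptions: each component's estimate is off by an independent multiplicative error drawn from a lognormal distribution, and the function name is hypothetical. Because multiplying factors adds their log-errors, independent errors partly cancel, and the product's log-error grows like the square root of the number of components rather than adding up as in the worst case. The product is still less certain than any single component; independence just keeps the damage bounded.

```python
import math
import random


def product_log_error_spread(n_factors, sigma, trials=20_000, seed=0):
    """Simulate a Fermi estimate built from n_factors multiplied together,
    where each factor's estimate is off by an independent multiplicative
    error exp(N(0, sigma)). Returns the standard deviation of the product's
    log-error across trials."""
    rng = random.Random(seed)
    # Multiplying factors means adding their log-errors.
    log_errors = [
        sum(rng.gauss(0.0, sigma) for _ in range(n_factors))
        for _ in range(trials)
    ]
    mean = sum(log_errors) / trials
    return math.sqrt(sum((e - mean) ** 2 for e in log_errors) / trials)


# Each of four factors is typically off by about 2x (one standard deviation).
spread = product_log_error_spread(4, math.log(2))
print(f"typical error of the product: about {math.exp(spread):.1f}x")
# Close to 4x (sqrt(4) * ln 2 in log space), far short of the 16x you would
# get if all four errors pushed in the same direction.
```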

They Seek Out Disconfirming Evidence

Confirmation bias is the tendency to seek evidence that supports what you already believe. It's one of the most robust findings in cognitive psychology—and superforecasters actively fight it.

They read sources that disagree with their initial estimate. They steelman the opposing view. They ask: "What would have to be true for me to be wrong?" They treat their current belief as a hypothesis to be tested, not a position to be defended.

This is cognitively unpleasant. It's easier to surround yourself with agreement. But superforecasters optimize for accuracy, not comfort.

The technical term is "active open-mindedness." It's not just being willing to consider other views when they're presented. It's actively seeking them out—hunting for evidence that might prove you wrong. Most people don't do this. Superforecasters make it a habit.

They're Humble But Not Diffident

Superforecasters don't claim certainty they don't have. They freely admit uncertainty. They know their estimates are fallible.

But they're not paralyzed by uncertainty. They still make predictions. They still take positions. They just hold those positions lightly, ready to update when evidence warrants.

This is a specific cognitive style: confident enough to act, humble enough to revise. It's rarer than it sounds. Most people are either overconfident (won't update) or underconfident (won't commit). Superforecasters thread the needle.

The Good Judgment Project

Tetlock's tournament produced actionable findings. He organized the best performers into teams, and those teams outperformed individual superforecasters.

Why? The teams combined diverse perspectives (error cancellation) with structured deliberation (information sharing). Team members challenged each other's reasoning without social pressure to conform. They updated in response to teammates' arguments.
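One way to turn "diverse perspectives cancel errors" into arithmetic is to pool the members' probabilities. A minimal sketch, assuming pooling by averaging log-odds. The optional extremizing step reflects an idea studied in the forecast-aggregation literature: an average of partly independent forecasts tends to sit too close to 50%, so pushing it outward can improve accuracy. The function names and the exponent of 2 are illustrative, not the Good Judgment Project's tuned method.

```python
import math


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1 - p))


def aggregate(probs, extremize=1.0):
    """Pool team forecasts by averaging log-odds. An extremize value above 1
    pushes the pooled forecast away from 50%; 1.0 leaves the plain average."""
    if not probs:
        raise ValueError("need at least one forecast")
    if any(not 0 < p < 1 for p in probs):
        raise ValueError("forecasts must be strictly between 0 and 1")
    mean_log_odds = sum(logit(p) for p in probs) / len(probs)
    return 1 / (1 + math.exp(-extremize * mean_log_odds))


team = [0.6, 0.7, 0.8]                           # hypothetical members' estimates
print(f"{aggregate(team):.3f}")                  # 0.707
print(f"{aggregate(team, extremize=2.0):.3f}")   # 0.853
```

Averaging in log-odds rather than raw probabilities treats a move from 90% to 99% as the large change it is, which a plain arithmetic mean would understate.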

The result was collective intelligence in action. The team was smarter than any individual member.

But team structure mattered. When teams converged too quickly, they lost diversity. When members deferred to perceived experts, independence collapsed. The best teams maintained productive disagreement while still reaching consensus.
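The cost of converging too quickly has a standard statistical form. Assume, as a simplification, that each of n team members' forecast errors e_i has variance σ² and that every pair of errors has correlation ρ. The variance of the team's averaged error is then:

```latex
\operatorname{Var}\!\left(\frac{1}{n}\sum_{i=1}^{n} e_i\right)
  = \frac{\sigma^2}{n}\bigl(1 + (n-1)\rho\bigr)
  = \sigma^2\left(\rho + \frac{1-\rho}{n}\right)
```

With independent errors (ρ = 0) the variance shrinks as σ²/n, but as ρ approaches 1, adding members buys almost nothing. Five members with ρ = 0.5, for example, leave 0.6σ², versus 0.2σ² if their errors were independent.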

Tetlock identified specific practices that made teams effective: rotating which member led discussion, requiring explicit probability estimates from everyone before deliberation, tracking and celebrating calibration over time. The infrastructure of good forecasting is designable.

What Superforecasting Isn't

Some clarifications:

It's not about intelligence. Superforecasters are smart, but intelligence alone doesn't predict performance. Many smart people are terrible forecasters—they have elaborate theories that don't track reality. Cognitive style matters more than raw horsepower.

It's not about domain expertise. Subject-matter experts don't reliably outperform intelligent generalists. Sometimes expertise helps. Often it creates blind spots. The superforecasters who predicted Middle East events weren't Middle East scholars—they were careful thinkers who did their research.

It's not about prediction markets. Superforecasters can beat markets, at least in low-liquidity markets. The IARPA tournament showed that the best individuals consistently outperformed the tournament's internal prediction market. Aggregation mechanisms help, but they don't replace good individual judgment.

It's not about access to information. Superforecasters had no classified intelligence. They used publicly available information—news articles, academic research, statistical databases. What distinguished them wasn't what they knew but how they processed what everyone knows.

This is the most surprising finding. The intelligence community has billions of dollars of collection capabilities. The superforecasters had Google. And the superforecasters won. It suggests that the bottleneck in geopolitical prediction isn't information—it's processing. We're drowning in data and starving for wisdom.

The Limits of Superforecasting

Superforecasting works for certain kinds of questions:

Time-bound questions with clear resolution criteria. "Will X happen by Y date?" can be scored. "Was X good for society?" cannot.

Questions where base rates and evidence are available. If you know that 10% of similar countries have had coups in similar circumstances, that's useful. If every situation is genuinely unprecedented, base rates don't help.

Questions where the relevant information is accessible. Superforecasters can analyze public information well. They can't access what's in a dictator's head or predict genuine black swans.

For long-term, open-ended, unprecedented questions, superforecasting techniques help but don't guarantee accuracy. The further out you go, the more uncertainty compounds. Tetlock himself emphasizes that superforecasting works best for 3-18 month time horizons.

There's also the "unknown unknowns" problem. Superforecasters are good at weighting known factors. They're not good at anticipating factors that haven't been considered. Black swans—truly surprising events—remain difficult to predict by definition. Superforecasting improves our odds; it doesn't eliminate uncertainty.

The Uncomfortable Implication

Tetlock's research suggests that the expert commentators we listen to (the pundits, the analysts, the op-ed writers) often do little better than chance.

This isn't because they're stupid. It's because the incentives are wrong. Television rewards confidence, not calibration. Newspapers want vivid takes, not hedged probabilities. The experts who rise to prominence are the hedgehogs—confident, articulate, wrong.

Meanwhile, the best forecasters are boring. They speak in probabilities. They hedge. They update. They don't have grand theories. They don't make good TV.

We've built media and institutional systems that select against accuracy. The people we listen to are optimized for attention, not truth. The people who would actually help us understand the future are too boring to get airtime.

The solution isn't to find better pundits. It's to change what we reward. Track records. Calibration scores. Brier scores. The technology exists. The question is whether we care enough to use it.
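A Brier score is simple to compute. A minimal sketch of the common binary form, which runs from 0 (perfect) to 1. Tetlock's books use Brier's original formulation, which sums over both outcomes and so doubles every score on a yes/no question, running from 0 to 2. The forecasters and outcomes below are hypothetical.

```python
def brier_score(forecasts, outcomes):
    """Mean squared gap between forecast probabilities and outcomes
    (True/1 if the event happened, False/0 if not). 0 is perfect; lower is better."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equal-length, non-empty sequences")
    return sum((p - float(o)) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


outcomes = [1, 1, 0, 0]            # hypothetical: two events happened, two didn't
pundit = [0.9, 0.9, 0.9, 0.9]      # always confident
fox = [0.7, 0.6, 0.4, 0.3]         # hedged, but leaning the right way
coin_flip = [0.5] * 4              # always 50%
for name, f in [("pundit", pundit), ("fox", fox), ("coin flip", coin_flip)]:
    print(f"{name}: {brier_score(f, outcomes):.3f}")
# pundit: 0.410
# fox: 0.125
# coin flip: 0.250
```

Because the penalty is squared, confident misses dominate the score, which is exactly the behavior a public track record would expose.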

Imagine a world where every pundit had a public calibration score. Where newspapers listed forecasters' track records next to their bylines. Where we could see, transparently, who actually knew what they were talking about. We have the data to do this. We just don't do it—because it would embarrass too many important people.

The Takeaway

Superforecasters are regular people who follow specific practices: thinking in probabilities, updating frequently, breaking problems into components, seeking disconfirming evidence, and holding beliefs with appropriate confidence.

These practices can be taught. Teams of superforecasters consistently outperform both individuals and intelligence analysts. The techniques of good forecasting are knowable and implementable.

The gap between how we currently make predictions and how we could make them is enormous. We listen to confident experts who are reliably wrong. We could instead track calibration, reward accuracy, and learn who actually knows what they're talking about.

The forecast for whether we'll do this is uncertain. But the evidence suggests we should.

Further Reading

- Tetlock, P. E. (2005). Expert Political Judgment: How Good Is It? How Can We Know? Princeton University Press.
- Tetlock, P. E., & Gardner, D. (2015). Superforecasting: The Art and Science of Prediction. Crown.
- Mellers, B., et al. (2014). "Psychological Strategies for Winning a Geopolitical Forecasting Tournament." Psychological Science.

This is Part 4 of the Collective Intelligence series. Next: "Groupthink"
