Hybrid Retrieval: Combining Vectors and Graphs


Series: Graph RAG | Part: 7 of 10

Neither vector search nor graph traversal alone is sufficient for production AI agents.

Vector search casts a wide net—it finds semantically similar content even when terminology varies. But it misses structured relationships and fails at multi-hop reasoning.

Graph traversal follows precise relationships—it captures dependencies, hierarchies, and causal chains. But it requires exact matches to starting entities and can't handle semantic ambiguity.

The solution isn't choosing one or the other. It's combining them.

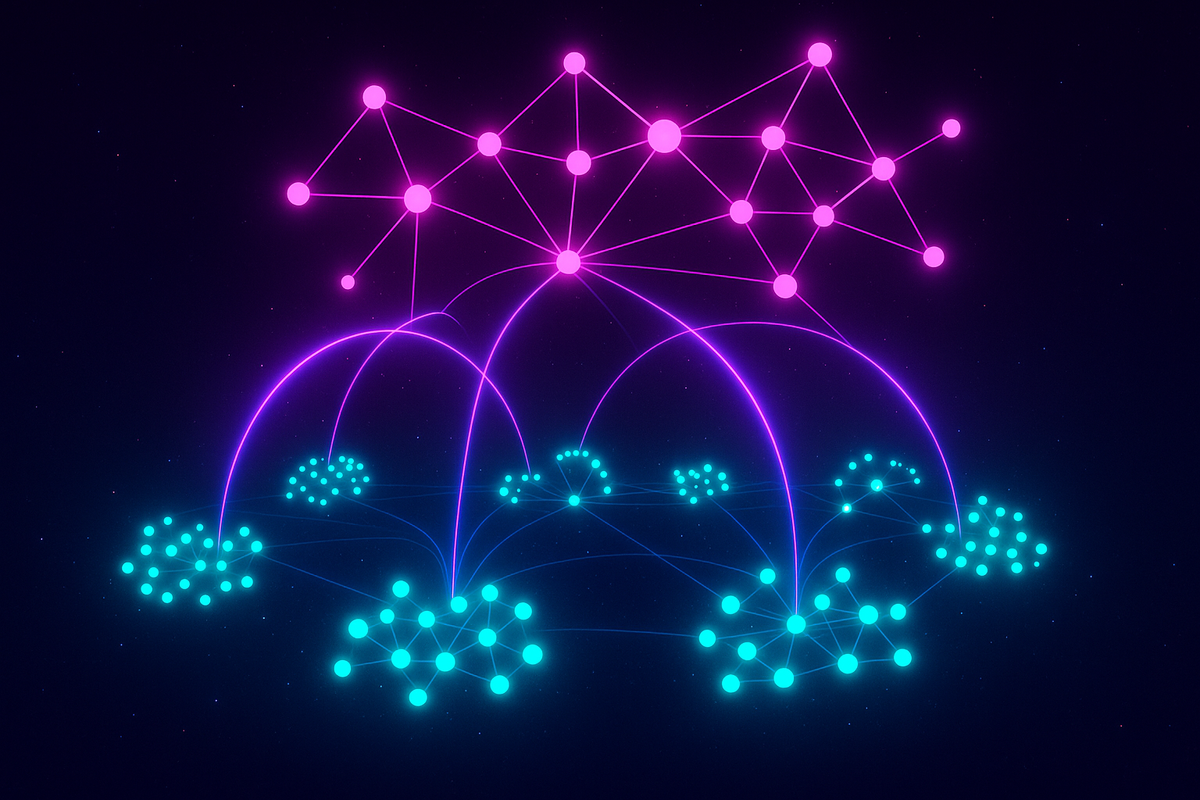

Hybrid retrieval uses vector similarity to find starting points, then graph traversal to explore relationships, then vector ranking to score results. It's the best of both approaches: the recall of vector search with the precision of graph structure.

This is how production Graph RAG systems actually work.

The Failure Modes of Pure Approaches

Pure Vector Search

Strength: Recall through semantic similarity

- Handles paraphrase and terminology variation

- Works when you don't know exact entity names

- Covers the long tail of related concepts that are never explicitly mentioned

Weakness: No structural reasoning

- Can't follow dependency chains

- Misses transitive relationships

- Conflates semantic similarity with logical connection

Example failure:

Query: "What services would break if we remove the auth system?"

Vector search finds documents mentioning auth and services, but can't traverse the dependency graph to find indirect dependencies.

Pure Graph Traversal

Strength: Precise structural reasoning

- Follows relationship chains correctly

- Captures transitive dependencies

- Respects relationship types and directionality

Weakness: Requires exact entity matches

- Fails if the user's query doesn't match entity names

- Can't handle semantic similarity

- Brittle to terminology variation

Example failure:

Query: "What depends on the login service?"

Graph has an entity "Authentication Service"—not "login service." Pure graph traversal finds nothing because the starting entity doesn't match.

The Hybrid Architecture

Stage 1: Vector-Based Entity Grounding

Use vector similarity to map the user's query to graph entities:

Query: "What happens if we remove the login system?"

1. Embed the query

2. Embed all entity labels + descriptions (done once at index time, not per query)

3. Find top-K entities by cosine similarity

→ "Authentication Service" (0.92)

→ "Session Management" (0.78)

→ "User Login API" (0.71)

This step grounds the query in the knowledge graph. Even if the user says "login system" and the graph has "Authentication Service," vector similarity bridges the gap.

Key insight: Vector similarity is excellent for entity linking. It handles synonyms, abbreviations, and paraphrase naturally.
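
The grounding step can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the three-dimensional vectors are made-up stand-ins for real embeddings, and the names `cosine` and `ground_query` are hypothetical helpers introduced here.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def ground_query(query_vec, entity_vecs, k=3):
    """Return the k entities most similar to the query, best first."""
    scored = [(name, cosine(query_vec, vec)) for name, vec in entity_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy vectors standing in for real embeddings (values invented for illustration).
entity_vecs = {
    "Authentication Service": [0.9, 0.1, 0.0],
    "Session Management": [0.6, 0.5, 0.1],
    "Billing Service": [0.0, 0.2, 0.9],
}
query_vec = [1.0, 0.2, 0.0]  # pretend embedding of "login system"

top_entities = ground_query(query_vec, entity_vecs, k=2)
for name, score in top_entities:
    print(f"{name}: {score:.2f}")
```

A real system would replace the linear scan with an approximate nearest-neighbor index, but the contract stays the same: a query vector goes in, a ranked list of candidate entities comes out.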

Stage 2: Graph-Based Relationship Exploration

Once you have starting entities, traverse the graph to find connected information:

Starting entity: Authentication Service

Graph traversal:

1. Find all services with DEPENDS_ON edges pointing to Authentication Service

2. Find all configurations with USES edges

3. Find all documentation with DESCRIBES edges

4. Optionally: follow transitive dependencies (services depending on services that depend on auth)

This step provides structure. You're not searching for similar text—you're following explicit relationships encoded in the graph.

Key insight: Graph traversal gives you structured paths: entity → relationship → entity. This is what enables multi-hop reasoning.
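
As a rough sketch of the traversal step, the function below walks incoming DEPENDS_ON edges breadth-first and records how many hops away each dependent is. The edge list and service names other than "Authentication Service" are hypothetical; a graph database would do this with a path query rather than in application code.

```python
from collections import deque

# Hypothetical edge list: (source, relationship, target).
EDGES = [
    ("Checkout Service", "DEPENDS_ON", "Payment Service"),
    ("Payment Service", "DEPENDS_ON", "Authentication Service"),
    ("Profile Service", "DEPENDS_ON", "Authentication Service"),
    ("Authentication Service", "DEPENDS_ON", "User Database"),
]

def find_dependents(edges, start, max_hops=None):
    """Breadth-first walk over incoming DEPENDS_ON edges.

    Returns {entity: hop_count} for everything that depends on `start`,
    directly or transitively. `max_hops` caps the traversal depth.
    """
    incoming = {}
    for source, rel, target in edges:
        if rel == "DEPENDS_ON":
            incoming.setdefault(target, []).append(source)

    hops = {}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if max_hops is not None and dist >= max_hops:
            continue
        for dependent in incoming.get(node, []):
            # Skip nodes already seen so cycles cannot loop forever.
            if dependent not in hops and dependent != start:
                hops[dependent] = dist + 1
                queue.append((dependent, dist + 1))
    return hops

dependents = find_dependents(EDGES, "Authentication Service")
print(dependents)
```

The hop count is kept because later ranking stages can use it as the path distance. Passing `max_hops=1` restricts the result to direct dependents only.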

Stage 3: Vector-Based Result Ranking

You have a subgraph of relevant entities and relationships. Now retrieve the actual text and rank by relevance:

Retrieved subgraph:

- Authentication Service

- 12 dependent services

- 5 configuration files

- 8 documentation pages

For each retrieved entity:

1. Fetch associated text chunks

2. Compute vector similarity to original query

3. Rank by combined score: graph proximity (shorter paths score higher) + vector similarity

This step ensures the final results are semantically relevant to the user's intent, not just structurally connected.

Key insight: Vector similarity provides final ranking. Not all connections are equally relevant—surface the ones semantically closest to the query.

Scoring and Fusion Strategies

How do you combine graph distance and vector similarity into a single relevance score?

Weighted Linear Combination

score = α × vector_similarity + (1-α) × graph_relevance

where:

vector_similarity = cosine(query_embedding, chunk_embedding)

graph_relevance = 1 / (1 + path_distance)

Tune α based on your use case:

- α = 0.7: Prioritize semantic similarity (good for exploratory queries)

- α = 0.3: Prioritize graph structure (good for dependency analysis)
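
To see how α shifts the ranking, here is a small sketch of the formula above. The two candidates and their similarity and distance values are invented for illustration, and `hybrid_score` and `rank` are hypothetical helper names.

```python
def graph_relevance(path_distance):
    """Map a hop count to a score in (0, 1]; closer entities score higher."""
    return 1.0 / (1.0 + path_distance)

def hybrid_score(vector_similarity, path_distance, alpha=0.5):
    """Weighted linear combination of the semantic and structural signals."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * vector_similarity + (1.0 - alpha) * graph_relevance(path_distance)

def rank(candidates, alpha):
    """Sort (label, vector_similarity, path_distance) tuples, best first."""
    return sorted(
        candidates,
        key=lambda c: hybrid_score(c[1], c[2], alpha),
        reverse=True,
    )

# Invented candidates: one structurally close, one semantically close.
candidates = [
    ("Payment Service docs", 0.55, 1),
    ("Blog post on auth trends", 0.80, 4),
]

print(rank(candidates, alpha=0.7)[0][0])  # semantic signal dominates
print(rank(candidates, alpha=0.3)[0][0])  # structural signal dominates
```

With these numbers the top result flips between the two settings, which is the behavior the weight is meant to control. Note that this combination assumes both signals live on comparable scales; cosine similarity and 1 / (1 + distance) both fall in a similar range here, but other signals may need normalizing first.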

Reciprocal Rank Fusion

Rank results separately by vector similarity and graph distance, then combine ranks:

RRF_score = Σ (1 / (k + rank_i))

where:

rank_i = position in ranked list from method i

k = constant (usually 60)

This approach doesn't require tuning weights—it treats both signals as equally important and fuses their rankings.
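
A minimal sketch of the fusion step, assuming each retrieval method has already produced its own ordered list. The document ids are placeholders, and `reciprocal_rank_fusion` is a hypothetical function name.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists into one.

    Each item scores 1 / (k + rank) per list it appears in (rank starts at 1);
    items missing from a list simply contribute nothing for that list.
    """
    scores = {}
    for ranking in rankings:
        for position, item in enumerate(ranking, start=1):
            scores[item] = scores.get(item, 0.0) + 1.0 / (k + position)
    return sorted(scores, key=lambda item: scores[item], reverse=True)

by_vector = ["doc_a", "doc_b", "doc_c"]  # ordered by vector similarity
by_graph = ["doc_b", "doc_c", "doc_a"]   # ordered by graph distance

fused = reciprocal_rank_fusion([by_vector, by_graph])
print(fused)
```

Because only positions matter, the raw scores from each method never need to be put on a common scale, which is the main practical appeal of this approach.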

Graph-Weighted Vector Scores

Use graph structure to boost semantically similar results that are also well-connected:

score = vector_similarity × (1 + log(1 + degree(entity)))

where:

degree(entity) = number of edges connected to the entity

High-degree entities (central nodes in the graph) get a boost. This surfaces well-connected concepts that are also semantically relevant.
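
A short sketch of the degree boost. It takes the logarithm of 1 + degree so that an isolated entity (degree 0) keeps its raw similarity instead of hitting log(0); the natural logarithm is an assumption, since the formula does not name a base, and the example values are invented.

```python
import math

def degree_boosted_score(vector_similarity, degree):
    """Scale similarity by a log-damped function of the entity's edge count."""
    if degree < 0:
        raise ValueError("degree cannot be negative")
    return vector_similarity * (1.0 + math.log(1 + degree))

isolated = degree_boosted_score(0.80, degree=0)  # no boost applied
hub = degree_boosted_score(0.60, degree=12)      # central node gets lifted

print(f"isolated={isolated:.2f} hub={hub:.2f}")
```

The logarithm keeps very large hubs from swamping everything else, but a hub can still outrank a more similar leaf node, as it does here. Whether that is desirable depends on the graph, so treat the boost as something to validate against real queries.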

Query Patterns and Hybrid Strategies

Different query types benefit from different hybrid strategies:

Exploratory Queries: "What is X?"

Strategy: Vector-heavy with graph expansion

- Vector search to find documents about X

- Light graph traversal to find directly related entities

- Rank results by vector similarity to the query

The query is about content, not structure. Use graphs to expand slightly beyond exact matches, but prioritize semantic relevance.

Dependency Queries: "What depends on X?"

Strategy: Graph-heavy with vector grounding

- Vector search to identify X in the graph

- Graph traversal to follow DEPENDS_ON edges

- Return structured results with minimal vector ranking

The answer is structural. Vector search only grounds the starting entity—everything else is pure graph traversal.

Synthesis Queries: "How do X and Y relate?"

Strategy: Balanced hybrid

- Vector search to identify entities X and Y

- Graph traversal to find paths connecting them

- Rank the paths by their semantic similarity to the original query

You need structure (the path) and semantics (which path best matches the user's intent).

Impact Analysis: "What happens if we change X?"

Strategy: Graph traversal with vector filtering

- Vector search to identify X

- Graph traversal to find transitive dependencies

- Filter results by vector similarity to impact-related terms (failure, break, affect)

Structure drives the answer (what's connected) but semantics filters noise (only surface entities where the documentation discusses impact).

Implementation Patterns

Pattern 1: Two-Database Architecture

Store vectors and graph separately:

- Vector database (Pinecone, Weaviate, Qdrant): entity embeddings, chunk embeddings

- Graph database (Neo4j, TigerGraph, Neptune): entities, relationships, structure

Query pipeline:

- Vector DB: Find entities similar to query

- Graph DB: Traverse from those entities

- Vector DB: Rank retrieved chunks

- Combine and return

Tradeoff: Two databases means more infrastructure, but each is optimized for its task.
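
The query pipeline above can be sketched as a single function that takes each store's operation as a callable. Everything here is a stand-in: the dictionaries replace real databases, `word_overlap` is a crude substitute for vector similarity, and none of the names correspond to an actual client library.

```python
def hybrid_retrieve(query, find_entities, traverse, fetch_chunks, score_chunk, top_n=5):
    """Wire the pipeline stages together; each callable wraps one store call."""
    seeds = find_entities(query)              # vector DB: query -> entity names
    related = set(seeds)
    for seed in seeds:
        related.update(traverse(seed))        # graph DB: entity -> connected entities
    scored = []
    for entity in sorted(related):
        for chunk in fetch_chunks(entity):    # vector DB: entity -> text chunks
            scored.append((score_chunk(query, chunk), entity, chunk))
    scored.sort(key=lambda row: row[0], reverse=True)
    return scored[:top_n]

# In-memory stand-ins for the two databases (invented data).
GRAPH = {"Authentication Service": ["Payment Service"]}
CHUNKS = {
    "Authentication Service": ["Auth issues tokens for login."],
    "Payment Service": ["Payment calls auth before charging a card."],
}

def word_overlap(query, chunk):
    """Fraction of query words that appear in the chunk."""
    q = set(query.lower().split())
    c = set(chunk.lower().replace(".", "").split())
    return len(q & c) / len(q) if q else 0.0

results = hybrid_retrieve(
    "login auth",
    find_entities=lambda q: ["Authentication Service"],
    traverse=lambda e: GRAPH.get(e, []),
    fetch_chunks=lambda e: CHUNKS.get(e, []),
    score_chunk=word_overlap,
)
for score, entity, chunk in results:
    print(f"{score:.2f} {entity}: {chunk}")
```

Passing the store operations in as callables keeps the orchestration independent of any particular vendor, which also makes the pipeline easy to test without running either database.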

Pattern 2: Unified Embedding-Augmented Graph

Store both graph structure and embeddings in a single graph database:

Node: Authentication Service

Properties:

- name: "Authentication Service"

- embedding: [0.23, -0.45, 0.67, ...]

- description: "Handles user login and session management"

Edges: DEPENDS_ON, CALLS, CONFIGURED_BY

Query directly in graph DB using both structural queries and vector similarity.

Tradeoff: Simpler infrastructure, but vector search inside a graph database is often slower or less tunable than in a dedicated vector DB, so benchmark it at your expected scale.

Pattern 3: Vector Index Over Graph Projections

Build vector embeddings for subgraphs or paths:

For each entity:

Embed(entity description + immediate neighbors + relationship types)

This creates "contextual embeddings" that include graph structure. Vector search now captures some structural information.

Tradeoff: Less precise than pure graph traversal but enables fast approximate structural search.
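
One way to build the text for such a contextual embedding is sketched below. The serialization format is an assumption (any consistent template would do), `contextual_text` is a hypothetical helper, and the edges other than the node's own name and description are invented. The resulting string would then be passed to whatever embedding model the system uses.

```python
def contextual_text(entity, descriptions, edges):
    """Serialize an entity, its description, and its one-hop edges as text."""
    parts = [f"{entity}: {descriptions.get(entity, '')}"]
    for source, rel, target in edges:
        # Include edges in both directions so incoming links are captured too.
        if source == entity or target == entity:
            parts.append(f"{source} {rel} {target}")
    return ". ".join(parts)

descriptions = {
    "Authentication Service": "Handles user login and session management",
}
edges = [
    ("Payment Service", "DEPENDS_ON", "Authentication Service"),
    ("Authentication Service", "CONFIGURED_BY", "AuthConfig"),
    ("Payment Service", "CALLS", "Billing API"),  # unrelated edge, skipped
]

text = contextual_text("Authentication Service", descriptions, edges)
print(text)
```

Only immediate neighbors are included here. Pulling in two-hop neighbors adds more structure but also more noise, and the text can quickly exceed the embedding model's input limit for high-degree nodes.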

Real-World Example: Technical Documentation Search

User query: "How do I configure rate limiting for the API gateway?"

Hybrid pipeline:

1. Vector search over entity embeddings:

Query: "rate limiting API gateway configuration"

Results:

- API Gateway Service (0.89)

- Rate Limiting Module (0.84)

- Configuration Management (0.72)

2. Graph traversal from top entities:

API Gateway Service -[USES]-> Rate Limiting Module

Rate Limiting Module -[CONFIGURED_BY]-> RateLimitConfig

RateLimitConfig -[DOCUMENTED_IN]-> ConfigGuide.md

3. Vector search over retrieved documents:

Query: "configure rate limiting"

Rank chunks from:

- ConfigGuide.md

- API Gateway documentation

- Rate Limiting module docs

4. Assemble final answer:

- Graph structure: API Gateway → uses → Rate Limiting → configured by → RateLimitConfig

- Supporting text: relevant chunks from ConfigGuide.md

- Generate response citing both structure and documentation

The vector search ensures we find the right starting points ("API Gateway Service", "Rate Limiting Module") even though the query never uses those exact entity names.

The graph traversal surfaces the configuration relationship that might not appear in any single document.

The final vector ranking ensures the returned documentation chunks are the most relevant sections, not just any mention of rate limiting.

When Hybrid Matters Most

Hybrid retrieval becomes essential when:

- Terminology varies: Users describe entities differently than documentation does

- Information is distributed: No single document contains the complete answer

- Structure drives correctness: Dependencies, hierarchies, and relationships define the answer

- Semantic nuance matters: Not all structurally connected entities are equally relevant

These are precisely the conditions in enterprise knowledge management, technical documentation, research synthesis, and customer support—the domains where Graph RAG sees production use.

The Engineering Challenge

Building hybrid retrieval is more complex than pure vector search:

- Two or three databases to maintain

- Synchronization between vector and graph stores

- Tuning weights and fusion strategies

- Monitoring both vector and graph query performance

But the complexity is justified. Hybrid retrieval answers questions that pure approaches can't. It's not a nice-to-have—it's the only viable architecture for AI agents that need to reason about structured knowledge.


This is Part 7 of the Graph RAG series, exploring how knowledge graphs solve the limitations of naive vector retrieval.

